Hi, everyone.

I have a large object (500K vertices, 3M indicies) and I'm drawing only part of it (about 240k indicies).

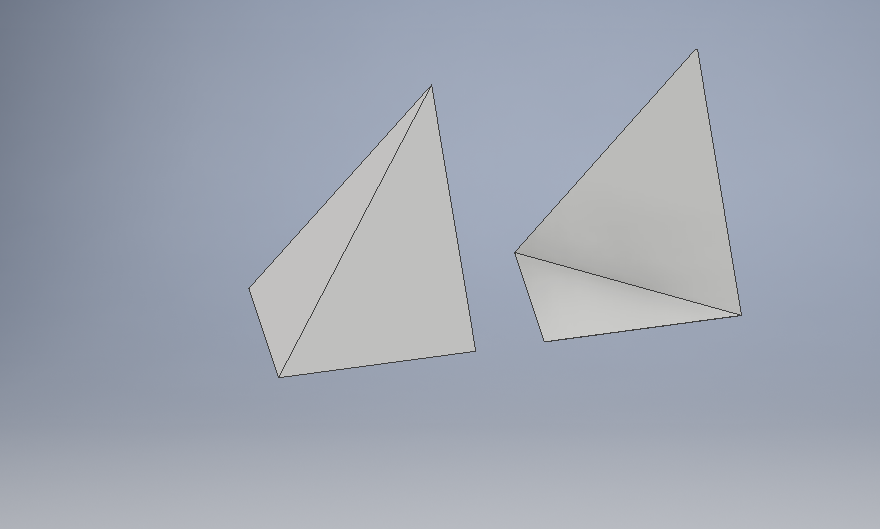

I'm selecting slices of the index buffer using my update function. I get around 1000FPS when rendering X:0-200 and Y:0-200, but rendering gets slower with rising values for either X or Y. Size of index buffer is constant, so the problem is not in the size of IBO. I used time.perfcounter() to measure the time required by the glDrawElements call. When plotted over distance from 0 it looks like this:

It makes no sense to me, i was expecting that the time needed to fetch 1st or last an element from VBO would be the same. Thus time needed to render first 240k or last 240k indicies should be the same.

I would be glad if someone could explain what is going on.

Any help is welcome.

Selection what to draw is being made using this function:

def update(self, x_from, x_to, y_from, y_to):

x_from = int(max(0,x_from))

x_to = int(min(x_to,1024))

y_from = int(max(0,y_from))

y_to = int(min(y_to,512))

partial_buffer = self.index_buffer[x_from:x_to,y_from*6:y_to*6].flatten()

self.index_count = int(len(partial_buffer))

glBindVertexArray(self.VAO)

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, self.IBO)

glBufferData(GL_ELEMENT_ARRAY_BUFFER,partial_buffer.nbytes,partial_buffer,

GL_STREAM_DRAW)

My render function:

def render(self, shader):

shader.use()

Textures.get("terrain_texture_array")

glBindVertexArray(self.VAO)

glDrawElements(GL_TRIANGLES, self.index_count, GL_UNSIGNED_INT, None)I used glFinish() before and after to make sure I'm only measuring the time of the draw call.