These days, I've been turning my attention towards AI. In the past, I spent so much time working on the graphics components of the engine that I never put the effort into building a good AI system. When I was developing Genesis SEED, I wrote a complex AI system, but it was too unwieldy and complicated, so I decided to start from scratch when working on the AI system that would go into Auxnet. It's still in its beginning stages, but in the long term I'd like to release the AI system as an open source project. I want to build a general AI framework that will work for many different game types.

How and why do I want to make a general AI framework? I want to build this game engine in a modular way to allow for future expansion. To achieve this, the code will naturally take the form of a framework with attached plug-ins. I know that AI is very game-specific, but what I want to do is provide a collection of techniques that AI agents can use to complete their tasks. After that, the main architectural design issue will come down to how to separate responsibilities between the AI engine and the game engine. I'm not an AI expert, but I have experience designing architectures and feel that I'll be able to put together a good system.

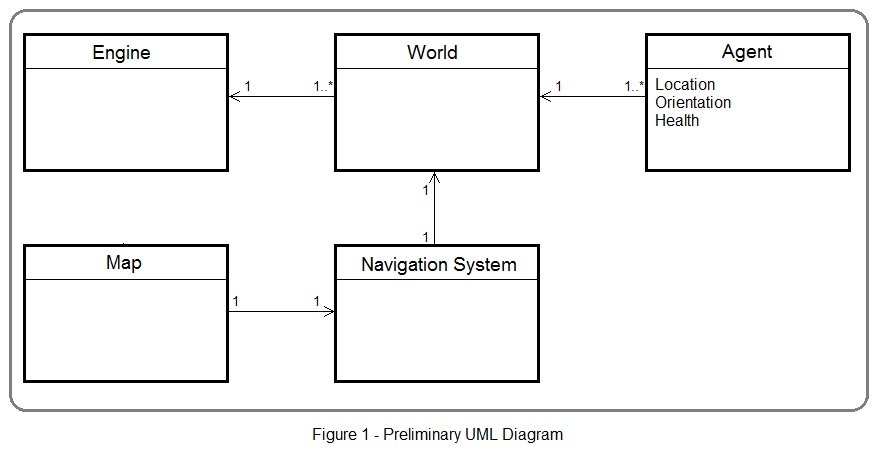

The AI system will have three main parts: the engine, worlds, and agents. The engine holds the worlds and is responsible for initializing and destroying the system. Multithreading support will also be handled in the engine. Worlds contain agents as well as the navigation system and world geometry. Keeping worlds separate will allow an application to use one AI engine to process the AI for agents in unrelated and unconnected areas. Agents are the actual AI entities, and each one is connected to an entity in the game engine. There is no direct link in code between game engine entities and AI system agents, so the agents will need to store copies of some of the game engine entity's data, such as position and health information. I've given a very simple UML diagram below with some of my current thoughts. It will grow by leaps and bounds by the end of the project.

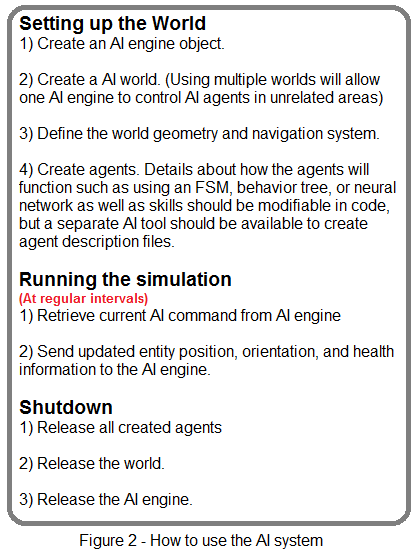
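
To make the engine/world/agent split concrete, here is a minimal C++ sketch. The class names (`AIEngine`, `AIWorld`, `AIAgent`) and their members are hypothetical, not a finished API; what it shows is the ownership: the engine owns worlds, worlds own agents, and an agent refers to its game entity only through an integer ID plus copied data such as position and health.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

// An agent mirrors one game entity. It knows the entity only by ID and keeps
// its own copies of the entity data the AI needs (refreshed by the game).
class AIAgent {
public:
    explicit AIAgent(int entityId) : entityId_(entityId) {}
    int entityId() const { return entityId_; }

    Vec2 position;          // copy of the game entity's position
    float health = 100.0f;  // copy of the game entity's health

private:
    int entityId_;
};

// A world owns its agents; navigation data and geometry would live here too.
class AIWorld {
public:
    explicit AIWorld(std::string name) : name_(std::move(name)) {}

    AIAgent& createAgent(int entityId) {
        assert(findAgent(entityId) == nullptr && "entity already has an agent");
        agents_.push_back(std::make_unique<AIAgent>(entityId));
        return *agents_.back();
    }
    AIAgent* findAgent(int entityId) {
        for (auto& agent : agents_)
            if (agent->entityId() == entityId) return agent.get();
        return nullptr;
    }
    std::size_t agentCount() const { return agents_.size(); }
    const std::string& name() const { return name_; }

private:
    std::string name_;
    std::vector<std::unique_ptr<AIAgent>> agents_;
};

// The engine owns independent worlds and controls their lifetime.
class AIEngine {
public:
    AIWorld& createWorld(const std::string& name) {
        worlds_.push_back(std::make_unique<AIWorld>(name));
        return *worlds_.back();
    }
    void shutdown() { worlds_.clear(); }  // destroys every world and its agents
    std::size_t worldCount() const { return worlds_.size(); }

private:
    std::vector<std::unique_ptr<AIWorld>> worlds_;
};
```

Worlds and agents are held through `std::unique_ptr` so that the references returned by `createWorld` and `createAgent` stay valid when the vectors grow, and two worlds never see each other's agents.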

I want to model my AI system on other middleware SDKs. I've used many different kinds of middleware, including graphics APIs, physics SDKs, networking engines, and scripting languages. Of all the different middleware libraries I've used, which is most like the AI engine that I want to build? Believe it or not, this system will be most similar to a physics engine. Think about it: in a physics engine, you first set up the world or scene, then add actors and physics bodies. After that, you run the simulation, check the new position and orientation of your actors, and update them in the engine. An AI engine is quite similar in many ways. First, you have to set up the world and tell the AI engine how the level is laid out and what potential colliders and dangers are in it. Then you create AI agents that are connected to game entities to populate the world. The AI engine runs the simulation, and then the entities in the game engine check their AI components to see what state changes have been made. That's the entire system in a nutshell.
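
The physics-engine analogy maps onto a four-step loop: set up the world, add agents, step the simulation, then read back state changes. The sketch below assumes a hypothetical `AIScene` class whose only "decision" is to flee from the nearest point danger; it stands in for whatever real decision-making would run during `step()`, and the radius and distance constants are arbitrary.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

struct Agent {
    Vec2 position;              // copied in from the game entity each sync
    Vec2 target;                // written by the AI during step()
    bool hasNewTarget = false;  // the state change the game engine polls for
};

class AIScene {
public:
    // 1) Setup: describe the level (here, only point dangers).
    void addDanger(Vec2 p) { dangers_.push_back(p); }

    // 2) Populate: one agent per game entity; returns the agent's index.
    int addAgent(Vec2 start) {
        Agent a;
        a.position = start;
        agents_.push_back(a);
        return static_cast<int>(agents_.size()) - 1;
    }

    // Game -> AI: push the entity's latest position.
    void setPosition(int id, Vec2 p) { agentAt(id).position = p; }

    // 3) Simulate: each agent flees from the nearest danger inside kAlertRadius.
    void step() {
        for (Agent& a : agents_) {
            a.hasNewTarget = false;
            float bestDist2 = kAlertRadius * kAlertRadius;
            const Vec2* nearest = nullptr;
            for (const Vec2& d : dangers_) {
                float dx = a.position.x - d.x, dy = a.position.y - d.y;
                float dist2 = dx * dx + dy * dy;
                if (dist2 < bestDist2) { bestDist2 = dist2; nearest = &d; }
            }
            // Nothing close enough, or standing exactly on it (no flee direction).
            if (nearest == nullptr || bestDist2 == 0.0f) continue;
            float len = std::sqrt(bestDist2);
            a.target.x = a.position.x + (a.position.x - nearest->x) / len * kFleeDistance;
            a.target.y = a.position.y + (a.position.y - nearest->y) / len * kFleeDistance;
            a.hasNewTarget = true;
        }
    }

    // 4) AI -> game: the entity reads back what changed.
    const Agent& agent(int id) const {
        assert(id >= 0 && id < static_cast<int>(agents_.size()));
        return agents_[id];
    }

private:
    Agent& agentAt(int id) {
        assert(id >= 0 && id < static_cast<int>(agents_.size()));
        return agents_[id];
    }

    static constexpr float kAlertRadius = 10.0f;
    static constexpr float kFleeDistance = 5.0f;
    std::vector<Vec2> dangers_;
    std::vector<Agent> agents_;
};
```

As with a physics engine, the game never reaches into the AI's internals: it writes positions in before `step()` and polls `hasNewTarget` afterwards.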

For this to work, I'll need a very simple interface. The AI engine will be responsible for all decisions. It will tell the game entity to do simple tasks like move forward, turn, or shoot; the game engine should then perform the task and tell the AI engine when it has completed it. The tasks that the AI engine relays to the game engine should be simple enough not to need any complex intermediary AI. For example, if the AI engine returns a "go to" command, the entity should be able to reach that point by walking in a straight line, without worrying about pathfinding. For complex paths, the AI engine should give a series of "go to" commands until the agent has reached its destination. In this way, the AI engine will be the brains, and the game engine will act like the nervous system and make the agent's body move. So the interface needs to let the game engine receive instructions from the AI engine, and let the AI engine receive position information and whether or not each instruction has been completed. This information will not need to be sent back and forth every frame, but either as needed or at regular intervals set by the developer. Game engines that use a component-based entity system can add an AI component to process instructions from the AI engine and to pass the entity's location information on to the AI engine. The game engine should also be able to send the AI engine events that are not tied to a specific entity, such as level events, so the AI agents will be able to respond to those events as well.
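
A minimal sketch of that brain/nervous-system contract, assuming hypothetical `Command` and `AgentBrain` types: the AI queues straight-line `GoTo` commands for a path, the game engine polls the current command, executes it without any pathfinding of its own, and reports completion along with the entity's new position. The `"alarm"` level event and the turn-around reaction are made up purely for illustration.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <string>
#include <vector>

struct Vec2 { float x, y; };

enum class CommandType { GoTo, Turn, Shoot };

// A task simple enough for the game engine to execute with no AI of its own.
struct Command {
    CommandType type;
    Vec2 point{0.0f, 0.0f};  // GoTo target, reachable in a straight line
    float angle = 0.0f;      // Turn amount in degrees
};

// AI side of one agent: the "brain" that hands out one command at a time.
class AgentBrain {
public:
    // A complex path becomes a series of straight-line GoTo commands.
    void followPath(const std::vector<Vec2>& waypoints) {
        for (const Vec2& w : waypoints) plan_.push_back(Command{CommandType::GoTo, w});
    }
    // Polled by the game engine (e.g. from an AI component on the entity).
    std::optional<Command> currentCommand() const {
        if (plan_.empty()) return std::nullopt;
        return plan_.front();
    }
    // Called by the game engine when it has finished the current command.
    void onCommandCompleted(Vec2 entityPosition) {
        assert(!plan_.empty() && "no command in progress");
        lastKnownPosition_ = entityPosition;
        plan_.pop_front();
    }
    // Level events are not tied to any entity; here an alarm replaces the plan.
    void onLevelEvent(const std::string& name) {
        if (name == "alarm") {
            plan_.clear();
            plan_.push_back(Command{CommandType::Turn, {0.0f, 0.0f}, 180.0f});
        }
    }
    Vec2 lastKnownPosition() const { return lastKnownPosition_; }

private:
    std::deque<Command> plan_;
    Vec2 lastKnownPosition_{0.0f, 0.0f};
};
```

Because the game only ever sees the front of the queue, it can poll as often or as rarely as the developer likes; nothing here requires a per-frame exchange.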

There's no way I'll be able to program all types of AI algorithms, so the AI engine will need to be not only flexible but also expandable. I'll have to build it so it can take add-ons written either in C++ or in scripting languages such as AngelScript and Lua. The add-on system should not be limited to supporting different types of levels and pathfinding. There should also be a way to make add-ons that affect how decisions are made, such as add-ons for ad-hoc rules, finite state machines, behavior trees, neural networks, and so on. Once a large library of add-ons has been created, building complex AIs will be a matter of choosing the proper base AI architecture (ad-hoc rules, finite state machines, etc.), adding skills, and performing some game-specific tweaking. These are my AI goals.
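
One way to make the decision-making architecture itself an add-on is a common interface plus a registry of factories. In this sketch, `DecisionMaker`, `AdHocRules`, `SimpleFSM`, and `AddOnRegistry` are hypothetical names and the health thresholds are arbitrary. Only C++ add-ons are shown; a script add-on (AngelScript or Lua) would need a binding that registers a factory the same way, which is left out here.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// What the game engine has told the agent (copied data, not engine pointers).
struct Perception {
    float health;
    bool enemyVisible;
};

// Interface every decision-making add-on implements.
class DecisionMaker {
public:
    virtual ~DecisionMaker() = default;
    virtual std::string decide(const Perception& p) = 0;  // returns an action name
};

// Add-on 1: stateless ad-hoc rules, checked in priority order.
class AdHocRules : public DecisionMaker {
public:
    std::string decide(const Perception& p) override {
        if (p.health < 25.0f) return "flee";
        if (p.enemyVisible) return "attack";
        return "patrol";
    }
};

// Add-on 2: a finite state machine; the result depends on the current state.
class SimpleFSM : public DecisionMaker {
public:
    std::string decide(const Perception& p) override {
        switch (state_) {
        case State::Patrol:
            if (p.enemyVisible) state_ = State::Attack;
            break;
        case State::Attack:
            if (p.health < 25.0f) state_ = State::Flee;
            else if (!p.enemyVisible) state_ = State::Patrol;
            break;
        case State::Flee:
            if (p.health >= 50.0f) state_ = State::Patrol;
            break;
        }
        return state_ == State::Patrol ? "patrol" : state_ == State::Attack ? "attack" : "flee";
    }

private:
    enum class State { Patrol, Attack, Flee };
    State state_ = State::Patrol;
};

// The engine creates decision makers by name, without knowing their types.
class AddOnRegistry {
public:
    using Factory = std::function<std::unique_ptr<DecisionMaker>()>;

    void add(const std::string& id, Factory factory) {
        assert(factory && "factory must be callable");
        factories_[id] = std::move(factory);
    }
    std::unique_ptr<DecisionMaker> create(const std::string& id) const {
        auto it = factories_.find(id);
        if (it == factories_.end()) return nullptr;
        return it->second();
    }

private:
    std::map<std::string, Factory> factories_;
};
```

The two add-ons differ in a way that matters when choosing a base architecture: the FSM remembers its state, so once it is fleeing it keeps fleeing until health recovers to 50, whereas the ad-hoc rules switch back to attacking as soon as health is 25 or more.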

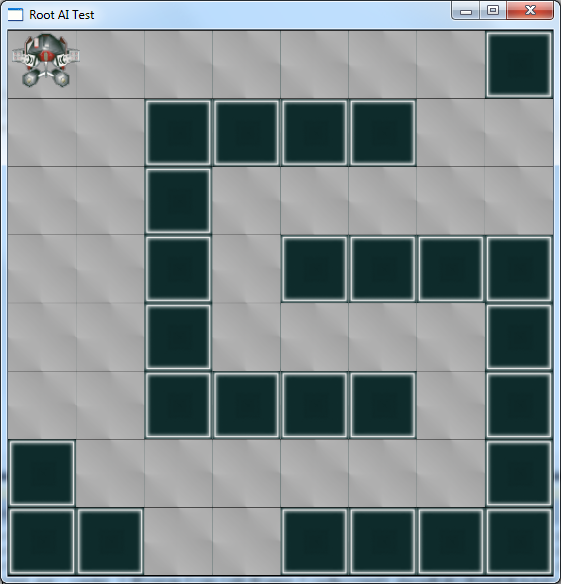

To help me build all of this, I've created a small 2D game with a little robot that has to navigate through a simple maze. I'll keep developing this demo and post updates on my blog and on YouTube.
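
A maze like this is a natural test for the idea of turning a complex path into a series of "go to" commands. The sketch below is an assumption about how that could look, not the demo's actual code: a breadth-first search on a 4-connected character grid (`#` is a wall) stands in for a real navigation system, and the hypothetical `toGoToWaypoints` keeps only the cells where the direction changes, so each consecutive pair of waypoints is a straight segment the game engine can walk without pathfinding.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <queue>
#include <string>
#include <utility>
#include <vector>

using Cell = std::pair<int, int>;  // (row, column)

// Breadth-first search on a 4-connected grid where '#' is a wall.
// Returns the shortest cell path from start to goal (both included),
// or an empty vector if the goal cannot be reached.
std::vector<Cell> findPath(const std::vector<std::string>& grid, Cell start, Cell goal) {
    const int rows = static_cast<int>(grid.size());
    const int cols = rows > 0 ? static_cast<int>(grid[0].size()) : 0;
    for (const std::string& row : grid) assert(static_cast<int>(row.size()) == cols);

    auto walkable = [&](int r, int c) {
        return r >= 0 && r < rows && c >= 0 && c < cols && grid[r][c] != '#';
    };
    if (!walkable(start.first, start.second) || !walkable(goal.first, goal.second)) return {};

    std::vector<std::vector<bool>> seen(rows, std::vector<bool>(cols, false));
    std::vector<std::vector<Cell>> parent(rows, std::vector<Cell>(cols, Cell{-1, -1}));
    std::queue<Cell> frontier;
    frontier.push(start);
    seen[start.first][start.second] = true;

    const int dr[] = {-1, 1, 0, 0};
    const int dc[] = {0, 0, -1, 1};
    while (!frontier.empty()) {
        Cell cur = frontier.front();
        frontier.pop();
        if (cur == goal) break;
        for (int k = 0; k < 4; ++k) {
            int r = cur.first + dr[k], c = cur.second + dc[k];
            if (walkable(r, c) && !seen[r][c]) {
                seen[r][c] = true;
                parent[r][c] = cur;
                frontier.push(Cell{r, c});
            }
        }
    }
    if (!seen[goal.first][goal.second]) return {};

    std::vector<Cell> path;
    for (Cell at = goal; at != start; at = parent[at.first][at.second]) path.push_back(at);
    path.push_back(start);
    std::reverse(path.begin(), path.end());
    return path;
}

// Keep only the cells where the direction changes, plus the goal, so each
// waypoint can be reached from the previous one by walking in a straight line.
std::vector<Cell> toGoToWaypoints(const std::vector<Cell>& path) {
    std::vector<Cell> waypoints;
    for (std::size_t i = 1; i < path.size(); ++i) {
        if (i + 1 == path.size()) {
            waypoints.push_back(path[i]);
            break;
        }
        Cell in{path[i].first - path[i - 1].first, path[i].second - path[i - 1].second};
        Cell out{path[i + 1].first - path[i].first, path[i + 1].second - path[i].second};
        if (in != out) waypoints.push_back(path[i]);
    }
    return waypoints;
}
```

BFS is enough for a small unweighted grid; on larger maps with varying movement costs, A* would be the usual replacement, and the waypoint compression would stay the same.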

Good idea so far. Though I would always suggest implementing a general approach in combination with a concrete use case (an actual game). This avoids a lot of refactoring later on :D

Nevertheless, here are some thoughts from my experience, since I use a similar system:

1. Multithreading and scripting languages like Lua are a really hard combination. Lua does not really support multithreading, so take this into account early in your design. Remember that the state of a single VM can't really be shared between threads unless the VM supports it, and Lua's does not (I use multiple stateless VMs to cover multi-core support).

2. You will need a lot of communication between the engine and the AI. The go-to command is a good idea and the right direction, but you will also need to react to external events, e.g. the entity's movement being blocked by another entity. Therefore, use an asynchronous, delayable message/event system.

3. There are some basic AI algorithms that are really performance-critical and should be part of the engine:

- pathfinding (you already have some navigation map/waypoint system in the engine).

- sensory data/scanning (build a query system to send async scan requests; you will need this a lot).

- steering (your go-to command, which will result in the steering of the AI, including common steering behaviors).

4. It is really important for an AI to be aware of its surroundings, its state, and current events. A good way to handle this is a stimulus system. External events, internal states, etc. can trigger a time-limited stimulus on your entity, which helps the AI make further decisions. Scanning the surroundings all the time is too performance-demanding, and scanning only every X ms is too slow: the latter results in a clearly visible delay in the decision-making process.

E.g. the sound of a gun could propagate a 'gun_sound' stimulus to all nearby NPCs. Each NPC's AI can then decide what to do with it (one runs away from it, another approaches the last known position of the sound, another becomes more careful as long as the stimulus is active, etc.).
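
The asynchronous, delayable message/event system suggested in point 2 could start out as small as this single-threaded sketch. `Message` and `MessageQueue` are hypothetical names, and the clock is assumed to be simulation time in seconds: senders post without waiting for a reply, and each message is delivered once its delay has elapsed, in time order, with ties broken by posting order. A multithreaded engine would additionally need a lock or per-thread queues.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <utility>
#include <vector>

struct Message {
    double deliverAt;   // simulation time in seconds
    unsigned long seq;  // tie-breaker: equal times keep posting order
    int receiverId;     // agent that should receive it
    std::string type;   // e.g. "movement_blocked"
};

// Orders the priority queue so the earliest (then oldest) message is on top.
struct LaterFirst {
    bool operator()(const Message& a, const Message& b) const {
        if (a.deliverAt != b.deliverAt) return a.deliverAt > b.deliverAt;
        return a.seq > b.seq;
    }
};

class MessageQueue {
public:
    using Handler = std::function<void(const Message&)>;
    explicit MessageQueue(Handler handler) : handler_(std::move(handler)) {}

    // The sender never blocks; a delay of 0 means "at the next dispatch".
    void post(double now, double delay, int receiverId, std::string type) {
        assert(delay >= 0.0);
        queue_.push(Message{now + delay, nextSeq_++, receiverId, std::move(type)});
    }

    // Delivers every message that is due; returns how many were delivered.
    int dispatch(double now) {
        int delivered = 0;
        while (!queue_.empty() && queue_.top().deliverAt <= now) {
            Message m = queue_.top();  // copy first so the handler may post safely
            queue_.pop();
            handler_(m);
            ++delivered;
        }
        return delivered;
    }
    bool empty() const { return queue_.empty(); }

private:
    std::priority_queue<Message, std::vector<Message>, LaterFirst> queue_;
    unsigned long nextSeq_ = 0;
    Handler handler_;
};
```

The delay parameter is what makes messages "delayable": the same mechanism can carry an immediate "movement_blocked" event or a reminder that should only fire half a second later.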
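
The stimulus idea from point 4 can be sketched under a few stated assumptions: hypothetical `Stimulus` and `Npc` types, a plain distance check in place of real sound propagation, and three made-up personalities that mirror the reactions in the gun-sound example (run away, approach the last known position, move carefully while the stimulus is active).

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

struct Vec2 { float x, y; };

enum class Personality { Coward, Hunter, Cautious };

// A time-limited stimulus, e.g. "gun_sound", and where it came from.
struct Stimulus {
    std::string type;
    Vec2 source;       // last known position of the cause
    double expiresAt;  // simulation time in seconds
};

struct Npc {
    Vec2 position;
    Personality personality;
    std::vector<Stimulus> stimuli;

    bool has(const std::string& type, double now) const {
        return std::any_of(stimuli.begin(), stimuli.end(), [&](const Stimulus& s) {
            return s.type == type && s.expiresAt > now;
        });
    }
};

// Gives every NPC within `radius` of `source` a stimulus lasting `duration`.
int propagate(std::vector<Npc>& npcs, const std::string& type, Vec2 source,
              float radius, double now, double duration) {
    assert(radius >= 0.0f && duration > 0.0);
    int reached = 0;
    for (Npc& npc : npcs) {
        float dx = npc.position.x - source.x, dy = npc.position.y - source.y;
        if (dx * dx + dy * dy <= radius * radius) {
            npc.stimuli.push_back(Stimulus{type, source, now + duration});
            ++reached;
        }
    }
    return reached;
}

// Drops stimuli whose time is up, so they stop influencing decisions.
void expireStimuli(std::vector<Npc>& npcs, double now) {
    for (Npc& npc : npcs) {
        npc.stimuli.erase(std::remove_if(npc.stimuli.begin(), npc.stimuli.end(),
                                         [now](const Stimulus& s) { return s.expiresAt <= now; }),
                          npc.stimuli.end());
    }
}

// Each NPC's AI decides for itself what an active stimulus means.
std::string react(const Npc& npc, double now) {
    if (!npc.has("gun_sound", now)) return "idle";
    switch (npc.personality) {
    case Personality::Coward: return "run_away";
    case Personality::Hunter: return "approach_last_known_position";
    case Personality::Cautious: return "move_carefully";
    }
    return "idle";
}
```

The event is pushed once to the NPCs in range instead of every NPC scanning its surroundings each frame, and the expiry time gives the "as long as the stimulus is active" behavior for free.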