Context

I recently started reading the book Artificial Intelligence for Humans, Volume 1: Fundamental Algorithms by Jeff Heaton. Upon finishing reading the chapter Normalizing Data, I decided to practice what I had just learnt to hopefully finally understand 100 % of a special algorithm : equilateral encoding.

Equilateral Encoding

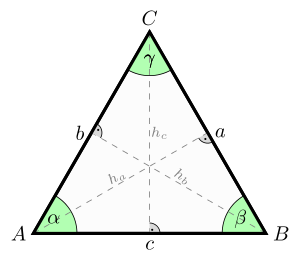

The purpose of this algorithm is to normalize N categories by creating an equilateral triangle of N - 1 dimensions. Each vertex in that triangle is a category and so, because of the properties of the equilateral triangle, it's easy to construct this structure with medians of 1 unit.

For selecting a category, all you need is a location vector of N - 1 dimensions that's ideally inside the equilateral triangle. For example, consider the vertex C and point c. The vertex C is 100% of a specified category, whilst the point c is 0% of that category. The vertices A and B are also 0% of C's category. In other words, the further away a location vector is from a vertex along its median axis, the less it belongs to the vertex`s category. Also, if the mentioned axis distance is greater than the length of a median (all medians have the same length in an equilateral triangle, which is the range of values in this context), then the location vector absolutely does not belong to the specified category.

The reason why we just don't select directly a category is because this algorithm is originally for machine learning. It quantifies qualitative data, shares the blame better and uses less memory than simpler algorithms, as it uses N - 1 dimensions.

The Application

First of, here's the link to my application : https://github.com/benoit-dubreuil/image-recognition-by-equilateral-encoding

How It Works

To be able to know the similarities between two images, a comparison must be done. Using the equilateral encoding algorithm, a pixel versus pixel comparison is the only way to do so and thus shapes do not count towards the similarities between the two images.

What category should we use to compare images? Well, colors of course!

Here are the colors I specified as categories :

- Red

- Green

- Blue

- White

- Black

The algorithm will select the closest category for each pixel according to the differences between both colors and save it's location vector in an array. Then, when both images are loaded, compare each image pixels against each other by computing the median distance, as mentioned before, between both of them. Simply project the first pixel's vector onto the other one and compute the Euclidean distance to get the median distance.

Sum all computed median distances

This way, it would also works if there were location vectors not totally on a vertex, even though it doesn't happen for the just described comparison algorithm.

Preliminaries

Of course, this can be really slow for immense images. Downscale the images to have a maximum width and height, 32 pixels in my case. Also, remove the transparency as it is not a color component in itself.

If both images do not have the same aspect ratio and so not the same downscaled dimensions, then consider using extremely different location vectors and so just different categories for "empty" pixels against non-empty pixels.

The Code

The equilateral encoding table :

package org.benoitdubreuil.iree.model;

import org.benoitdubreuil.iree.utils.MathUtils;

/**

* The image data for the image recognition by equilateral encoding.

* <p>

* See the author Jeff Heaton of this algorithm and his book that contains everything about it :

* <a href="https://www.heatonresearch.com/book/aifh-vol1-fundamental.html">Artificial Intelligence for Humans, Vol 1: Fundamental Algorithms</a>

* <p>

* The base source code for the equilateral encoding algorithm :

* <a href="https://github.com/jeffheaton/aifh/blob/master/vol1/java-examples/src/main/java/com/heatonresearch/aifh/normalize/Equilateral.java">Equilateral.java</a>

*/

public class EquilateralEncodingTable {

// The algorithm with only one category does not make sense

private static final int MIN_CATEGORIES_FOR_ENCODING = 2;

private int m_categoryCount;

private int m_dimensionCount;

private double m_lowestValue;

private double m_highestValue;

private double m_valueRange;

private double[][] m_categoriesCoordinates;

public EquilateralEncodingTable(int categoryCount, double lowestValue, double highestValue) {

if (categoryCount < MIN_CATEGORIES_FOR_ENCODING) {

throw new ArrayIndexOutOfBoundsException("Not enough categories for equilateral encoding");

}

this.m_categoryCount = categoryCount;

this.m_dimensionCount = computeDimensionCount(categoryCount);

this.m_lowestValue = lowestValue;

this.m_highestValue = highestValue;

this.m_valueRange = highestValue - lowestValue;

this.m_categoriesCoordinates = computeEquilateralEncodingTable();

}

/**

* Encodes a supplied index and gets the equilaterally encoded coordinates at that index.

*

* @param equilateralCoordsIndex The index at which the equilaterally encoded coordinates are.

*

* @return The equilaterally encoded coordinates.

*/

public double[] encode(int equilateralCoordsIndex) {

return m_categoriesCoordinates[equilateralCoordsIndex];

}

/**

* Decodes the supplied coordinates by finding its closest equilaterally encoded coordinates.

*

* @param coordinates The coordinates that need to be decoded. It should not be equilateral it doesn't matter, as the goal is simply to find the closest equilaterally encoded

* coordinates.

*

* @return The index at which the closest equilaterally encoded coordinates are.

*/

public int decode(double[] coordinates) {

double closestDistance = Double.POSITIVE_INFINITY;

int closestEquilateralCoordsIndex = -1;

for (int i = 0; i < m_categoriesCoordinates.length; ++i) {

double dist = computeDistance(coordinates, i);

if (dist < closestDistance) {

closestDistance = dist;

closestEquilateralCoordsIndex = i;

}

}

return closestEquilateralCoordsIndex;

}

/**

* Computes the Euclidean distance between the supplied coordinates and the equilaterally encoded coordinates at the supplied index.

*

* @param coordinates Coordinates of the first n-dimensional vector.

* @param equilateralCoordsIndex Index for the equilaterally encoded coordinates.

*

* @return The Euclidean distance between the two vectors.

*/

public double computeDistance(double[] coordinates, int equilateralCoordsIndex) {

return MathUtils.computeDistance(coordinates, m_categoriesCoordinates[equilateralCoordsIndex]);

}

/**

* Computes the equilateral encoding table, which is used as a look up for table for the data.

*

* @return The equilateral encoding table.

*/

private double[][] computeEquilateralEncodingTable() {

double negativeReciprocalOfN;

double scalingFactor;

final double[][] matrix = new double[m_categoryCount][m_dimensionCount];

matrix[0][0] = -1;

matrix[1][0] = 1.0;

if (m_categoryCount > 2) {

for (int dimension = 2; dimension < m_categoryCount; ++dimension) {

// scale the matrix so far

scalingFactor = dimension;

negativeReciprocalOfN = Math.sqrt(scalingFactor * scalingFactor - 1.0) / scalingFactor;

for (int coordinate = 0; coordinate < dimension; ++coordinate) {

for (int oldDimension = 0; oldDimension < dimension - 1; ++oldDimension) {

matrix[coordinate][oldDimension] *= negativeReciprocalOfN;

}

}

scalingFactor = -1.0 / scalingFactor;

for (int coordinate = 0; coordinate < dimension; ++coordinate) {

matrix[coordinate][dimension - 1] = scalingFactor;

}

for (int coordinate = 0; coordinate < dimension - 1; ++coordinate) {

matrix[dimension][coordinate] = 0.0;

}

matrix[dimension][dimension - 1] = 1.0;

// Scale the matrix

for (int row = 0; row < matrix.length; ++row) {

for (int col = 0; col < matrix[0].length; ++col) {

double min = -1;

double max = 1;

matrix[row][col] = ((matrix[row][col] - min) / (max - min)) * m_valueRange + m_lowestValue;

}

}

}

}

return matrix;

}

public static int computeDimensionCount(int categoryCount) {

return categoryCount - 1;

}

public int getCategoryCount() {

return m_categoryCount;

}

public int getDimensionCount() {

return m_dimensionCount;

}

public double getLowestValue() {

return m_lowestValue;

}

public double getHighestValue() {

return m_highestValue;

}

public double getValueRange() {

return m_valueRange;

}

public double[][] getCategoriesCoordinates() {

return m_categoriesCoordinates;

}

}

The mathematics utilities :

package org.benoitdubreuil.iree.utils;

public final class MathUtils {

/**

* Computes the Euclidean distance between the supplied vectors.

*

* @param lhsCoordinates Coordinates of the first n-dimensional vector.

* @param rhsCoordinates Coordinates of the second n-dimensional vector.

*

* @return The Euclidean distance between the two vectors.

*/

public static double computeDistance(double[] lhsCoordinates, double[] rhsCoordinates) {

double result = 0;

for (int i = 0; i < rhsCoordinates.length; ++i) {

result += Math.pow(lhsCoordinates[i] - rhsCoordinates[i], 2);

}

return Math.sqrt(result);

}

/**

* Normalizes the supplied vector.

*

* @param vector The vector to normalize.

*

* @return The same array, but normalized.

*/

public static double[] normalizeVector(double[] vector) {

double squaredLength = 0;

for (int dimension = 0; dimension < vector.length; ++dimension) {

squaredLength += vector[dimension] * vector[dimension];

}

if (squaredLength != 1.0 && squaredLength != 0.0) {

double reciprocalLength = 1.0 / Math.sqrt(squaredLength);

for (int dimension = 0; dimension < vector.length; ++dimension) {

vector[dimension] *= reciprocalLength;

}

}

return vector;

}

/**

* Negates the vector.

*

* @param vector The vector to negate.

*

* @return The same array, but each coordinate negated.

*/

public static double[] negateVector(double[] vector) {

for (int dimension = 0; dimension < vector.length; ++dimension) {

vector[dimension] = -vector[dimension];

}

return vector;

}

/**

* Multiplies the vector by a scalar.

*

* @param vector The vector to multiply.

* @param scalar The scalar by which to multiply the vector.

*

* @return The same array, but each coordinate multiplied by the scalar.

*/

public static double[] multVector(double[] vector, double scalar) {

for (int dimension = 0; dimension < vector.length; ++dimension) {

vector[dimension] *= scalar;

}

return vector;

}

/**

* Computes the dot product of the two supplied points.

*

* @param lhsVector The first point.

* @param rhsVector The second point.

*

* @return The dot product of the two points.

*/

public static double dotProductVector(double[] lhsVector, double[] rhsVector) {

double dotResult = 0;

for (int dimension = 0; dimension < lhsVector.length; ++dimension) {

dotResult += lhsVector[dimension] * rhsVector[dimension];

}

return dotResult;

}

private MathUtils() {

}

}

The image data that shows how to encode pixels :

package org.benoitdubreuil.iree.model;

import org.benoitdubreuil.iree.controller.ControllerIREE;

import org.benoitdubreuil.iree.gui.ImageGUIData;

import org.benoitdubreuil.iree.pattern.observer.IObserver;

import org.benoitdubreuil.iree.pattern.observer.Observable;

import java.awt.*;

import java.awt.image.BufferedImage;

public class ImageData extends Observable<ImageData> implements IObserver<ImageGUIData> {

private double[][] m_encodedPixelData;

/**

* Encodes the pixel data of the supplied image data.

*

* @param newValue The new value of the observed.

*/

@Override

public void observableChanged(ImageGUIData newValue) {

if (newValue.getDownScaled() != null) {

encodePixelData(newValue);

modelChanged(this);

}

}

private void encodePixelData(ImageGUIData imageGUIData) {

EquilateralEncodingTable encodingTable = ControllerIREE.getInstance().getEncodingTable();

EquilateralEncodingCategory[] categories = EquilateralEncodingCategory.values();

BufferedImage image = imageGUIData.getDownScaled();

int width = image.getWidth();

int height = image.getHeight();

m_encodedPixelData = new double[width * height][];

for (int x = 0; x < width; ++x) {

for (int y = 0; y < height; ++y) {

int orignalPixel = image.getRGB(x, height - 1 - y);

int r = (orignalPixel >> 16) & 0xff;

int g = (orignalPixel >> 8) & 0xff;

int b = orignalPixel & 0xff;

int minColorDistance = 255 * 3;

int minColorDistanceCategory = 0;

for (int category = 0; category < encodingTable.getCategoryCount(); ++category) {

Color categoryColor = categories[category].getColor();

int colorDistance = Math.abs(r - categoryColor.getRed()) + Math.abs(g - categoryColor.getGreen()) + Math.abs(b - categoryColor.getBlue());

if (colorDistance < minColorDistance) {

minColorDistance = colorDistance;

minColorDistanceCategory = category;

}

}

m_encodedPixelData[x * height + y] = encodingTable.encode(minColorDistanceCategory).clone();

}

}

}

public int getPixelCount() {

return m_encodedPixelData.length;

}

double[][] getEncodedPixelData() {

return m_encodedPixelData;

}

}

The actual image data recognition algorithm :

package org.benoitdubreuil.iree.model;

import org.benoitdubreuil.iree.controller.ControllerIREE;

import org.benoitdubreuil.iree.utils.MathUtils;

public final class ImageDataRecognition {

/**

* Compares two images and return how similar they are.

*

* @param lhs The first image to compare.

* @param rhs The second image to compare.

*

* @return Inclusively from 0, totally different, to 1, the same.

*/

public static double compareImages(ImageData lhs, ImageData rhs) {

double meanDistance = 0;

double[][] lhsEncodedPixelData = lhs.getEncodedPixelData();

double[][] rhsEncodedPixelData = rhs.getEncodedPixelData();

if (lhsEncodedPixelData != null && rhsEncodedPixelData != null) {

EquilateralEncodingTable table = ControllerIREE.getInstance().getEncodingTable();

int maxPixelCount = Math.max(lhs.getPixelCount(), rhs.getPixelCount());

double emptyPixelMeanValue = 1.0;

for (int pixel = 0; pixel < maxPixelCount; ++pixel) {

double[] lhsVector;

double[] rhsVector;

if (pixel < lhs.getPixelCount()) {

lhsVector = lhsEncodedPixelData[pixel];

}

else {

meanDistance += emptyPixelMeanValue;

continue;

}

if (pixel < rhs.getPixelCount()) {

rhsVector = rhsEncodedPixelData[pixel];

}

else {

meanDistance += emptyPixelMeanValue;

continue;

}

double rhsDotLhs = MathUtils.dotProductVector(rhsVector, lhsVector);

double[] rhsProjectedOntoLhs = MathUtils.multVector(lhsVector.clone(), rhsDotLhs);

meanDistance += MathUtils.computeDistance(lhsVector, rhsProjectedOntoLhs) / table.getValueRange() / maxPixelCount;

}

}

return meanDistance;

}

private ImageDataRecognition() {

}

}

huh, interesting. So basically with this algorithm it is easier to compare how similar two images are as opposed to doing a direct pixel for pixel comparison?

What other things would you like to cook-up with AI?

p.s I was promised black-jack and I can't find it. Or is that the name of one of the elk?