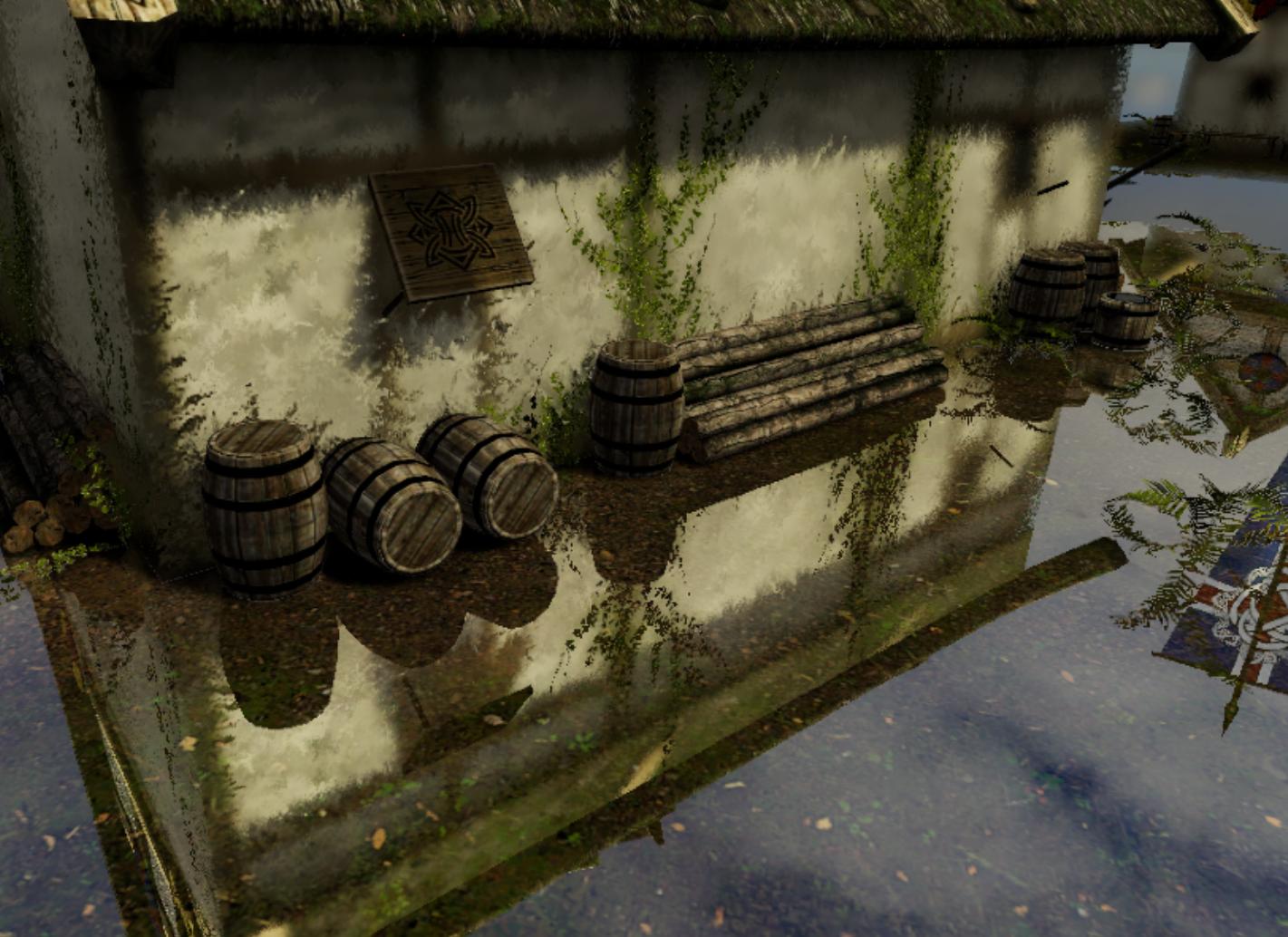

Quite a change since the last time! Added refraction material and efficient ray-traced reflection.

The RT reflection itself costs around 1.0ms at 1440p on a 4070 Ti. Total rendering time is now around 2.0ms, without using any modern upscaler.

Both features also handle the blurriness that comes with low smoothness. I've tried my best to balance quality and performance.

I'll explain the method in detail below.

*Disclaimer: This is just a personal practice of engine programming. Look elsewhere if you're after game development.

Git link: https://github.com/EasyJellySniper/Unheard-Engine

Refraction material

The refraction relies on a background image: distort the screen UV coordinate (usually with a bump map), then sample the background image with it.

It's an efficient way to achieve the goal. The key point is the “timing” of the screen capture. Assuming the engine uses deferred rendering, the options are:

- Capture after opaque objects are rendered. This is the most common way, and there are two possible places:

  - After the lighting composite, so the refraction material can early out in the pixel shader and reuse the lighting result from opaque objects.

  - After the reflection composite, which could introduce a chicken-and-egg problem if you want refraction to show up in reflections.

- Capture after translucent objects are rendered. This is likely to cause a chicken-and-egg problem if refraction objects are captured too.

- Capture after all rendering is done, including post-processing. This is usually for final screen distortion.

In the end, I chose to capture after the lighting composite. The next thing to handle is the blurriness.

I didn't use the mipmap method at all, as the box artifacts from regular mipmaps really bothered me and look ugly.

Instead, I blit the scene capture to another texture at a quarter the size of the current resolution, and blur it with a two-pass Gaussian filter.
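
For readers who haven't written one, here is a minimal CPU-side C++ sketch of a two-pass (separable) Gaussian blur on a single-channel image. It is not the engine's shader: the function names, the kernel radius, the sigma, and the clamp-to-edge addressing are all assumptions chosen for illustration.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Normalized 1D Gaussian weights for taps -radius..+radius (hypothetical helper).
std::vector<float> GaussianKernel(int radius, float sigma)
{
    std::vector<float> weights(2 * radius + 1);
    float sum = 0.0f;
    for (int i = -radius; i <= radius; ++i)
    {
        const float w = std::exp(-static_cast<float>(i * i) / (2.0f * sigma * sigma));
        weights[i + radius] = w;
        sum += w;
    }
    for (float& w : weights)
    {
        w /= sum; // normalize so a flat image keeps its brightness
    }
    return weights;
}

// One 1D pass along (dx, dy): (1, 0) is horizontal, (0, 1) is vertical.
std::vector<float> BlurPass(const std::vector<float>& src, int width, int height,
                            const std::vector<float>& kernel, int dx, int dy)
{
    const int radius = static_cast<int>(kernel.size()) / 2;
    std::vector<float> dst(src.size());
    for (int y = 0; y < height; ++y)
    {
        for (int x = 0; x < width; ++x)
        {
            float sum = 0.0f;
            for (int k = -radius; k <= radius; ++k)
            {
                // clamp-to-edge addressing, similar to a clamped sampler
                const int sx = std::min(std::max(x + k * dx, 0), width - 1);
                const int sy = std::min(std::max(y + k * dy, 0), height - 1);
                sum += src[sy * width + sx] * kernel[k + radius];
            }
            dst[y * width + x] = sum;
        }
    }
    return dst;
}

// Horizontal pass followed by vertical pass.
std::vector<float> GaussianBlur(const std::vector<float>& src, int width, int height,
                                int radius, float sigma)
{
    const std::vector<float> kernel = GaussianKernel(radius, sigma);
    const std::vector<float> horizontal = BlurPass(src, width, height, kernel, 1, 0);
    return BlurPass(horizontal, width, height, kernel, 0, 1);
}
```

The point of splitting the filter into two passes is cost: each pixel reads 2 * (2 * radius + 1) taps instead of (2 * radius + 1)^2, and running it at reduced resolution shrinks the work further.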

The blur takes 0.07ms to 0.08ms, which isn't critical. Then I lerp the color between the two captures based on smoothness^4. Plain smoothness or smoothness^2 would also work, but I want it to go blurry quickly as smoothness drops.

```hlsl
float3 SceneColor = UHTextureTable[GRefractionClearIndex].SampleLevel(LinearClamppedSampler, RefractUV, 0).rgb;
float3 BlurredSceneColor = UHTextureTable[GRefractionBlurIndex].SampleLevel(LinearClamppedSampler, RefractUV, 0).rgb;
float3 RefractionColor = lerp(BlurredSceneColor, SceneColor, SmoothnessSquare * SmoothnessSquare);
```

That's basically how refraction is done.

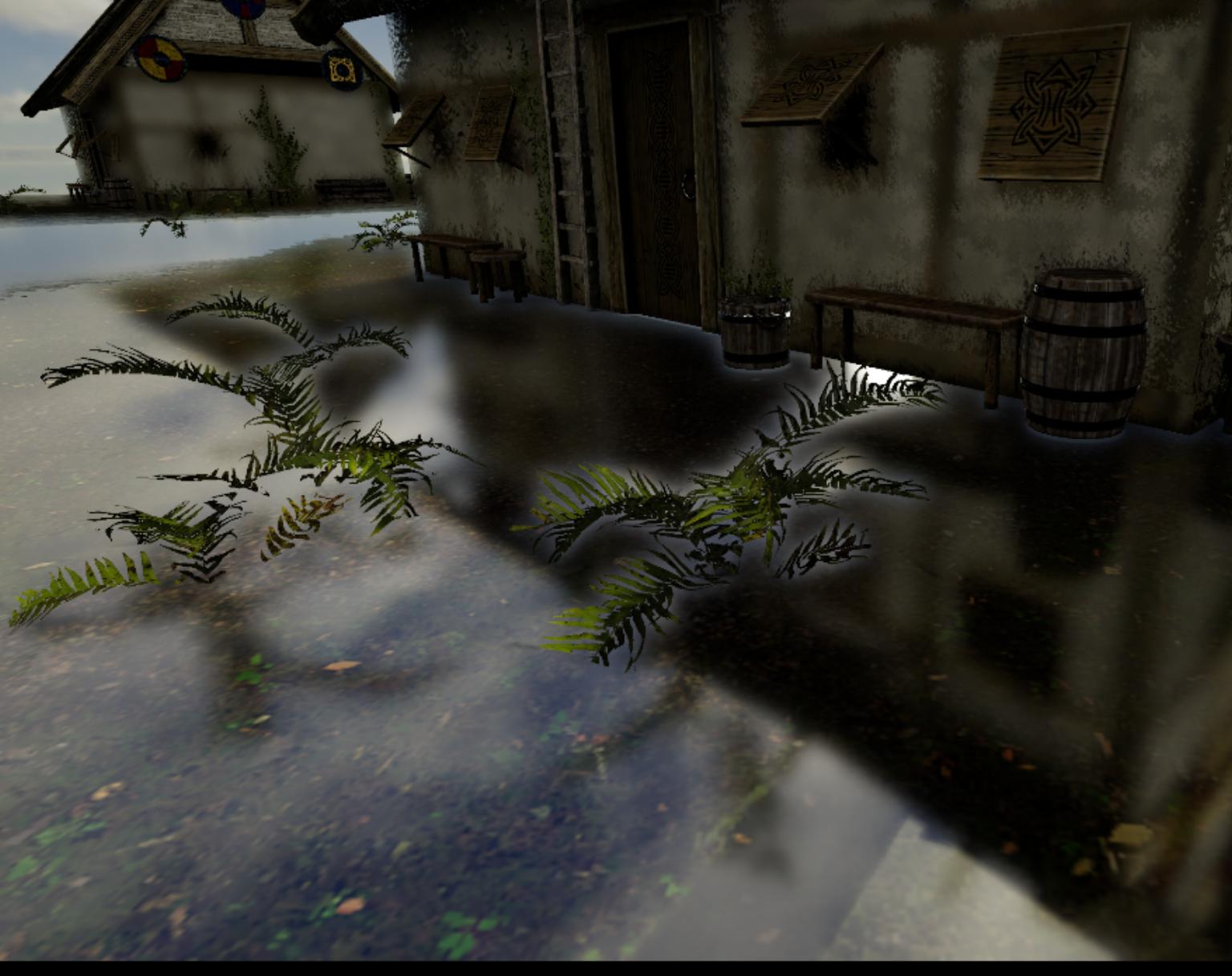

Efficient ray-traced reflection

The next exciting part is, of course, the RT reflection. My RT reflection takes care of both opaque and translucent objects.

I do the full lighting calculation in the ray generation shader, after TraceRay() is done, instead of in the hit group shader.

The hit group shader is only responsible for material collection, so this works like a "ray-traced deferred rendering".

1. The hit group

```hlsl
struct UHDefaultPayload
{
    bool IsHit()
    {
        return HitT > 0;
    }

    float HitT;
    float MipLevel;
    float HitAlpha;

    // HLSL will pad bool to 4 bytes, so using uint here anyway
    // Could pack more data to this variable in the future
    uint PayloadData;

    // for opaque
    float4 HitDiffuse;
    float3 HitNormal;
    float4 HitSpecular;
    float3 HitEmissive;
    float2 HitScreenUV;

    // for translucent
    float4 HitDiffuseTrans;
    float3 HitNormalTrans;
    float4 HitSpecularTrans;
    // .a will store the opacity, which is used differently from the HitAlpha above!
    float4 HitEmissiveTrans;
    float2 HitScreenUVTrans;
    float3 HitWorldPosTrans;
    float2 HitRefractScale;
    float HitRefraction;
    uint IsInsideScreen;
};
```

It looks scary, but believe me, the size of the payload doesn't affect performance much. The most crucial factors are still the number of calls to TraceRay() and the calculation done after hitting an object.

The hit group shader only fetches the reflection material, either in the closest hit shader for an opaque object, or in the any hit shader when the closest translucent object is found (it might be fine to accumulate them instead). For opaque materials, there is also a chance to fetch the material data from the GBuffer directly.

```hlsl
bool bCanFetchScreenInfo = bIsOpaque && bInsideScreen && (length(GBufferVertexNormal.xyz - FlippedVertexNormal) < 0.01f);

UHBRANCH
if (bCanFetchScreenInfo)
{
    // when the hit position is inside the screen and the vertex normals are very close,
    // look up material data from the opaque GBuffer instead; this is an opaque-only
    // optimization that avoids evaluating a complex material again
    Diffuse = OpaqueBuffers[0].SampleLevel(PointClampSampler, ScreenUV, 0);
    BumpNormal = DecodeNormal(OpaqueBuffers[1].SampleLevel(PointClampSampler, ScreenUV, 0).xyz);
    Specular = OpaqueBuffers[2].SampleLevel(PointClampSampler, ScreenUV, 0);

    // the emissive still needs to be calculated from material
    UHMaterialInputs MaterialInput = GetMaterialEmissive(UV0, Payload.MipLevel);
    Emissive = MaterialInput.Emissive;
}
else
{
    // calculate material data from scratch
}
```

Why calculate the material again if it's already in the GBuffer? Though this optimization only works for front-face pixels (and opaque objects, of course), it can still save a few shader instructions.

2. The ray generation

After TraceRay() succeeds, the ray generation shader can proceed to the lighting calculation.

As in my RT shadows implementation, I don't shoot the first “search ray” at all and shoot from the GBuffer instead.

The shader returns early if:

- There isn't any object at this pixel at all.

- Smoothness is below a cutoff value at this pixel.

- It's a pixel discarded in half-pixel tracing.

The worst case is still tracing a ray for every pixel, but these checks at least improve the average case significantly.
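
The early-out conditions above can be sketched as a single predicate. This is a CPU-side C++ illustration with hypothetical names, not the engine's code. In particular, the post doesn't say which pixels "half-pixel tracing" discards, so the checkerboard pattern below is purely an assumption.

```cpp
#include <cstdint>

// Returns true when a reflection ray should be traced for this pixel.
bool ShouldTraceReflection(bool hasGeometry, float smoothness, float smoothnessCutoff,
                           std::uint32_t pixelX, std::uint32_t pixelY,
                           bool halfPixelTracing)
{
    if (!hasGeometry)
    {
        return false; // nothing was rendered at this pixel
    }
    if (smoothness < smoothnessCutoff)
    {
        return false; // too rough for a visible reflection
    }
    // ASSUMPTION: half-pixel tracing skips every other pixel in a checkerboard.
    if (halfPixelTracing && ((pixelX + pixelY) & 1u) != 0u)
    {
        return false;
    }
    return true;
}
```

Ordering the cheapest tests first means most rejected pixels never reach the GBuffer-based ray setup.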

The lighting calculation then does the following:

- Blend material info if the ray hit a translucent object.

- Calculate refraction when necessary.

- Calculate direct lighting.

- Calculate skylight and emissive (from material).

In the future, I'll add more stuff here.

The refraction simply takes the screen UV converted from the hit position, which means it only supports refraction within the screen range at the moment.

The same goes for the shadow mask, since it currently comes from my ray-traced shadow texture.

There are several ways to solve this issue:

- Shoot another ray for shadow/refraction if the hit position is outside of the screen. This would definitely solve the issue, but it's not the option I'd prefer lol. My goal is to keep the tracing as efficient as possible.

- Sample from the precomputed shadow or realtime shadow maps.

- Sample from the closest cubemap probe to the camera. Since it stores data as a cube instead of a flat image, off-screen pixels are fine! As long as the cubemap is precise enough, I should be able to use it as a source of refraction background by sampling with the eye vector.

Anyway, that's all future work!

3. Apply reflection to objects

The reflection compute shader is responsible for this. For translucent objects, it's done in the translucent shader directly.

Very straightforward:

```hlsl
// use 1.0f - smooth * smooth as mip bias, so it gets blurry at low smoothness
float SpecFade = Specular.a * Specular.a;
float SpecMip = (1.0f - SpecFade) * GEnvCubeMipMapCount;
float3 IndirectSpecular = 0;

// calc n dot v for fresnel
float3 EyeVector = normalize(WorldPos - GCameraPos);
float3 R = reflect(EyeVector, BumpNormal);
float NdotV = abs(dot(BumpNormal, -EyeVector));

// CurrSceneData.a = fresnel factor
float3 Fresnel = SchlickFresnel(Specular.rgb, lerp(0, NdotV, CurrSceneData.a));

if ((GSystemRenderFeature & UH_ENV_CUBE))
{
    IndirectSpecular = EnvCube.SampleLevel(EnvSampler, R, SpecMip).rgb * GAmbientSky;
}

// reflection from dynamic source (such as ray tracing)
float4 DynamicReflection = RTReflection.SampleLevel(LinearClampped, UV, SpecMip);
IndirectSpecular = lerp(IndirectSpecular, DynamicReflection.rgb, DynamicReflection.a);
IndirectSpecular *= SpecFade * Fresnel * Occlusion;
```

In the RT reflection texture, the alpha channel stores the opacity, so this blends between the environment cube and the RT reflection based on that alpha value.

But again, how did I make it blurry at low smoothness? This time, I combine the Gaussian blur and mipmaps for the RT reflection texture.

The mipmaps are a good way to simulate the reflection “spreading” IMO; merely blurring it won't do the job. The blur pass is there to reduce the ugly box artifacts.

But there is a problem with regular mipmap generation:

The alpha values on the boundary between traced and missed pixels get blended, which introduces the issue.

So instead of the regular mipmap generation, I added another shader for this purpose. It uses a mix of alpha-weighted and uniform-weighted averages for different mips.

```hlsl
UHBRANCH
if (Constants.bUseAlphaWeight)
{
    OutputColor += Color * Color.a;
    Weight += Color.a;
}
else
{
    OutputColor += Color;
    Weight += 1;
}
```

For the first few mips (1 to 3, for example), it uses the alpha-weighted average. For the remaining mips, it uses the uniform average.

After the correction, it does much better:

The reason to avoid the alpha weight on the remaining, lower-resolution mips is also simple: to avoid blocky mipmaps. The alpha-weighted average could introduce an image dilation side effect.
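
To see the difference between the two averages, here is a CPU-side C++ sketch that reduces one 2x2 block of texels to a single texel of the next mip. The accumulation mirrors the shader snippet; the 2x2 footprint, the final division by the weight, and the zero-weight guard are assumptions, since the post doesn't show that part.

```cpp
#include <array>

struct Float4 { float r, g, b, a; };

// Average four source texels into one destination texel.
Float4 DownsampleReflection(const std::array<Float4, 4>& texels, bool useAlphaWeight)
{
    Float4 sum{ 0.0f, 0.0f, 0.0f, 0.0f };
    float weight = 0.0f;
    for (const Float4& c : texels)
    {
        // Alpha weight: missed pixels (alpha 0) contribute nothing.
        // Uniform weight: every texel counts equally.
        const float w = useAlphaWeight ? c.a : 1.0f;
        sum.r += c.r * w;
        sum.g += c.g * w;
        sum.b += c.b * w;
        sum.a += c.a * w;
        weight += w;
    }
    if (weight <= 0.0f)
    {
        return { 0.0f, 0.0f, 0.0f, 0.0f }; // ASSUMPTION: all-miss blocks stay empty
    }
    return { sum.r / weight, sum.g / weight, sum.b / weight, sum.a / weight };
}
```

With one red hit (1, 0, 0, 1) and three misses, the uniform average gives (0.25, 0, 0, 0.25): the hit is darkened and faded by its empty neighbors. The alpha-weighted average gives (1, 0, 0, 1): the color survives, but the hit has grown to cover the whole block, which is the dilation side effect.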

Summary

That's it! There's still much work to do, but it's one more step toward perfection 🙂.

I'd like to optimize the rendering as much as I can before touching those upscalers. They should be used as a last resort instead of an excuse for being lazy.

Have you tried using ray tracing for refraction too rather than the traditional approach you described? With that you can use accurate refraction rays based on index of refraction, which can fix various artifacts surrounding refraction, especially at the edges of the screen.