Ok, makes sense now.

RmbRT said:

> The thing about just using a LOD image is that it only represents magnitudes at the center of each sample. But I want the samples themselves to be irregularly distributed, so that I have peaks and valleys that are not on a uniform grid.

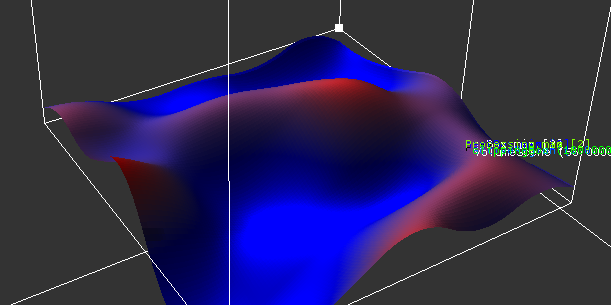

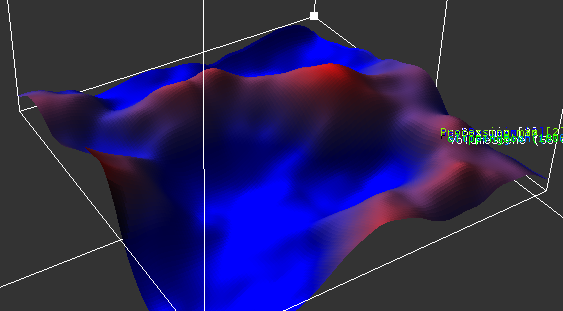

If you look at my images showing the LOD pyramid of the eroded heightmap, do you think the terrain is somehow snapped or aligned to the global grid? And does your impression change as you go from high to low detail?

Or, for a better example: if you look at a digital photo of the real world, do you notice that its pixels form a grid? And if you reduce it to just 8 x 8 pixels, does it feel like the grid constrains the content of the image in any way?

If your answer to any of those questions is no (although technically it's a yes), then you are underestimating the option of generating content directly on a grid. It works well if done right, and it's by far the easiest way to do it.

Say, for example, we want to draw a point into the grid, but the point sits at the subpixel position (0.3, 0.8). How do we do this? We can do the inverse of a simple bilinear filter: distribute the color of the point over the 2x2 block of pixels around it, giving each pixel the same weight a bilinear lookup at that position would use.
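A minimal sketch of that "inverse bilinear" splat in Python, assuming a row-major grid stored as a list of rows and pixel centers at integer coordinates. The function name `splat_point` is hypothetical, for illustration only:

```python
import math

def splat_point(grid, x, y, value):
    """Add `value` at subpixel position (x, y) to a row-major grid (list of rows).

    This is the adjoint of bilinear sampling: each of the 2x2 pixels around
    (x, y) receives the weight a bilinear lookup at (x, y) would give it.
    Assumes pixel centers sit at integer coordinates.
    """
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    taps = [
        (x0,     y0,     (1 - fx) * (1 - fy)),
        (x0 + 1, y0,     fx * (1 - fy)),
        (x0,     y0 + 1, (1 - fx) * fy),
        (x0 + 1, y0 + 1, fx * fy),
    ]
    h, w = len(grid), len(grid[0])
    for px, py, weight in taps:
        if 0 <= px < w and 0 <= py < h:  # drop taps that fall off the grid
            grid[py][px] += value * weight
    return grid


grid = [[0.0] * 4 for _ in range(4)]
splat_point(grid, 0.3, 0.8, 1.0)
for row in grid[:2]:
    print([round(v, 2) for v in row[:2]])
```

For the point at (0.3, 0.8), the four pixels receive 0.14, 0.06, 0.56 and 0.24 of its value. These weights sum to 1, so the point's total contribution is preserved no matter where it lands between pixel centers.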

What I mean is: whether the domain is regular or not does not prevent you from expressing any content. An image can convey the impression of a circle even though its domain is a regular grid. More importantly, the resolution does not change this. It limits the amount of detail you can represent, but you can still depict and create the same content.

I think that this approach will have different results compared to normal multi-layer noise with evenly spaced sampling & power frequencies.

But I think you could actually use multi-layer noise and still get different results from other people who also use multi-layer noise.

What I mean is, you seem to ignore standard, established methods on purpose. But they work. You should use them instead of ignoring them, and build on top of the work your fathers have already done.

If you don't, you'll just come up with the same things anyway, after wasting time reinventing wheels.

When I was in my mid-twenties I still felt mentally immortal, as if I had all the time in the world to make my visions a reality.

But I tell you, that's totally wrong. This is a race against the clock. Ignoring prior work is a luxury you can only afford if you're already 100% sure you know a better way.

Ok, you have been warned. Now go explore the unknown, knowing you're not the first who went there… : )

But now, coming back to this:

> But I want the samples themselves to be irregularly distributed

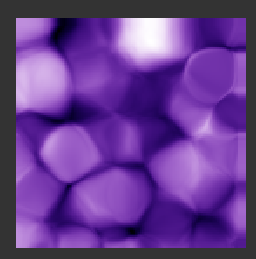

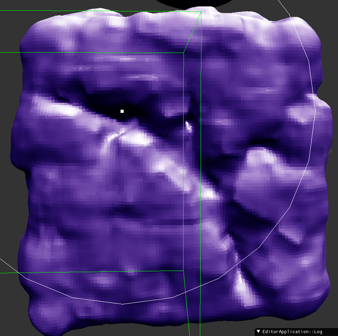

Have you considered particles? I think that besides heightmaps and meshes, that's the third major option, and personally it's the one I use the most at the moment. I give each particle some shape, e.g. a box, a sphere or a procedural polyhedron, but it can be a whole mesh as well. Then I voxelize those shapes into a density or SDF volume and generate an isosurface from that. Some examples:
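A minimal sketch of the voxelization step in plain Python, assuming sphere and axis-aligned box particles combined by a simple union (the minimum of their signed distances). The helper names `sphere_sdf`, `box_sdf` and `voxelize` are hypothetical; the box uses the standard exact box distance. This is not the poster's actual pipeline. The isosurface would then be extracted from `vol` at level 0, e.g. with a marching cubes routine such as scikit-image's `skimage.measure.marching_cubes`, which is not shown here:

```python
import math

def sphere_sdf(center, radius):
    """Signed distance to a sphere: negative inside, positive outside."""
    return lambda p: math.dist(p, center) - radius

def box_sdf(center, half):
    """Exact signed distance to an axis-aligned box with half-extents `half`."""
    def sdf(p):
        q = [abs(p[i] - center[i]) - half[i] for i in range(3)]
        outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
        inside = min(max(q), 0.0)
        return outside + inside
    return sdf

def voxelize(particles, n, extent):
    """Sample the union (min) of all particle SDFs on an n^3 grid over [0, extent]^3.

    Returns vol[k][j][i] for the sample at (i, j, k) * step.
    """
    step = extent / (n - 1)
    vol = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for k in range(n):
        for j in range(n):
            for i in range(n):
                p = (i * step, j * step, k * step)
                vol[k][j][i] = min(sdf(p) for sdf in particles)
    return vol


particles = [sphere_sdf((0.5, 0.5, 0.5), 0.4), box_sdf((1.5, 1.5, 1.5), (0.3, 0.3, 0.3))]
vol = voxelize(particles, n=5, extent=2.0)
inside = sum(d < 0 for plane in vol for row in plane for d in row)
print(inside)  # 2
```

Taking the minimum is the simplest union. A smooth minimum is a common alternative if neighboring particles should blend instead of meeting at sharp creases.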

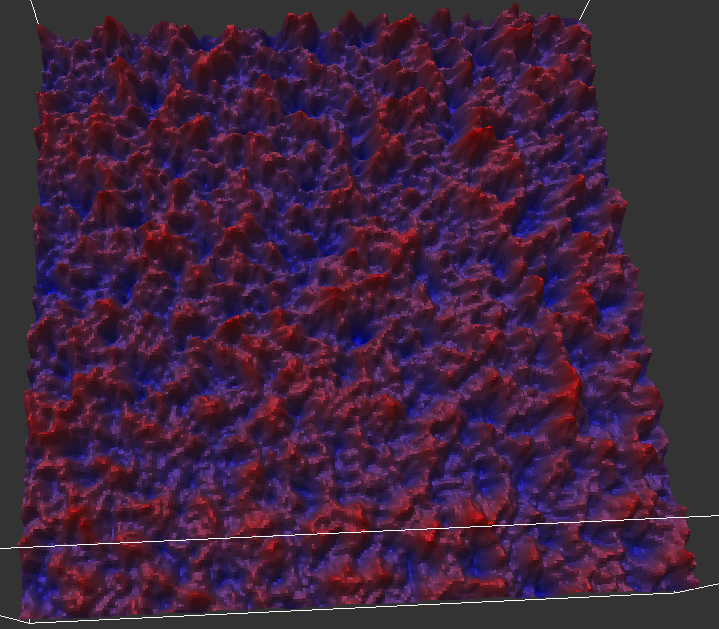

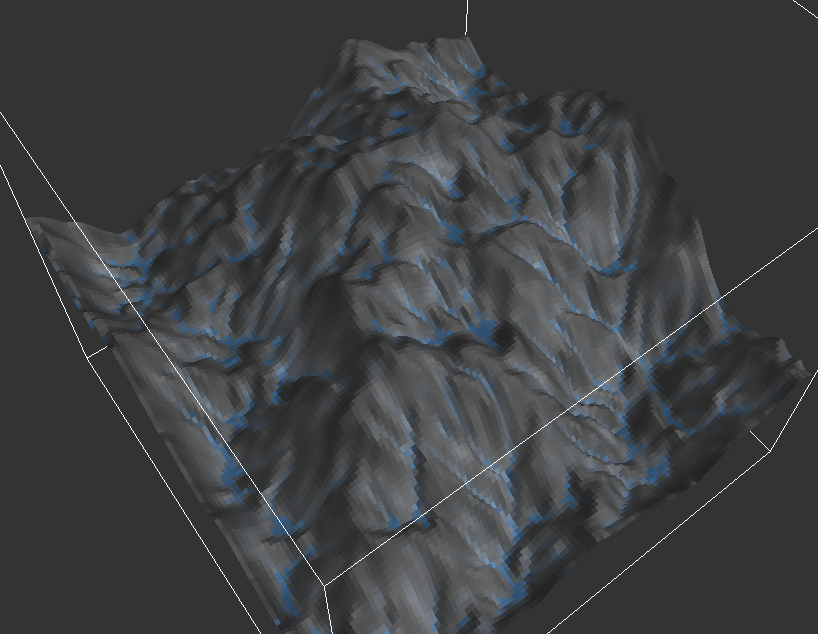

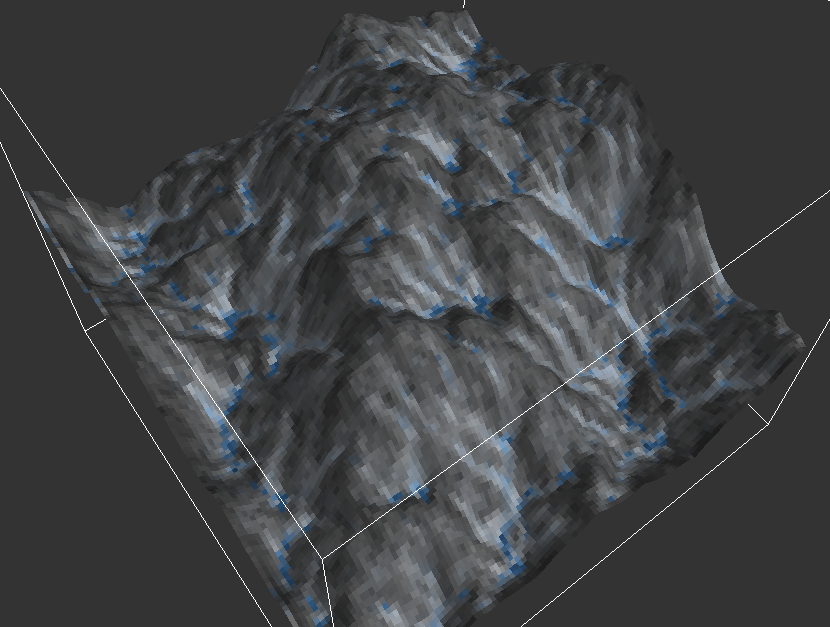

I tried to make a rock out of box-shaped particles. Some iterative shrinking, plus constraints to adjacent particles, produced the cracks.

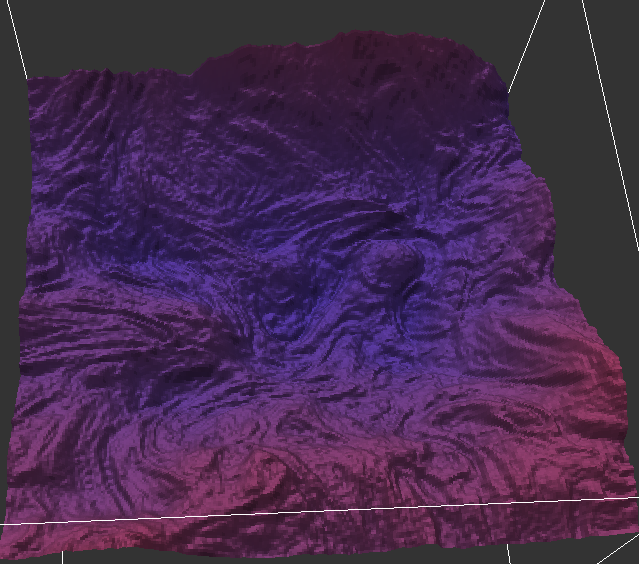

Here is a section from the fluid particles seen in the background.

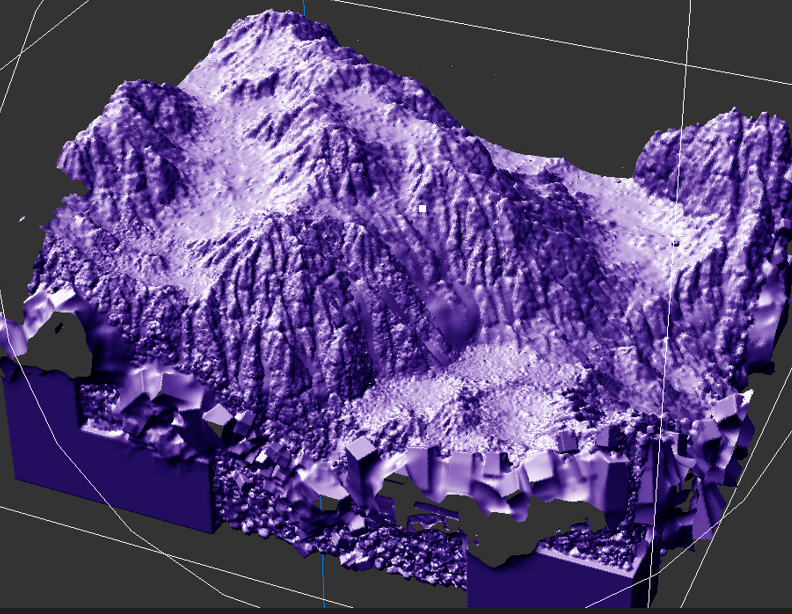

Some terrain again. You can see that the interior is modeled with larger particles to save resources, and it's all boxes, including the details on the surface.

You surely know IQ's demos showing Disney-like scenes at high quality, modeled from SDF primitives. That's basically the same stuff.

Rasterizing such primitives to a 2D heightfield is surely an option.
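A minimal sketch of that rasterization in plain Python, assuming spheres and axis-aligned boxes and a heightfield that keeps the highest top surface above each integer (x, y) sample. The function name and tuple layouts are hypothetical, for illustration only:

```python
import math

def rasterize_heightfield(spheres, boxes, width, height, base=0.0):
    """Rasterize primitives into a 2D heightfield by keeping the highest top surface.

    spheres: (cx, cy, cz, r); boxes: axis-aligned (cx, cy, cz, hx, hy, hz).
    Samples are taken at integer (x, y) grid positions; hf[y][x] is the height.
    """
    hf = [[base] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            top = base
            for cx, cy, cz, r in spheres:
                d2 = r * r - (x - cx) ** 2 - (y - cy) ** 2
                if d2 >= 0:  # sample lies inside the sphere's footprint
                    top = max(top, cz + math.sqrt(d2))
            for cx, cy, cz, hx, hy, hz in boxes:
                if abs(x - cx) <= hx and abs(y - cy) <= hy:
                    top = max(top, cz + hz)
            hf[y][x] = top
    return hf


hf = rasterize_heightfield([(2, 2, 0.0, 2.0)], [(6, 2, 1.0, 1, 1, 0.5)], width=8, height=5)
print(hf[2])
```

Because each cell stores only one height, overhangs, caves and the larger interior particles mentioned above are lost. That trade-off is what you accept in exchange for the simplicity of a 2D grid.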