Josh Klint said:

but how to improve this?

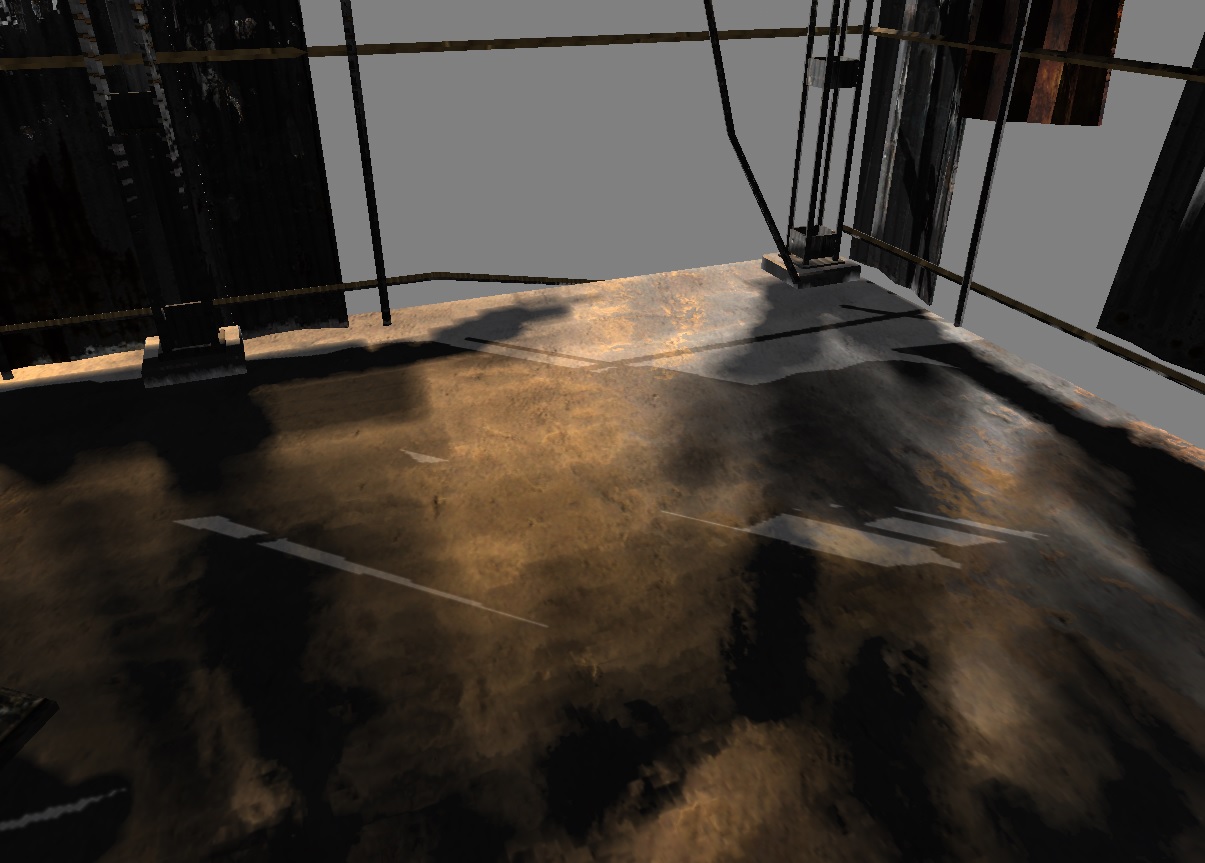

First, I'd try to get rid of the banding.

The standard solution is Monte Carlo integration over multiple samples. You can spread those samples over time, so you still trace just one sample per pixel per frame. This turns the banding into noise.
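A minimal sketch of the idea for a single pixel, assuming a static camera. `radiance(u)` is a hypothetical stand-in for whatever one ray at sample position `u` returns (a ray direction, a ray-march offset, etc.). With a fixed `u` the pixel gets the same biased value every frame, which is what shows up as banding. With a fresh random `u` per frame each frame is noisy, but the running average converges to the true integral:

```python
import math
import random

def radiance(u):
    # Hypothetical stand-in for what one ray at sample position u in [0, 1) returns.
    # Its exact integral over [0, 1) is 0.5 + 1/pi.
    return 0.5 + 0.5 * math.sin(math.pi * u)

EXACT = 0.5 + 1.0 / math.pi

def accumulate_pixel(num_frames, jitter=True, seed=1):
    """Running Monte Carlo average using ONE sample per frame."""
    rng = random.Random(seed)
    mean = 0.0
    for n in range(1, num_frames + 1):
        # New random sample position each frame, or the same fixed one every frame.
        u = rng.random() if jitter else 0.0
        # Incremental average over the n frames seen so far.
        mean += (radiance(u) - mean) / n
    return mean
```

In practice people often use a per-frame low-discrepancy sequence or blue noise instead of plain `random`, which converges faster for the same sample count.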

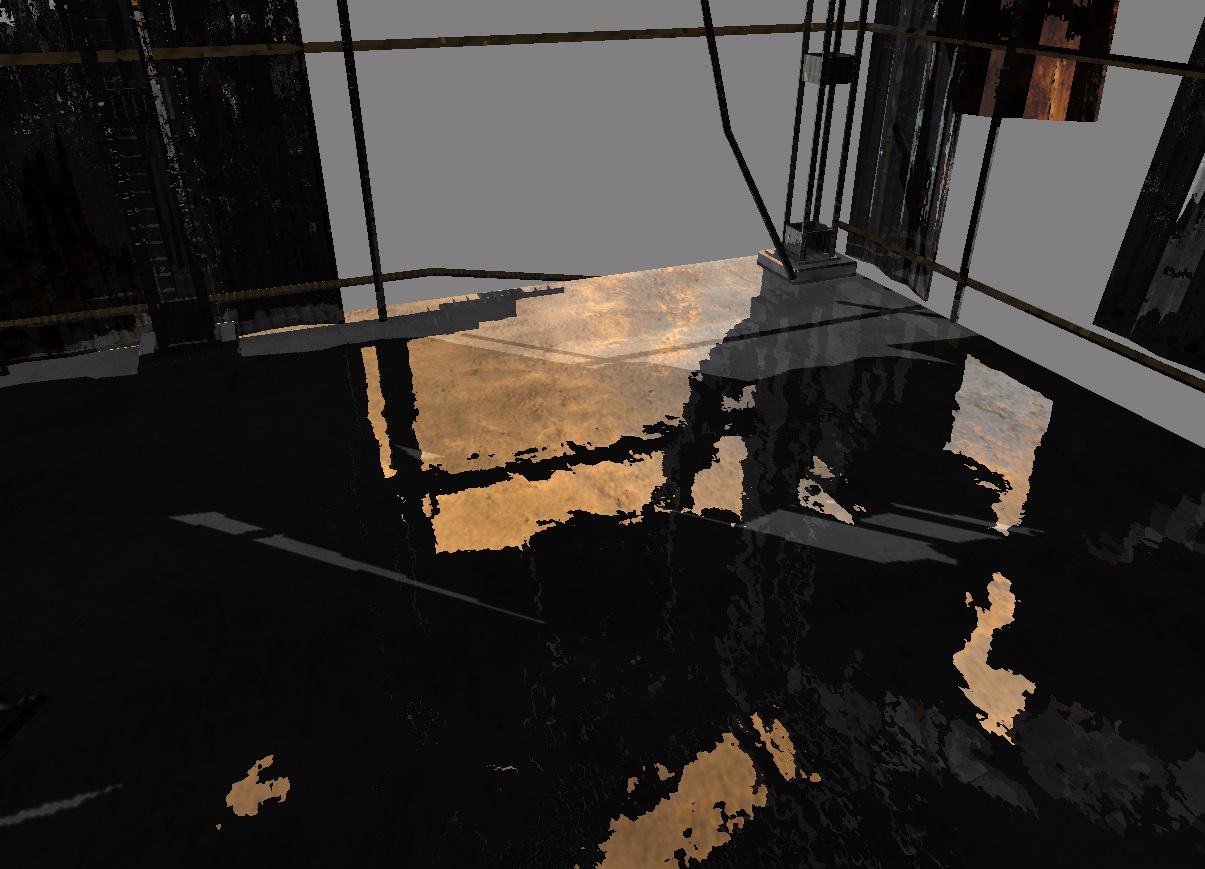

To smooth out the noise, you can accumulate temporally in screen space: reproject the previous frame using motion vectors and blend it with the current one. This might be nearly free if you already use TAA.
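A rough sketch of the reprojection and blend step, assuming single-channel buffers stored as 2D lists and integer motion vectors that point from the current pixel to where that surface was last frame (a hypothetical convention; real implementations use sub-pixel motion with bilinear history fetches, and also reject history on depth/normal mismatches):

```python
def reproject_and_accumulate(current, history, motion, alpha=0.1):
    """Blend the current noisy frame into the reprojected history buffer.

    current, history: H x W lists of floats.
    motion: H x W list of (dx, dy) integer offsets into the previous frame.
    alpha: weight of the new sample; smaller means smoother but more ghosting.
    """
    h, w = len(current), len(current[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = motion[y][x]
            px, py = x + dx, y + dy
            if 0 <= px < w and 0 <= py < h:
                # Exponential moving average: keep (1 - alpha) of the history.
                out[y][x] = (1.0 - alpha) * history[py][px] + alpha * current[y][x]
            else:
                # Previous position is off screen: no history, restart from the current sample.
                out[y][x] = current[y][x]
    return out
```

The output becomes next frame's `history`, so each pixel effectively averages many frames of one-sample-per-pixel input.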

That's enough for sharp reflections. For glossy reflections / GI you need a more advanced denoising filter, e.g. the spatial + temporal methods used in Quake II RTX. There are papers and source code for it, but other approaches have claimed better performance as well.
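To give an idea of the spatial part, here's a heavily simplified sketch of one à-trous iteration with a depth-based edge-stopping weight. This is not the Quake II RTX filter, which also uses normals, luminance variance and temporal history; the 3-tap kernel and `sigma_z` are assumptions chosen to keep it short:

```python
import math

def atrous_iteration(color, depth, step, sigma_z=0.1):
    """One edge-aware a-trous pass: 3x3 taps spaced `step` pixels apart."""
    h, w = len(color), len(color[0])
    kernel = (0.25, 0.5, 0.25)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, weight_sum = 0.0, 0.0
            for j in (-1, 0, 1):
                for i in (-1, 0, 1):
                    sx, sy = x + i * step, y + j * step
                    if not (0 <= sx < w and 0 <= sy < h):
                        continue
                    k = kernel[i + 1] * kernel[j + 1]
                    # Edge stopping: neighbours at a very different depth get almost no weight.
                    wz = math.exp(-abs(depth[sy][sx] - depth[y][x]) / sigma_z)
                    total += k * wz * color[sy][sx]
                    weight_sum += k * wz
            # The centre tap always contributes, so weight_sum > 0.
            out[y][x] = total / weight_sum
    return out
```

Running it several times with `step` = 1, 2, 4, ... grows the filter footprint cheaply while the depth weight keeps it from blurring across geometric edges.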

For a solid foundation in MC integration of BRDF materials, look into path tracing. I once wrote a path tracer to learn about PBR. It's not hard: I managed a simple one for perfectly diffuse materials without looking up any papers or tutorials.
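The core of the diffuse case fits in a few lines. A sketch, assuming a local shading frame with the normal along +Z and a hypothetical constant white sky as the only light (a "white furnace" style test, where the correct answer is simply the albedo). With cosine-weighted sampling the BRDF, cosine and pdf cancel, so every sample returns exactly `albedo`; uniform hemisphere sampling gives the same mean with more noise:

```python
import math
import random

def sample_cosine_hemisphere(u1, u2):
    """Cosine-weighted direction around +Z; pdf = cos(theta) / pi."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))

def sample_uniform_hemisphere(u1, u2):
    """Uniform direction around +Z; pdf = 1 / (2 pi)."""
    z = u1
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), z)

def sky_radiance(direction):
    # Hypothetical constant white sky: every direction sends radiance 1.
    return 1.0

def estimate_diffuse(albedo, n, cosine=True, seed=0):
    """MC estimate of radiance reflected by a Lambertian surface under the sky."""
    rng = random.Random(seed)
    brdf = albedo / math.pi  # Lambertian BRDF
    total = 0.0
    for _ in range(n):
        if cosine:
            d = sample_cosine_hemisphere(rng.random(), rng.random())
            pdf = d[2] / math.pi
        else:
            d = sample_uniform_hemisphere(rng.random(), rng.random())
            pdf = 1.0 / (2.0 * math.pi)
        cos_theta = d[2]
        if pdf > 0.0:
            total += brdf * sky_radiance(d) * cos_theta / pdf
    return total / n
```

In a full path tracer this is one bounce: with cosine sampling the throughput update per diffuse hit is just `throughput *= albedo`, and you keep tracing along the sampled direction.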

A standard PBR material is more difficult, though, because the probability density of rays needed to model things like Fresnel gets complicated. My results looked right, but I was never sure I was doing it correctly. The related papers (mostly building on Veach's work) are a bit hard for someone like me who lacks a math background. But it's interesting and fun.
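For the specular part of such a material, a common approach is to importance-sample the GGX normal distribution and reflect the view vector about the sampled half vector. A sketch, assuming a local frame with the normal along +Z, Schlick's Fresnel, separable Smith masking-shadowing, and `alpha` as the GGX width parameter. It leaves out the part that really gets complicated: choosing between the diffuse and specular lobes (often with a Fresnel-based probability) and combining their pdfs. Visible-normal (VNDF) sampling is a lower-noise alternative to the plain D-sampling shown here:

```python
import math
import random

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(v, h):
    # Mirror v about h (both pointing away from the surface).
    d = 2.0 * dot(v, h)
    return (d * h[0] - v[0], d * h[1] - v[1], d * h[2] - v[2])

def ggx_sample_half_vector(alpha, u1, u2):
    """Microfacet normal around +Z with pdf(h) = D(h) * cos(theta_h)."""
    cos_t = math.sqrt((1.0 - u1) / (1.0 + (alpha * alpha - 1.0) * u1))
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * u2
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)

def smith_g1(alpha, n_dot_x):
    a2 = alpha * alpha
    return 2.0 * n_dot_x / (n_dot_x + math.sqrt(a2 + (1.0 - a2) * n_dot_x * n_dot_x))

def schlick_fresnel(f0, v_dot_h):
    return f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

def sample_ggx_specular(v, alpha, f0, u1, u2):
    """Returns (l, weight) with weight = f(v, l) * cos(theta_l) / pdf(l)."""
    h = ggx_sample_half_vector(alpha, u1, u2)
    l = reflect(v, h)
    n_dot_v, n_dot_l, n_dot_h = v[2], l[2], h[2]
    v_dot_h = dot(v, h)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0 or v_dot_h <= 0.0:
        return l, 0.0  # reflected below the horizon: no contribution
    g = smith_g1(alpha, n_dot_v) * smith_g1(alpha, n_dot_l)
    f = schlick_fresnel(f0, v_dot_h)
    # D and 4 (n.l)(n.v) cancel against pdf(l) = D * n.h / (4 v.h).
    return l, f * g * v_dot_h / (n_dot_v * n_dot_h)
```

Averaging the weights for a white `f0` is a quick sanity check: the mean should stay at or below 1, and the missing part is the known energy loss of single-scattering microfacet models at higher roughness.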

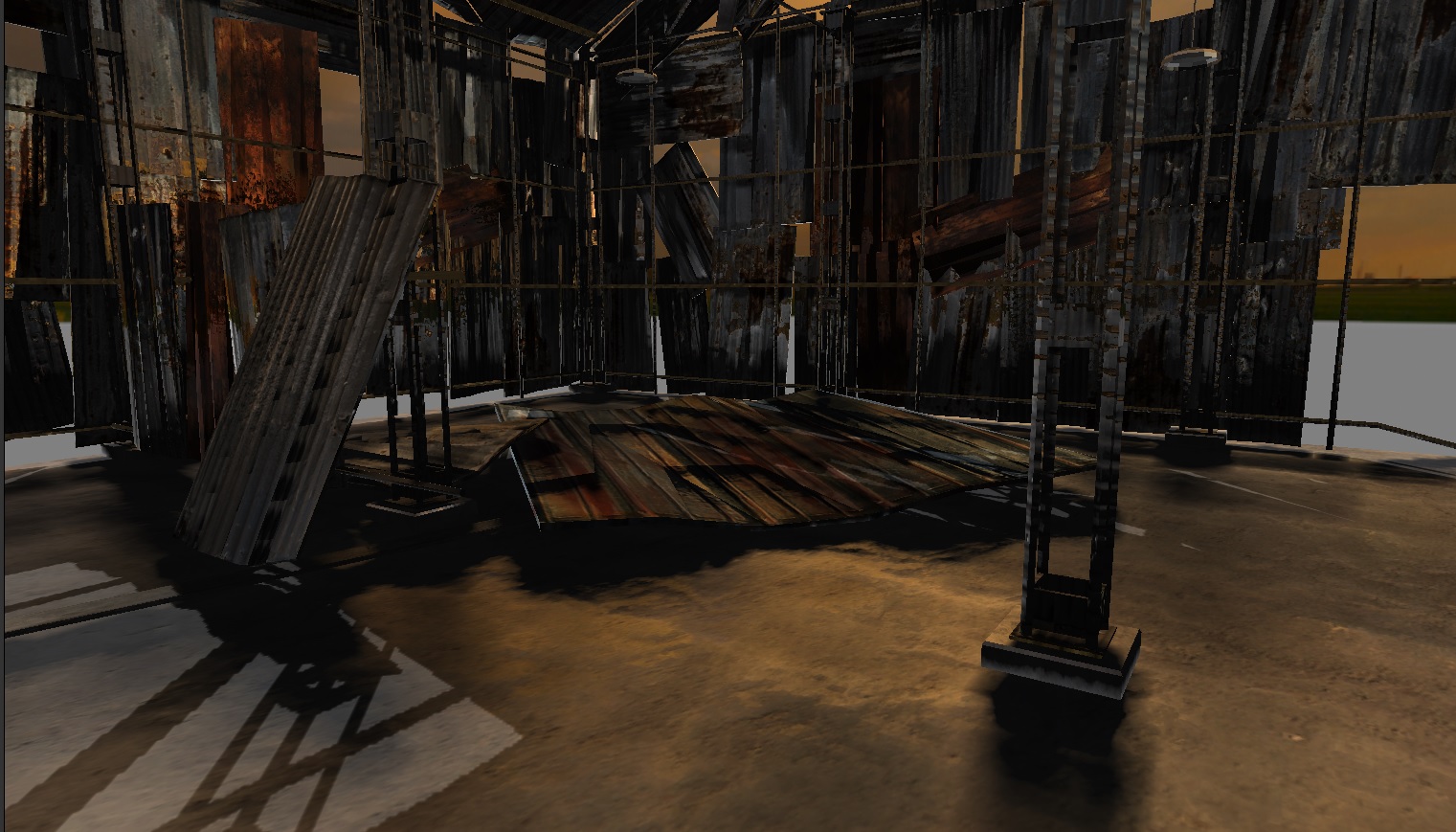

You'll surely need some tricks, since correctly modeling a perfectly smooth material (e.g. a mirror) would still give you the banding artifacts. So you'd cheat by enforcing a minimum roughness value, which makes the mirror blurrier than it should be. Aside from that, though, you can do things correctly and avoid the typical real-time hacks of the past.
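The cheat is tiny in code. A sketch, assuming the common `alpha = roughness * roughness` mapping and a hypothetical floor `MIN_ALPHA` that you'd tune by eye. With `alpha = 0` every GGX sample lands on the exact mirror direction, so there's no per-frame variation left to turn banding into noise; the floor keeps a narrow lobe:

```python
import math

MIN_ALPHA = 0.02  # hypothetical floor; tune per project

def clamp_alpha(perceptual_roughness, min_alpha=MIN_ALPHA):
    """Artist roughness -> GGX alpha, clamped so a 'perfect mirror' keeps a small lobe."""
    alpha = perceptual_roughness * perceptual_roughness
    return max(alpha, min_alpha)

def ggx_half_angle_deg(alpha, u):
    """Angle from the normal (degrees) of the GGX half vector for sample u in [0, 1)."""
    # From tan^2(theta_h) = alpha^2 * u / (1 - u).
    return math.degrees(math.atan(alpha * math.sqrt(u / (1.0 - u))))
```

For the median sample (`u = 0.5`) the clamped mirror's half vector tilts only about 1.1 degrees, so it stays visually sharp while still giving the temporal accumulation something to average.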

Of course, diffuse / rough materials cause incoherent rays, so performance will be worse than for mirrors. That brings us to the idea of tracing at a lower resolution and upscaling the results.
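The simplest version of that is a plain bilinear upscale of the low-resolution trace. A sketch, assuming an integer scale factor, pixel-center alignment and clamped borders. Plain bilinear blurs across object edges, so in practice a depth- or normal-aware (joint bilateral) upsample is the usual next step:

```python
def upscale_bilinear(src, factor):
    """Upscale an H x W list-of-lists buffer by an integer factor with bilinear filtering."""
    h, w = len(src), len(src[0])
    out_h, out_w = h * factor, w * factor
    out = [[0.0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        # Map the output pixel centre to a source coordinate, clamped to the valid range.
        sy = min(max((y + 0.5) / factor - 0.5, 0.0), h - 1.0)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        ty = sy - y0
        for x in range(out_w):
            sx = min(max((x + 0.5) / factor - 0.5, 0.0), w - 1.0)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            tx = sx - x0
            top = src[y0][x0] * (1.0 - tx) + src[y0][x1] * tx
            bottom = src[y1][x0] * (1.0 - tx) + src[y1][x1] * tx
            out[y][x] = top * (1.0 - ty) + bottom * ty
    return out
```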

I can't point to many resources, but I still have this tab open with the intent to steal @mjp's approximate functions, which don't need texture LUTs. It's also an example of a path tracer: https://github.com/TheRealMJP/DXRPathTracer/blob/master/SampleFramework12/v1.02/Shaders/BRDF.hlsl#L209
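As an example of the kind of LUT-free approximation meant here (not necessarily what's at the linked line), this is the widely used analytic fit of the split-sum environment BRDF that Brian Karis published for UE4's mobile renderer, based on work by Dimitar Lazarov. It replaces the 2D (roughness, N·V) lookup texture with a few multiply-adds. Ported to Python here for a single color channel:

```python
def env_brdf_approx(specular_color, roughness, n_dot_v):
    """Analytic fit of the split-sum environment BRDF: returns F0 * scale + bias."""
    c0 = (-1.0, -0.0275, -0.572, 0.022)
    c1 = (1.0, 0.0425, 1.04, -0.04)
    r = [roughness * a + b for a, b in zip(c0, c1)]
    a004 = min(r[0] * r[0], 2.0 ** (-9.28 * n_dot_v)) * r[0] + r[1]
    scale = -1.04 * a004 + r[2]
    bias = 1.04 * a004 + r[3]
    return specular_color * scale + bias
```

It's meant for prefiltered environment lighting in a rasterizer; in a path tracer you'd evaluate the BRDF per sample instead, but the same idea of swapping a LUT for a fitted curve applies.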

This also seems like a very useful reference: https://boksajak.github.io/blog/BRDF

There are also one or two free ‘Ray Tracing Gems’ e-books from NVIDIA which look good.