@alexpanter I considered that, but what about rendering the model every frame? It seems like the model would get drawn over every frame by the terrain.

How was Empire Earth able to render hundreds of 3D models on the screen when it had no access to shaders?

@Geri Are there algorithms for this LOD technique, or do I have to lower-poly the models myself?

yaboiryan said:

@Geri Are there algorithms for this LOD technique, or do I have to lower-poly the models myself?

The algorithm is called Mesh Optimize. I don't know whether there is a trustworthy free source snippet of it online, so you will likely have to come up with your own implementation. I am sure it can be done in about 50-100 lines of code.

Probably the most widely used mesh reduction methods build on quadric error metrics: http://www.cs.cmu.edu/~garland/Papers/quadrics.pdf
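To give an idea of what the paper's core quantity looks like, here is a minimal sketch, not a full simplifier. It assumes the usual formulation: every triangle plane `ax + by + cz + d = 0` (with unit normal) contributes a 4x4 matrix `p * p^T`, and the error of a vertex position is `v^T Q v`, i.e. the sum of squared distances to the accumulated planes. The names `Quadric`, `planeQuadric` and `Vec3` are made up for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Symmetric 4x4 quadric, stored as a full row-major matrix for clarity.
struct Quadric {
    std::array<double, 16> m{};  // zero-initialised

    // Quadrics of several planes are simply summed.
    void add(const Quadric& o) {
        for (int i = 0; i < 16; ++i) m[i] += o.m[i];
    }

    // Error of placing a vertex at v: [v 1]^T * Q * [v 1].
    double error(const Vec3& v) const {
        const double h[4] = {v.x, v.y, v.z, 1.0};
        double e = 0.0;
        for (int r = 0; r < 4; ++r)
            for (int c = 0; c < 4; ++c)
                e += h[r] * m[r * 4 + c] * h[c];
        return e;
    }
};

// Quadric of the plane through triangle (a, b, c).
Quadric planeQuadric(const Vec3& a, const Vec3& b, const Vec3& c) {
    const Vec3 u{b.x - a.x, b.y - a.y, b.z - a.z};
    const Vec3 w{c.x - a.x, c.y - a.y, c.z - a.z};
    // Normal = u x w, then normalised.
    Vec3 n{u.y * w.z - u.z * w.y, u.z * w.x - u.x * w.z, u.x * w.y - u.y * w.x};
    const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    assert(len > 0.0);  // degenerate triangles have no plane
    n = {n.x / len, n.y / len, n.z / len};
    const double d = -(n.x * a.x + n.y * a.y + n.z * a.z);
    const double p[4] = {n.x, n.y, n.z, d};

    Quadric q;
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            q.m[r * 4 + c] = p[r] * p[c];
    return q;
}
```

A simplifier would sum the quadrics of all triangles around each vertex and then prefer collapses whose target position has the lowest error. That ordering, plus the mesh editing itself, is where most of the work is.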

UE5 Nanite preprocessing uses this too afaik, for example.

But I don't think you want to do this automatically, and for an RTS, LOD should not be needed at all.

LOD means ‘Levels (plural) Of Detail’, but if your zoom factor does not change wildly, and your view is top down, you likely want only one level, to keep things simple.

This is enough because your characters will always have about the same size on screen.

So if your models have too many triangles, you get the best quality if you do the reduction manually, possibly by remodeling them. This is especially true if your models are already low poly.

Modeling tools also have built-in options to reduce meshes, which you want to try first, of course. There really should be no need for you to develop such a reduction algorithm yourself.

Geri said:

I am sure it can be done in about 50-100 lines of code.

No way. You need a mesh data structure with adjacency information. You need editing functions to collapse edges or vertices. You need to deal with UV islands and boundary issues. That's no easy stuff and takes serious time and effort. At least weeks just for that.
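To make the scope a bit more concrete, here is a sketch of only the smallest building block: collapsing one edge in a plain indexed triangle list and dropping the triangles that become degenerate. It deliberately ignores everything else listed above (adjacency queries, UV islands, boundaries, choosing which edge to collapse). `Triangle` and `collapseEdge` are made-up names for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Triangle = std::array<unsigned, 3>;  // three vertex indices

// Collapse the edge (from -> to): every reference to `from` becomes `to`,
// and triangles that end up with a repeated index are removed.
// Returns the number of triangles removed.
std::size_t collapseEdge(std::vector<Triangle>& tris, unsigned from, unsigned to) {
    assert(from != to);
    std::size_t kept = 0;
    for (std::size_t i = 0; i < tris.size(); ++i) {
        Triangle t = tris[i];
        for (unsigned& index : t)
            if (index == from) index = to;
        const bool degenerate = t[0] == t[1] || t[1] == t[2] || t[0] == t[2];
        if (!degenerate) tris[kept++] = t;  // compact the list in place
    }
    const std::size_t removed = tris.size() - kept;
    tris.resize(kept);
    return removed;
}
```

Without adjacency information this has to scan the whole triangle list for every collapse, and it has no idea whether the collapse flips a triangle or tears a UV seam.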

@JoeJ I might do it if I am really bored. Thinking about it, it would be a handy feature for my future games. I am pretty sure it will not take more than a few hours.

@yaboiryan If I understand your question correctly, that would be about enabling/disabling the depth test at the right times. And perhaps draw terrain first, then units. Some mixture of that should work fine.
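To illustrate the two options, here is a tiny software model of a depth buffer rather than real graphics API code. The `Framebuffer` struct and its members are made up for this sketch. With the depth test on, the closer fragment wins regardless of draw order. With it off, the last thing drawn wins, which is why terrain would have to be drawn before units.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Framebuffer {
    std::vector<int> color;    // one colour id per pixel
    std::vector<float> depth;  // one depth value per pixel
    bool depthTest = true;

    explicit Framebuffer(std::size_t pixels)
        : color(pixels, 0),
          depth(pixels, std::numeric_limits<float>::infinity()) {}

    // Write one fragment; a smaller z means closer to the camera.
    void write(std::size_t pixel, float z, int c) {
        assert(pixel < color.size());
        if (depthTest) {
            if (z >= depth[pixel]) return;  // hidden behind what is already there
            depth[pixel] = z;
        }
        // As in OpenGL, a disabled depth test also means no depth writes.
        color[pixel] = c;
    }
};
```

In a real renderer the same decision is made by the depth-test state of the graphics API. The point is only that the terrain cannot "draw over" a unit that is closer to the camera while the test is enabled.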

Geri said:

yaboiryan said:

@Geri Are there algorithms for this LOD technique, or do I have to lower-poly the models myself?

The algorithm is called Mesh Optimize. I don't know whether there is a trustworthy free source snippet of it online, so you will likely have to come up with your own implementation. I am sure it can be done in about 50-100 lines of code.

How about this one: https://github.com/zeux/meshoptimizer ? I have never used anything like this, but it seems to have a lot of stars and forks. There is also a website: https://meshoptimizer.org/ .

Alexpanter: As this is probably an essential feature for him, in his place I wouldn't entrust it to complex third-party code like this. But it's his decision what he does.

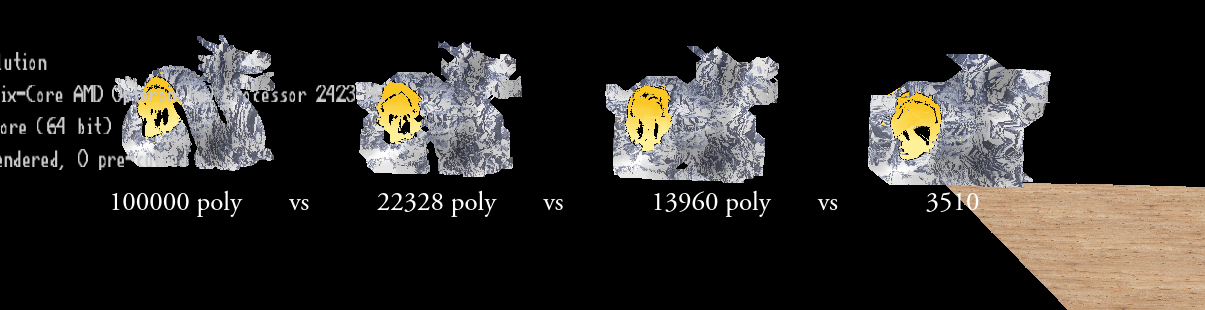

Actually I had some time today and decided to try implementing it, to see if it really can be done within a few hours, and I was able to do it.

I cheated a bit, as I reused some of my old code.

The end result is 500 lines of code (plus additional generic code, run afterwards, to remove duplicated polygons and flat surfaces). There would be room to significantly decrease that, which I will probably not do.

I am not yet satisfied with the result, because it outputs too many polygons for the given quality; probably some caves are being formed internally in the models in the process, but it does its thing.

One thing I am concerned about is the speed: this 100k poly dragon takes about 10 seconds, while it should be below 0.1 s according to my previous experience. I know what I messed up, but I have no more time, so that's it for today.

Geri said:

probably some caves are being formed internally in the models in the process, but it does its thing.

Besides that, it also looks like it merges surfaces which are close in Euclidean space but have a large geodesic distance (distance over the surface).

So if I put my right finger on my left arm, your algorithm would join my limbs to form a connected cycle, which breaks the topology of my body.

UVs are distorted and warped. There are degenerate cases that break the manifold property.

I see you can do this in a short time; you likely just clustered vertices which are close. But you can mask the issues only because the input already looks bad. No lighting, bad UVs.

If you want quality (using this in a game), or if you want to do other geometry processing tasks on the output (e.g. fixing UVs), you're out of luck imho.
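To show what I mean by clustering, here is a minimal sketch of grid-based vertex clustering. This is only my guess at the kind of approach, not the actual code from the post above, and `Point3` and `clusterVertices` are made-up names. Vertices that fall into the same grid cell get the same cluster id, no matter whether they are connected on the surface.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <tuple>
#include <vector>

struct Point3 { float x, y, z; };

// Map every vertex to a cluster id: vertices in the same grid cell share an id.
std::vector<std::size_t> clusterVertices(const std::vector<Point3>& verts, float cellSize) {
    assert(cellSize > 0.0f);
    std::map<std::tuple<long, long, long>, std::size_t> cellToCluster;
    std::vector<std::size_t> clusterOf;
    clusterOf.reserve(verts.size());
    for (const Point3& v : verts) {
        const auto key = std::make_tuple(
            static_cast<long>(std::floor(v.x / cellSize)),
            static_cast<long>(std::floor(v.y / cellSize)),
            static_cast<long>(std::floor(v.z / cellSize)));
        // A new cell gets the next free id; a known cell returns its existing id.
        const auto it = cellToCluster.emplace(key, cellToCluster.size()).first;
        clusterOf.push_back(it->second);
    }
    return clusterOf;
}
```

The function only looks at positions. A fingertip vertex and an arm vertex that happen to share a cell are merged even though the path between them over the surface is long, and that is exactly how the topology gets broken.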

Still interesting, but that's usually just the first step on a path of failures until you figure out something proper. Though, you could use a library as suggested above, or Blender.

That's not meant badly! But i'm convinced people need a really good reason if they intend to go down the rabbit hole of geometry processing.