I did some evaluations of the time I need to spend on AI. It is not that difficult to create AI. It’s not even difficult to create impossibly difficult AI. What is really hard is to create interesting AI. So I started researching neuroevolution. I spent a day reading through the NeuroEvolution of Augmenting Topologies (NEAT) paper by Kenneth O. Stanley and Risto Miikkulainen a couple of times. The paper can be found here:

http://nn.cs.utexas.edu/downloads/papers/stanley.ec02.pdf

The algorithm is not that difficult to understand, but you need a bit of knowledge about neural networks and how they work to follow the paper. Luckily, I had already taken a course in Machine Learning by Andrew Ng and Stanford University. I would really recommend it to anyone interested in the field.

Concretely for my case, I wanted to see if implementing this for the Moose in this game (and potentially all other species) would be a viable solution to create some interesting AI. I could then run a learning simulation for some time to create some base for these creatures, then add them with some mediocre intelligence to the version I ship to the end-users, but keep the ability to evolve the genome for the creatures alive in the game.

NEAT in short

For those who don’t know much about learning algorithms, I just want to explain a little about how they work and what they are for. A neural network is a computer model imitating the neurons, axons and dendrites that the brain consists of. Every neuron is a kind of tiny computer that does a single calculation based on the input it gets. It then sends the calculated value to all the neurons it is connected to, scaled by the weight of each connection.
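To make the "single calculation" concrete, here is a minimal sketch of one neuron. The sigmoid activation, the bias term and the function names are my assumptions for illustration; the description above does not say which activation function is used.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the incoming values, passed through the activation."""
    total = bias + sum(value * weight for value, weight in zip(inputs, weights))
    return sigmoid(total)


def send_downstream(value, outgoing_weights):
    """The value each connected neuron receives: the output scaled per connection."""
    return [value * weight for weight in outgoing_weights]


if __name__ == "__main__":
    out = neuron_output([0.2, 0.9], [0.5, -1.0])
    print(out, send_downstream(out, [2.0, -1.0]))
```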

Here’s a picture of a classical neural network:

This network can be trained over many iterations by measuring how far off from the goal the network performs (called the error value or cost value). You then alter the connection weights to try to improve the performance on the task you want. This could be looking at an image of a letter or number and trying to classify which letter or number it is. Or it could be analyzing an image of a road and training a car to drive itself. Common to all these purposes is that you have a large set of training data that tells the system how far off it is from the correct answer. In the digit example, the pixels of a picture are the input values on the left, and the prediction of what the hand-written number is comes out on the right.

For the case where you don’t have any training data, you can use something like neuroevolution. The requirement here is that you supply the system with a fitness function. The fitness function awards “points” to the performing genome every time it has randomly evolved to do something that helps it survive.

For a very “simple” example, I’d recommend you watch SethBling’s MarI/O video on YouTube:

Here, he creates a system to teach a computer to play Mario. The fitness function is just a value of how far Mario has moved over time. This works fine for this case. The inputs are the blocks on the screen, which he has classified into two types (standable and enemy).

It is a rather impressive implementation.

So, an evolution algorithm works by creating a genome, mutating it to add random neurons and random connections between them, and then measuring how well it does before the creature dies (or is stopped by some other kill function). We then take the best few of the saved genomes and fuse them together. The fusion compares the genes in the two genomes and, for each gene that matches, adds it to the child with the value from either parent. It then takes the genes that don’t match up from the best performing parent (the one with the highest fitness number) and puts them into the new child.

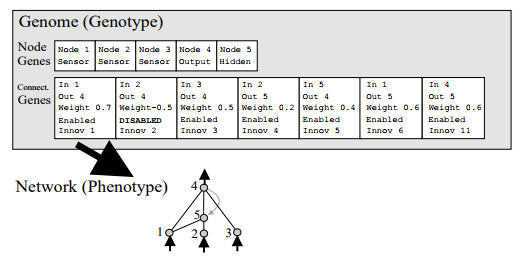

What is essential to get NEAT to work is understanding how a genome is built up. Kenneth Stanley has some quite smart ideas on tracking where the different genes come from. A genome here is two lists: one describing the neurons in the system and one describing the connections between those neurons.

The connections have an extra feature. They have what he calls an Innovation number attached, which describes when in the simulation this connection has appeared. It is this value that is used to compare the genes when fusing the genomes.
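The genome layout and the fusion step can be sketched roughly like this. It is a simplified illustration, not my actual implementation: the class and field names are made up for the example, and details from the paper such as re-enabling disabled genes and speciation are left out.

```python
import random
from dataclasses import dataclass, replace


@dataclass
class ConnectionGene:
    innovation: int      # when this connection first appeared in the simulation
    source: int          # id of the neuron the connection starts at
    target: int          # id of the neuron the connection ends at
    weight: float        # scaling value of the connection
    enabled: bool = True


@dataclass
class Genome:
    neurons: list        # neuron ids
    connections: list    # ConnectionGene entries
    fitness: float = 0.0


def crossover(a: Genome, b: Genome, rng=random) -> Genome:
    """Fuse two genomes by lining up their genes on the innovation number."""
    fitter, weaker = (a, b) if a.fitness >= b.fitness else (b, a)
    weaker_genes = {gene.innovation: gene for gene in weaker.connections}
    child_connections = []
    for gene in fitter.connections:
        match = weaker_genes.get(gene.innovation)
        # Matching genes come from either parent at random; genes without
        # a match are inherited from the fitter parent only.
        chosen = rng.choice([gene, match]) if match is not None else gene
        child_connections.append(replace(chosen))  # copy, so parents stay untouched
    return Genome(neurons=list(fitter.neurons), connections=child_connections)
```

Because matching genes share the same source and target, taking the neuron list from the fitter parent is enough to cover every connection in the child.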

As the simulation runs and you breed the best performing creatures/genomes, you get better and better performing creatures. How long this simulation takes to run depends on how complex the behavior you want from the system is.

Implementing the System

Running the Neural Network

I started by planning the interface for the system that should be run.

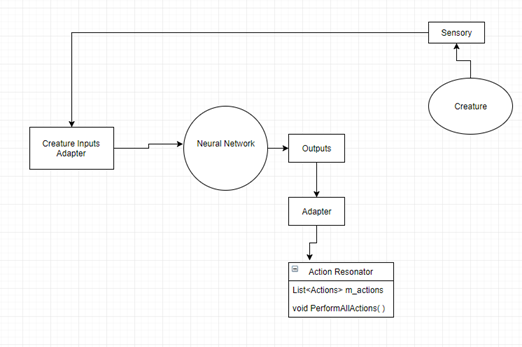

To make the evolution system generic, the “brainstem” must be defined for each creature. Analogous to the real brain, this system will need a coupling between the actual brain and the inputs/outputs that each creature can have.

The brainstem works as a relay, interpreting the signals from the body and other senses and forwarding them to the brain. Along the way, these signals are dampened or enhanced depending on the severity of the signal, and possibly also per specific signal. The brainstem also converts signals from the brain into nerve impulses to the body and muscles.

It is this module that should be defined for each creature, so that a single generic learning algorithm can be run for all creatures.

So a general model for how the brain iteration could work is something like this:

Every iteration starts with the creature “sensing” what is around it. For the Moose, I wanted to include things like its hunger and thirst level, because I wanted to see if it could evolve to be smart enough to find food or water when it was almost dead. I ended up with a long list of such sensory inputs.

The sensory data is gathered and sent to an adapter that feeds these values into the neural network. The network is iterated through, and the output values are again sent to an adapter that interprets each of the output signals. The output signals are translated into actions and sent to a Resonator module. Its function is to save the last output from the neural network and keep performing the given actions until the next output arrives. The reason I created this module was that I wasn’t sure my little laptop could keep up with running the network every frame. This means I can turn the update rate down to something like ten times per second, and the creature will still act in between iterations.
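The idea behind the Resonator can be sketched as follows. This is a language-neutral illustration in Python rather than the game's own code; the class layout, the `think` callback and the `interval` parameter are assumptions made for the example.

```python
class Resonator:
    """Holds the last set of actions and only re-runs the brain every `interval` seconds."""

    def __init__(self, think, interval=0.1):
        self.think = think            # hypothetical callback: senses -> actions
        self.interval = interval      # 0.1 s is roughly ten brain updates per second
        self.elapsed = interval       # forces a brain update on the very first frame
        self.last_actions = {}

    def update(self, dt, senses):
        """Call once per frame; returns the actions the creature should perform now."""
        self.elapsed += dt
        if self.elapsed >= self.interval:
            self.elapsed = 0.0
            self.last_actions = self.think(senses)
        return self.last_actions
```

At 25 frames per second (`dt = 0.04`), the brain in this sketch runs on the first frame and then on every third frame after that, while the creature keeps acting on the stored output in between.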

The Evolution System

When the creatures die or are terminated for some reason, the evolution system comes into play. I started by making what you could call a cradle for the evolution to start in. This was just a terrain where I put up four walls to create an area of something like a square kilometer. To start with, I made 26 copies of the same creature that were “protected” by the evolution system, meaning that when one of them dies, its body isn’t deleted, only its brain. This way, I made sure that no matter how much bad luck they had, at least 26 entities would be simulating at any given moment.

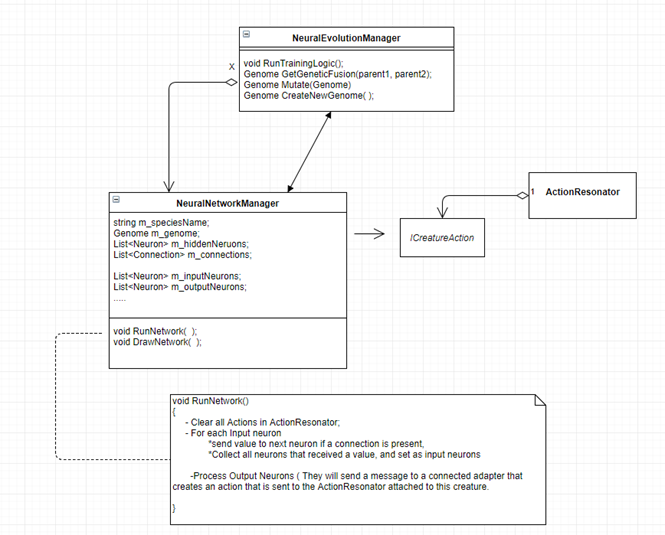

When a creature did nothing for a configured amount of time, I terminated its brain, created/fused/mutated a new one, and injected this brain into the creature along with resetting its vitals.

The Neural Network Manager attached to each creature records the creature’s performance and saves its fitness number to a file when it dies. The Neural Evolution Manager is then responsible for finding the genomes for the specific creature, breeding the best of them, mutating the result, instantiating the new brain and injecting it into the creature again. The same algorithm is used when a natural birth occurs, only these individuals die for real when they run out of food or water.
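The breeding step described above might look something like this in outline. The function name `next_brain`, the `top_n` cut-off and the `breed`/`mutate` callbacks are hypothetical names for this sketch; how many of the best genomes are kept is a tuning choice, not something fixed by NEAT.

```python
import random


def next_brain(records, breed, mutate, top_n=5, rng=random):
    """Pick two of the best saved genomes, fuse them and mutate the result.

    records: list of (fitness, genome) pairs loaded from the saved files.
    breed:   function (genome, genome) -> genome, e.g. a NEAT crossover.
    mutate:  function (genome) -> genome.
    """
    if not records:
        raise ValueError("no saved genomes to breed from")
    ranked = sorted(records, key=lambda record: record[0], reverse=True)
    best = [genome for _, genome in ranked[:top_n]]
    if len(best) == 1:
        # Only one genome on record: mutate it instead of breeding.
        return mutate(best[0])
    mother, father = rng.sample(best, 2)
    return mutate(breed(mother, father))
```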

Presentation

For debugging any system like this, testing and unit tests are necessary. With real-time simulations, however, you cannot necessarily test every scenario that the system will experience, mainly because it can be hard to imagine every scenario. So visualizing the actual neural network is also vital.

Here’s a video of the performing system from the beginning.

https://drive.google.com/open?id=0BxnLa_qsqQBoR3Q5S2IzS2dzRlU

And another session: https://drive.google.com/file/d/0BxnLa_qsqQBoVGpQd3ZEMTBsNzg/view?usp=sharing

What you can see is me starting by setting some parameters for the evolution system. These are values such as how long the creature can stand still and do nothing before termination, or how much its fitness has to change to be recorded. There’s also a value that caps how many loop iterations the neural network can take when it is evaluated. This cap is needed because two nodes can feed each other, creating an infinite loop that never returns.
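One way to apply such a cap is to evaluate the network in repeated passes and stop either when the values settle or when the limit is hit. The sketch below is an assumption about how this could be done, not a copy of my implementation; the `tanh` activation, the `tolerance` parameter and the tuple layout of the connections are chosen only for illustration.

```python
import math


def activate(connections, inputs, output_ids, max_iterations=10, tolerance=1e-6):
    """Evaluate a network that may contain loops.

    connections: list of (source_id, target_id, weight) tuples.
    inputs:      dict mapping input neuron id -> sensed value.
    """
    values = dict(inputs)
    for _ in range(max_iterations):   # hard cap, so two nodes feeding each other always return
        sums = {}
        for source, target, weight in connections:
            sums[target] = sums.get(target, 0.0) + values.get(source, 0.0) * weight
        new_values = dict(inputs)     # input neurons keep their sensed values
        for node, total in sums.items():
            if node not in inputs:
                new_values[node] = math.tanh(total)
        settled = all(
            abs(new_values[node] - values.get(node, 0.0)) <= tolerance
            for node in new_values
        )
        values = new_values
        if settled:
            break
    return [values.get(node, 0.0) for node in output_ids]
```

A purely feed-forward network settles after a few passes and exits early; a pair of neurons that keep exciting or inhibiting each other simply uses up the allowed iterations and returns whatever values it has at that point.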

The next thing that happens is… nothing. Nothing happens for the first 10-20 seconds. Eventually, a creature starts reproducing, running, turning or eating, which is awarded fitness points. The next generation of creatures then inherits these traits, and half of them start doing the same.

The green orbs on the left are the input values for the creature currently being viewed. The red orbs are the output values for that same creature. The yellow orbs that begin to appear in between are “hidden neurons”. The lines between them are connections, and each connection’s scaling value is represented by how strongly it is colored (black/grey means close to zero).

Eventually they develop some behavior that gets them moving forward. At generation 10, they start to be able to detect obstacles in front of them, turn, and conserve energy based on the steepness of the surface they stand on. This is very interesting to me. I really feel like this has great potential once I clean up the input values and normalize them.

If any of you are interested in seeing this early build and reading the source code, I’d be happy to share it.

What's next?

I need to supply a hunter/predator to the training simulation. This is just going to be a placeholder, most likely an animated red box with the “Player” tag, acting as a hunter that follows the creature and kills it when it is close enough. This will eventually train the creatures to flee, turn or attack at the right moment so that they don’t get killed, and thus survive and increase their fitness. This requires the creatures to know the relative direction to the predator, and perhaps the distance. Another critical improvement for this system is normalizing the input values to be between zero and one. Some of these values are vectors and require vector normalization, and I fear these calculations may be hard on the system and force me to turn down the iterations per second.
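As a rough sketch of what that normalization could look like: scalars are clamped into the zero-to-one range, and the vector to the predator is reduced to a relative direction and a distance. The function names, the 2D ground-plane coordinates, the heading convention (radians, measured from the forward axis) and the `sense_range` parameter are all assumptions for this example.

```python
import math


def normalize_scalar(value, low, high):
    """Map value from [low, high] to [0, 1], clamping anything outside the range."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def predator_inputs(creature_pos, creature_heading, predator_pos, sense_range):
    """Return (direction, distance), both in [0, 1].

    Positions are (x, z) pairs on the ground plane. A direction of 0.5 means
    the predator is straight ahead; values toward 0 or 1 mean it is behind.
    """
    dx = predator_pos[0] - creature_pos[0]
    dz = predator_pos[1] - creature_pos[1]
    distance = math.hypot(dx, dz)
    bearing = math.atan2(dx, dz) - creature_heading
    # Wrap the angle into [-pi, pi) before scaling it to [0, 1).
    bearing = (bearing + math.pi) % (2.0 * math.pi) - math.pi
    direction = (bearing + math.pi) / (2.0 * math.pi)
    return direction, normalize_scalar(distance, 0.0, sense_range)
```

Encoding the predator as one angle and one distance keeps the number of inputs small, at the cost of one `atan2` and one square root per brain update.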

I will optimize this further and begin some game tests when I have the time.

I apologize for the bad video quality. For some reason it gets worse when I upload it. I'll find another place to upload it to, or try recording at a better quality.