A follow-up to the previous post about my Vulkan mini-engine.

This time, I added a spot light component, which benefits from light culling as well, plus HDR support (HDR10 standard).

Compilation-free parameter nodes, as long as the graph itself doesn't change. When modifying a parameter node, I no longer need to hit “compile”. This is easy for rasterization passes and a bit tricky for ray tracing hit group shaders, especially in Vulkan.

A few bug fixes and a new world editor. But TBH I'm a bit tired of Win32 and plan to move to Dear ImGui for most GUIs in the future.

*Disclaimer: This is just a personal exercise in engine programming. Look elsewhere if you're here for game development.

Git link: https://github.com/EasyJellySniper/Unheard-Engine

Spot lights

Thanks to the light culling, both lighting and ray-traced shadow performance are still fine.

The culling is very similar to the point light culling. I could even be lazy and use the point light culling method directly (with the spot light range as the radius).

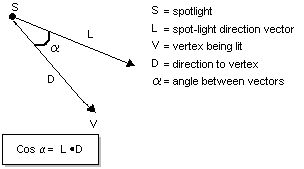

But to get a better result, it's still recommended to take the spot light cone into account. I made the calculation simpler:

bool bIsOverlapped = SphereIntersectsFrustum(float4(SpotLightViewPos, SpotLight.Radius), TileFrustum)

&& IsTileWithinSpotAngle(SpotLight.Position, SpotLight.Dir, SpotLight.Angle, TileCornersWorld);

Combining the sphere-frustum test with the spot light angle test gives me an approximate cone range.
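The post doesn't show the body of IsTileWithinSpotAngle, so here is a minimal CPU-side C++ sketch of one way such a test could work. It assumes SpotLight.Angle is the half-angle from the cone axis in radians (which matches how the shaders later compare against it) and that the tile is described by 8 world-space corners. The Vec3 type and the helper functions are hypothetical stand-ins, not the engine's code.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 Sub(Vec3 A, Vec3 B) { return { A.x - B.x, A.y - B.y, A.z - B.z }; }
static float Dot(Vec3 A, Vec3 B) { return A.x * B.x + A.y * B.y + A.z * B.z; }

// True if point P lies inside the infinite cone defined by the apex,
// the normalized axis Dir and the half-angle in radians.
bool IsPointWithinSpotAngle(Vec3 Apex, Vec3 Dir, float HalfAngle, Vec3 P)
{
    assert(HalfAngle >= 0.0f && HalfAngle <= 3.14159265f);
    const Vec3 ToP = Sub(P, Apex);
    const float Len = std::sqrt(Dot(ToP, ToP));
    if (Len <= 0.0f)
    {
        return true; // the apex itself counts as inside
    }
    // compare cosines: a larger cosine means a smaller angle to the axis
    return Dot(ToP, Dir) / Len >= std::cos(HalfAngle);
}

// Hypothetical stand-in for IsTileWithinSpotAngle:
// accept the tile if any of its corners is inside the cone.
bool IsTileWithinSpotAngle(Vec3 Apex, Vec3 Dir, float HalfAngle, const std::array<Vec3, 8>& Corners)
{
    for (const Vec3& Corner : Corners)
    {
        if (IsPointWithinSpotAngle(Apex, Dir, HalfAngle, Corner))
        {
            return true;
        }
    }
    return false;
}
```

Note that a corners-only test like this can reject a tile that a narrow cone passes through without touching any corner, so a real implementation may need to be more conservative.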

After culling, the classic spot light formula is applied in the shader:

// ------------------------------------------------------------------------------------------ spot lights accumulation

// similar to the point light, but with angle attenuation as well

TileOffset = GetSpotLightOffset(TileIndex);

uint SpotLightCount = SpotLightList.Load(TileOffset);

TileOffset += 4;

UHLOOP

for (Ldx = 0; Ldx < SpotLightCount; Ldx++)

{

uint SpotLightIdx = SpotLightList.Load(TileOffset);

TileOffset += 4;

UHSpotLight SpotLight = UHSpotLights[SpotLightIdx];

LightInfo.LightColor = SpotLight.Color.rgb;

LightInfo.LightDir = SpotLight.Dir;

LightToWorld = WorldPos - SpotLight.Position;

// squared distance attenuation

LightAtten = 1.0f - saturate(length(LightToWorld) / SpotLight.Radius + AttenNoise);

LightAtten *= LightAtten;

// squared spot angle attenuation

float Rho = dot(SpotLight.Dir, normalize(LightToWorld));

float SpotFactor = (Rho - cos(SpotLight.Angle)) / (cos(SpotLight.InnerAngle) - cos(SpotLight.Angle));

SpotFactor = saturate(SpotFactor);

LightAtten *= SpotFactor * SpotFactor;

LightInfo.ShadowMask = LightAtten * ShadowMask;

Result += LightBRDF(LightInfo);

}

And the ray tracing shadow shader:

// ------------------------------------------------------------------------------------------ Spot Light Tracing

TileOffset = GetSpotLightOffset(TileIndex);

LightCount = (bIsTranslucent) ? SpotLightListTrans.Load(TileOffset) : SpotLightList.Load(TileOffset);

TileOffset += 4;

for (Ldx = 0; Ldx < LightCount; Ldx++)

{

uint SpotLightIdx = (bIsTranslucent) ? SpotLightListTrans.Load(TileOffset) : SpotLightList.Load(TileOffset);

TileOffset += 4;

UHSpotLight SpotLight = UHSpotLights[SpotLightIdx];

LightToWorld = WorldPos - SpotLight.Position;

// point only needs to be traced by the length of LightToWorld

RayDesc ShadowRay = (RayDesc) 0;

ShadowRay.Origin = WorldPos + WorldNormal * Gap;

ShadowRay.Direction = -SpotLight.Dir;

ShadowRay.TMin = Gap;

ShadowRay.TMax = length(LightToWorld);

// do not trace out-of-range pixel

float Rho = acos(dot(normalize(LightToWorld), SpotLight.Dir));

if (ShadowRay.TMax > SpotLight.Radius || Rho > SpotLight.Angle)

{

continue;

}

UHDefaultPayload Payload = (UHDefaultPayload) 0;

Payload.MipLevel = MipLevel;

TraceRay(TLAS, RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH, 0xff, 0, 0, 0, ShadowRay, Payload);

// .... after tracing stuffs .... //

}

So far, the mini-engine covers the 3 basic light types. I'll add more details/settings in the future based on the requirements.

In brief, tile-based culling plays the key role. It also reduces the number of TraceRay() calls.
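To make the math in the two shaders above easier to follow, here is a small CPU-side C++ sketch of the same formulas: the squared distance falloff, the squared angular falloff between the inner and outer angle, and the early-out before tracing. The function names are hypothetical, AttenNoise is left out, and the early-out compares cosines instead of calling acos(), which is an equivalent variation I swapped in rather than what the shader does.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

static float Saturate(float V) { return std::min(std::max(V, 0.0f), 1.0f); }

// Squared distance falloff, same shape as the lighting shader (AttenNoise omitted).
float DistanceAttenuation(float Distance, float Radius)
{
    assert(Radius > 0.0f);
    const float Atten = 1.0f - Saturate(Distance / Radius);
    return Atten * Atten;
}

// Squared angular falloff between the inner and outer half-angles (radians).
// CosToPixel stands for dot(SpotLight.Dir, normalize(LightToWorld)).
float SpotAttenuation(float CosToPixel, float InnerAngle, float OuterAngle)
{
    assert(InnerAngle < OuterAngle);
    const float CosOuter = std::cos(OuterAngle);
    const float CosInner = std::cos(InnerAngle);
    const float Factor = Saturate((CosToPixel - CosOuter) / (CosInner - CosOuter));
    return Factor * Factor;
}

// Early-out before tracing a shadow ray: out of range or outside the cone.
// cos() is decreasing on [0, pi], so "angle > OuterAngle" becomes "cosine < cos(OuterAngle)".
bool ShouldSkipShadowRay(float Distance, float Radius, float CosToPixel, float OuterAngle)
{
    return Distance > Radius || CosToPixel < std::cos(OuterAngle);
}
```

A pixel on the cone axis at half the light radius gets a distance attenuation of 0.25 and a spot attenuation of 1, while a pixel exactly on the outer cone edge gets a spot attenuation of 0.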

HDR10 Support

Unfortunately, I only managed to find one HDR monitor to test with. So this feature is highly experimental and could change at any time.

I didn't label which screenshots have HDR enabled, but it should be obvious: the ones that keep more color information are the HDR ones.

In SDR, we don't have enough color depth, and accumulated bright colors eventually clip to white. In HDR, it's still possible to preserve the color info at high intensity. I don't think I need to further introduce the benefits of HDR 🙂

The steps to implement HDR10 in Vulkan:

- Check whether the swap chain surface supports a VkSurfaceFormatKHR that has the format VK_FORMAT_A2B10G10R10_UNORM_PACK32 and the color space VK_COLOR_SPACE_HDR10_ST2084_EXT.

- There is no need to check whether “Use HDR” is enabled in Win10 desktop. The Vulkan API seems to automatically enable it for us.

- Set the HDR metadata. Enable the VK_EXT_hdr_metadata extension for this, since it provides vkSetHdrMetadataEXT.
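The first step above can be sketched as follows. To keep the example compilable without the Vulkan SDK, it uses stand-in types: SurfaceFormat plays the role of VkSurfaceFormatKHR, and the enumerators are placeholders for the real Vulkan constants, not their actual values. In real code the list would come from vkGetPhysicalDeviceSurfaceFormatsKHR. PickSurfaceFormat and IsHDR10Format are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Stand-ins so the sketch compiles without the Vulkan SDK. The enumerator
// values are placeholders, not the real Vulkan constants.
enum class Format { B8G8R8A8_SRGB, A2B10G10R10_UNORM_PACK32 };
enum class ColorSpace { SRGB_NONLINEAR, HDR10_ST2084 };

struct SurfaceFormat // plays the role of VkSurfaceFormatKHR
{
    Format format;
    ColorSpace colorSpace;
};

// True if the pair is the one HDR10 output needs.
bool IsHDR10Format(const SurfaceFormat& F)
{
    return F.format == Format::A2B10G10R10_UNORM_PACK32
        && F.colorSpace == ColorSpace::HDR10_ST2084;
}

// Picks the HDR10 pair when it is requested and available, otherwise falls
// back to the first reported format. bOutIsHDR tells the caller which path was taken.
SurfaceFormat PickSurfaceFormat(const std::vector<SurfaceFormat>& Formats, bool bWantHDR, bool& bOutIsHDR)
{
    assert(!Formats.empty()); // a presentable surface reports at least one format
    bOutIsHDR = false;
    if (bWantHDR)
    {
        for (const SurfaceFormat& F : Formats)
        {
            if (IsHDR10Format(F))
            {
                bOutIsHDR = true;
                return F;
            }
        }
    }
    return Formats.front();
}
```

Returning the HDR flag alongside the format makes it easy to decide later whether the metadata call and the manual gamma conversion are needed.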

The code to set the HDR metadata:

// HDR metadata setting

if (bSupportHDR && ConfigInterface->RenderingSetting().bEnableHDR)

{

VkHdrMetadataEXT HDRMetadata{};

HDRMetadata.sType = VK_STRUCTURE_TYPE_HDR_METADATA_EXT;

// follow the HDR10 metadata, Table 49. Color Spaces and Attributes from Vulkan specs

HDRMetadata.displayPrimaryRed.x = 0.708f;

HDRMetadata.displayPrimaryRed.y = 0.292f;

HDRMetadata.displayPrimaryGreen.x = 0.170f;

HDRMetadata.displayPrimaryGreen.y = 0.797f;

HDRMetadata.displayPrimaryBlue.x = 0.131f;

HDRMetadata.displayPrimaryBlue.y = 0.046f;

HDRMetadata.whitePoint.x = 0.3127f;

HDRMetadata.whitePoint.y = 0.3290f;

// @TODO: expose MaxOutputNits, MinOutputNits, MaxCLL, MaxFALL for user input

// VkHdrMetadataEXT expects luminance values in nits, so no unit scaling is applied

HDRMetadata.maxLuminance = 1000.0f;

HDRMetadata.minLuminance = 0.001f;

HDRMetadata.maxContentLightLevel = 2000.0f;

HDRMetadata.maxFrameAverageLightLevel = 500.0f;

// PFN_vkSetHdrMetadataEXT

GVkSetHdrMetadataEXT(LogicalDevice, 1, &SwapChain, &HDRMetadata);

}

The HDR metadata setting doesn't seem to affect the rendering on my HDR monitor (LG 27 inch, can't recall the model). I'll test this further if I find another HDR monitor.

The problems after HDR is enabled

- Too bright. Need a way to lower the overall intensity.

- sRGB vs. linear issue. With SDR I let it output to an R8G8B8A8_SRGB swap chain. But now it outputs to a _UNORM format, so a manual gamma conversion is needed.

For now I simply apply the following calculations in the shader at a certain point:

// apply a log10 and gamma conversion

Result = log10(Result + 1);

Result = pow(Result, 0.454545f);

This is just the first step of HDR support. I'll test it on more HDR monitors and refine the formula.
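To get a feel for what the log10 and gamma lines above do to a single channel, here is a tiny CPU-side C++ mirror of them. CompressHDR is a hypothetical name, and the sketch only reproduces the arithmetic, not the rest of the shader.

```cpp
#include <cassert>
#include <cmath>

// CPU mirror of the two shader lines:
//   Result = log10(Result + 1);
//   Result = pow(Result, 0.454545f);
float CompressHDR(float Value)
{
    assert(Value >= 0.0f); // scene color is expected to be non-negative
    const float Compressed = std::log10(Value + 1.0f);
    return std::pow(Compressed, 0.454545f);
}
```

With this curve an input of 0 stays at 0 and an input of 9 lands at 1, since log10(9 + 1) = 1. Everything in between is compressed, which is what lowers the overall intensity.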

Compilation-Free Parameter Node

Sorry for the bad quality gif. Original link: https://i.imgur.com/TjXw7nD.gifv

In brief, generated material shader code is now like this:

cbuffer PassConstant : register(UHMAT_BIND)

{

int Node_1331_Index;

int Node_1341_Index;

int Node_1357_Index;

int Node_1363_Index;

float3 Node_55381;

float Padding0;

float3 Node_1318;

float Node_1315;

float Node_1373;

float Node_1347;

float Node_1350;

int DefaultAniso16_Index;

float GCutoff;

float GEnvCubeMipMapCount;

}

UHMaterialInputs GetMaterialInput(float2 UV0)

{

// material input code will be generated in C++ side

float4 Result_1363 = UHTextureTable[Node_1363_Index].Sample(UHSamplerTable[DefaultAniso16_Index], UV0);

float4 Result_1357 = UHTextureTable[Node_1357_Index].Sample(UHSamplerTable[DefaultAniso16_Index], UV0);

float4 Result_1341 = UHTextureTable[Node_1341_Index].Sample(UHSamplerTable[DefaultAniso16_Index], UV0);

Result_1341.xyz = DecodeNormal(Result_1341.xyz, true);

float4 Result_1331 = UHTextureTable[Node_1331_Index].Sample(UHSamplerTable[DefaultAniso16_Index], UV0);

// before the change, the parameter translation is hardcoded as:

// float3 Node_55381 = float3(0.85f,0.23f,0.61f);

// float Node_1350 = 0.87f;

// after the change, the shader reads the value from cbuffer directly

UHMaterialInputs Input = (UHMaterialInputs)0;

Input.Opacity = 1.0f;

Input.Diffuse = float3(float3(Node_1318.r, Node_1318.g, Node_1318.b).r, Node_55381.g, Node_55381.b).rgb.rgb;

Input.Occlusion = Node_1315.r;

Input.Specular = (float3(Node_1318.r, Node_1318.g, Node_1318.b).rgb * Result_1331.rgb).rgb;

Input.Normal = (Node_1315 * Result_1341.rgb).rgb;

Input.Metallic = (Node_1315 * Result_1357.rgb).r;

Input.Roughness = (Result_1363.rgb * Node_1373).r;

Input.FresnelFactor = Node_1347.r;

Input.ReflectionFactor = Node_1350.r;

Input.Emissive = float3(0,0,0);

return Input;

}

When setting up the cbuffer structure, I also have to respect the 16-byte packing rule of constant buffers:

void ParameterPadding(std::string& Code, size_t& OutSize, int32_t& PaddingNo, const size_t Stride)

{

// called before adding a parameter definition: checks whether the parameter would cross a 16-byte boundary

// and pads up to the next boundary if so, that's the cbuffer rule

const size_t CurrOffset = OutSize % 16;

const size_t AfterSize = CurrOffset + Stride;

if (AfterSize > 16)

{

// padding is needed

for (size_t Idx = 0; Idx < (16 - CurrOffset) / 4; Idx++)

{

Code += "float Padding" + std::to_string(PaddingNo) + ";\n";

PaddingNo++;

}

OutSize += 16 - CurrOffset;

}

}

This way the shader won't read wrong values caused by the 16-byte alignment, which is an important detail! Remember: DON'T define the padding parameter as float Padding[N]; each array element in a cbuffer starts on its own 16-byte boundary, so an array would break the layout.

When copying the parameters to the cbuffer, I simply use memcpy_s() with the proper destination address.
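As a sanity check, the padding rule can be run against the start of the cbuffer shown earlier. The sketch below repeats the ParameterPadding logic and adds two hypothetical helpers: AddParameter, which pads, emits the definition and returns the byte offset, and WriteParameter, which copies a value into a CPU-side byte buffer at that offset. It uses std::memcpy instead of memcpy_s() only to stay portable.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Same logic as ParameterPadding above: pad to the next 16-byte boundary
// if the upcoming parameter would straddle it.
void ParameterPadding(std::string& Code, size_t& OutSize, int32_t& PaddingNo, const size_t Stride)
{
    const size_t CurrOffset = OutSize % 16;
    if (CurrOffset + Stride > 16)
    {
        for (size_t Idx = 0; Idx < (16 - CurrOffset) / 4; Idx++)
        {
            Code += "float Padding" + std::to_string(PaddingNo) + ";\n";
            PaddingNo++;
        }
        OutSize += 16 - CurrOffset;
    }
}

// Hypothetical helper: pads if needed, emits the definition and
// returns the byte offset where the parameter lives in the cbuffer.
size_t AddParameter(std::string& Code, size_t& OutSize, int32_t& PaddingNo,
    const std::string& Type, const std::string& Name, const size_t Stride)
{
    assert(Stride % 4 == 0 && Stride <= 16);
    ParameterPadding(Code, OutSize, PaddingNo, Stride);
    const size_t Offset = OutSize;
    Code += Type + " " + Name + ";\n";
    OutSize += Stride;
    return Offset;
}

// Hypothetical helper: writes a value into the CPU copy of the cbuffer at its offset.
void WriteParameter(std::vector<uint8_t>& Buffer, const size_t Offset, const void* Value, const size_t Size)
{
    assert(Offset + Size <= Buffer.size());
    std::memcpy(Buffer.data() + Offset, Value, Size);
}
```

Feeding it four ints followed by float3 Node_55381, float3 Node_1318 and float Node_1315 yields offsets 16, 32 and 44 for the last three, with a single Padding0 emitted after Node_55381, which matches the generated cbuffer in this post.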

Next, take a look at the ray tracing hit group shader:

// another descriptor array for matching; since Vulkan doesn't implement local descriptors yet, I need this to fetch data

// access via [InstanceID()][0] first, the data will be filled by the system on C++ side

// max number of data member: 128 scalars for now

struct MaterialData

{

uint Data[128];

};

StructuredBuffer<MaterialData> UHMaterialDataTable[] : register(t0, space6);

// get material input, the simple version that has opacity only

UHMaterialInputs GetMaterialInputSimple(float2 UV0, float MipLevel, out float Cutoff)

{

MaterialData MatData = UHMaterialDataTable[InstanceID()][0];

Cutoff = asfloat(MatData.Data[0]);

// material input code will be generated in C++ side

float Node_1350 = asfloat(MatData.Data[18]);

float Node_1347 = asfloat(MatData.Data[17]);

float Node_1373 = asfloat(MatData.Data[16]);

float Node_1315 = asfloat(MatData.Data[15]);

float3 Node_1318 = float3(asfloat(MatData.Data[12]), asfloat(MatData.Data[13]), asfloat(MatData.Data[14]));

float3 Node_55381 = float3(asfloat(MatData.Data[9]), asfloat(MatData.Data[10]), asfloat(MatData.Data[11]));

float4 Result_1363 = UHTextureTable[MatData.Data[7]].SampleLevel(UHSamplerTable[MatData.Data[8]], UV0, MipLevel);

float4 Result_1357 = UHTextureTable[MatData.Data[5]].SampleLevel(UHSamplerTable[MatData.Data[6]], UV0, MipLevel);

float4 Result_1341 = UHTextureTable[MatData.Data[3]].SampleLevel(UHSamplerTable[MatData.Data[4]], UV0, MipLevel);

Result_1341.xyz = DecodeNormal(Result_1341.xyz, true);

float4 Result_1331 = UHTextureTable[MatData.Data[1]].SampleLevel(UHSamplerTable[MatData.Data[2]], UV0, MipLevel);

UHMaterialInputs Input = (UHMaterialInputs)0;

Input.Opacity = 1.0f;

return Input;

return (UHMaterialInputs)0;

}

At first I tried to generate the data members of struct MaterialData on the C++ side, but that ended up causing GPU TDR issues, even though it's possible to bind structured buffers of different sizes in the descriptor array.

The issue seems to be on the GPU side; it just doesn't work. So I hardcode the MaterialData member as a uint array for now. At least it can carry up to 512 bytes of parameters.
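On the C++ side, filling such a fixed uint array could look like the sketch below. This is only an assumption about how the packing might be done, with hypothetical names (MaterialDataCPU, PushUint, PushFloat): indices are stored as-is and floats are stored as their raw bits, so that asfloat() in the shader recovers the original value.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr size_t GMaxMaterialScalars = 128; // matches uint Data[128] in the shader

// Hypothetical CPU mirror of the shader's MaterialData struct.
struct MaterialDataCPU
{
    std::array<uint32_t, GMaxMaterialScalars> Data{};
    size_t Count = 0;

    // Stores an index (texture or sampler) as-is and returns the slot it was written to.
    size_t PushUint(uint32_t Value)
    {
        assert(Count < GMaxMaterialScalars);
        Data[Count] = Value;
        return Count++;
    }

    // Stores the raw bits of a float so that asfloat() on the GPU gets the same value back.
    size_t PushFloat(float Value)
    {
        static_assert(sizeof(uint32_t) == sizeof(float), "float must be 32-bit");
        uint32_t Bits = 0;
        std::memcpy(&Bits, &Value, sizeof(Bits));
        return PushUint(Bits);
    }
};
```

The slot order has to match what the generated shader code reads, for example the cutoff in Data[0] followed by the texture and sampler index pairs.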

With this, I managed to apply compilation-free parameter nodes to the RT hit group too!

Summary

It has already been 1 year since I created the project. I don't work on it 24/7 and only use my spare time, so progress is slow.

It's tougher than just using UE/Unity, but the gain is worth it and helps build my skill set. I don't regret it at all.

For the next year, I hope I can at least finish the particle system and skeletal mesh/animation, as well as other runtime rendering features 🙂

It's also time to find a better GUI solution.

— End —