Hi,

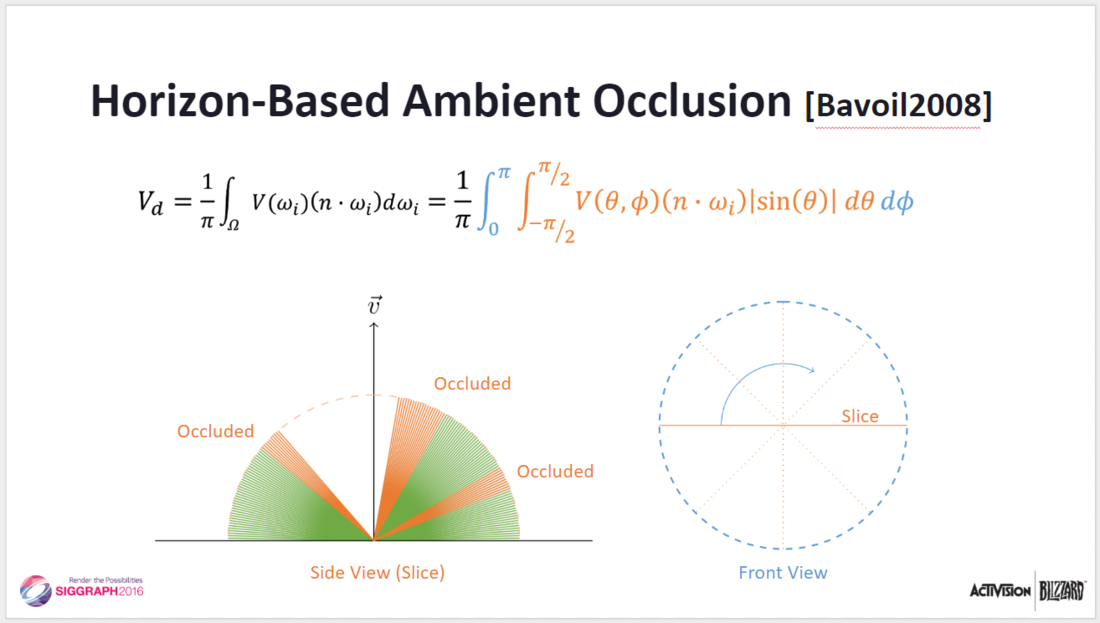

I am currently implementing the ambient occlusion technique described in the paper & presentation "Practical Realtime Strategies for Accurate Indirect Occlusion" (aka Ground-Truth Ambient Occlusion, GTAO).

The results so far are pretty good. I have found one edge case, though, where I think the technique breaks down, and I'm wondering whether there is a fix or whether this simply cannot be solved well with depth buffers alone.

The problem occurs with thin, elongated objects: they cast far too much occlusion, especially when viewed at a flat (grazing) angle.

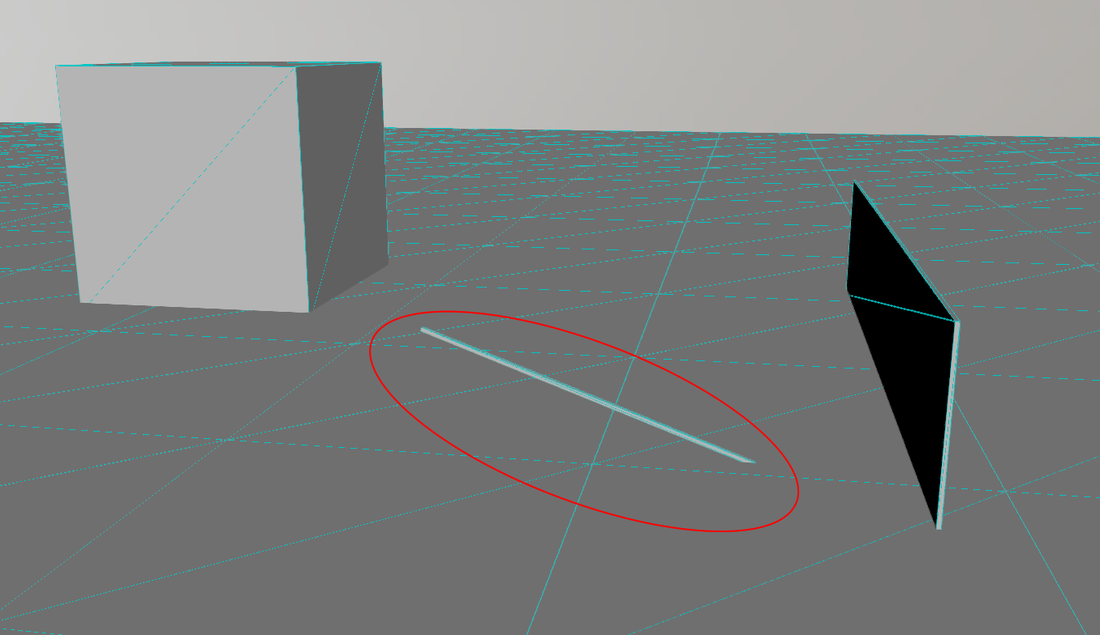

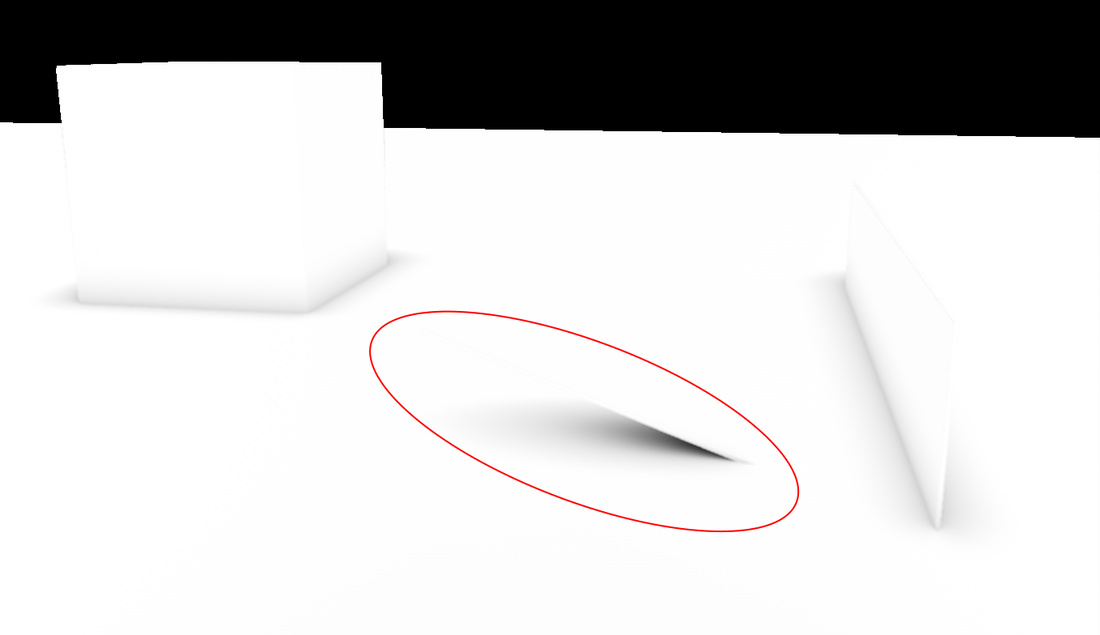

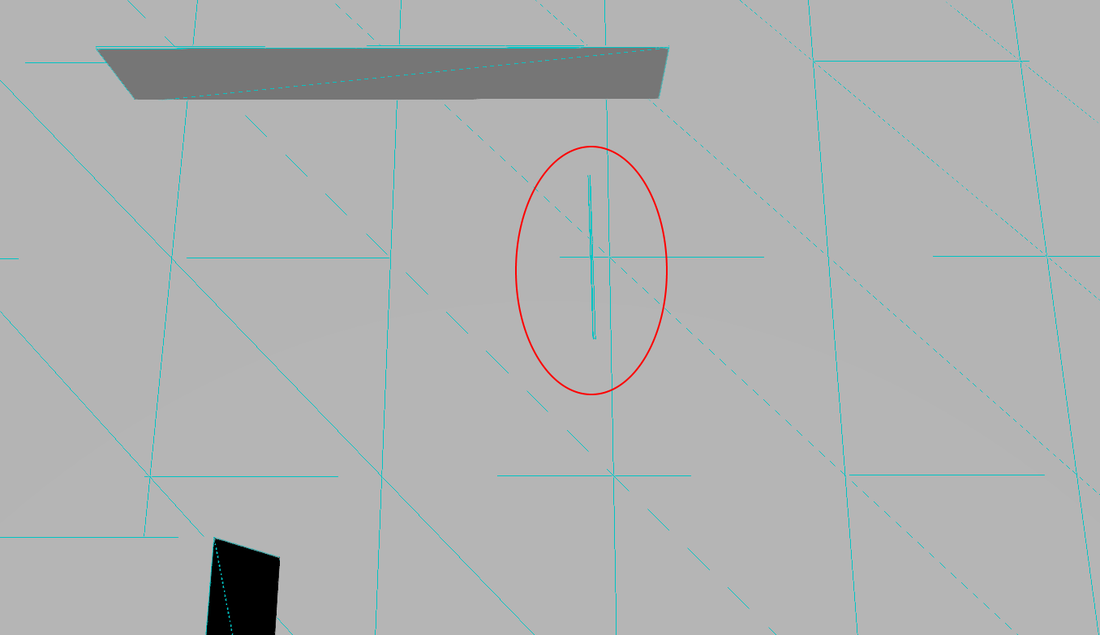

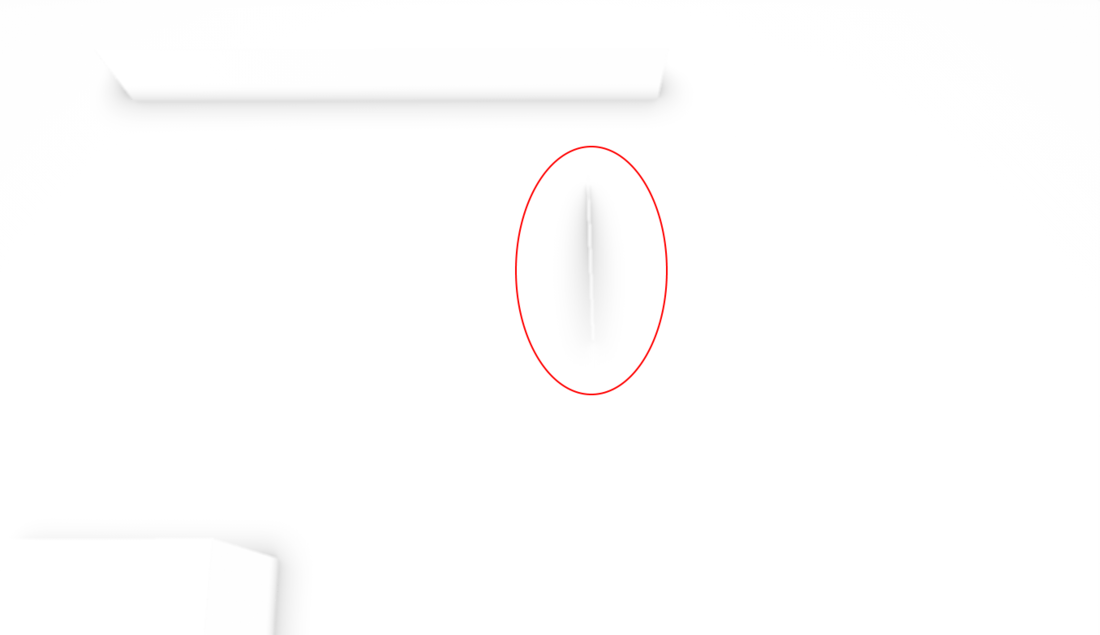

In the images I placed a thin pole, tilted at a ~20° angle, so that it sticks into the floor plane. For reference there is also a big cube that touches the ground plane, as well as a very thin wall (of similar thickness to the pole).

In the first two images you can see that the occlusion is too strong where the pole comes close to the ground plane when viewed from a flat angle.

When viewed from above (the second pair of images), the occlusion looks more reasonable (much weaker).

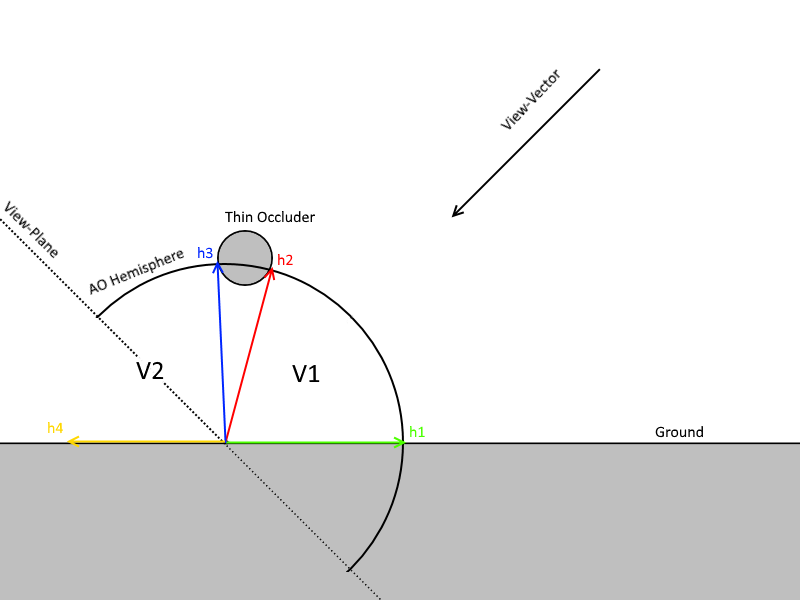

As far as I understand it, the problem arises because the HBAO/GTAO algorithm searches for the horizon angle but has no knowledge of discontinuities in the depth buffer. In the case shown in the schematic, floor fragments that lie below (in Y) AND behind (in Z) fragments of the thin pole find their steepest horizon angle on the first fragment that belongs to the pole. The visible part of the hemisphere [V1] is then bounded only by the horizon angle found on the ground plane [h1] and the second angle, which points towards the front-most fragment of the pole [h2].

That means that the total determined visibility (disocclusion) over the hemisphere is missing the entire visible back half [V2], which spans between the floor behind the pole [h4] and the back-tangent horizon onto the pole [h3].
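To put rough numbers on that reasoning, here is a minimal 2D sketch of a single half-slice. It is a simplification and not the actual shader: the shaded floor point sits at the origin with its normal pointing straight up, angles are elevations above the floor rather than the view-space angles h1..h4 from the schematic, and the pole is modelled as a circular cross-section. All function names are made up for illustration. It uses the cosine-weighted slice integral, which for this configuration reduces to cos² of the horizon elevation.

```python
import math

def half_slice_visibility_heightfield(theta_top):
    """Visibility when everything below the horizon elevation is treated as solid
    (the heightfield assumption a single depth layer forces on the horizon search)."""
    return math.cos(theta_top) ** 2

def half_slice_visibility_thin(theta_bottom, theta_top):
    """Visibility when only the elevation interval [theta_bottom, theta_top]
    is blocked, i.e. light can still pass underneath the occluder."""
    blocked = math.cos(theta_bottom) ** 2 - math.cos(theta_top) ** 2
    return 1.0 - blocked

def tangent_angles(distance, height, radius):
    """Elevations of the two tangent rays from the origin to a circle
    centred at (distance, height); assumes the origin is outside the circle."""
    center = math.atan2(height, distance)
    half_width = math.asin(radius / math.hypot(distance, height))
    return max(center - half_width, 0.0), center + half_width

if __name__ == "__main__":
    # Hypothetical numbers: pole cross-section 1 unit away, 0.5 above the floor.
    lo, hi = tangent_angles(distance=1.0, height=0.5, radius=0.05)
    print("heightfield estimate:", half_slice_visibility_heightfield(hi))
    print("thin-occluder value: ", half_slice_visibility_thin(lo, hi))
```

In this toy setup the heightfield estimate is always the darker of the two, and the gap grows as the pole gets thinner or sits higher above the floor, which matches the over-darkening I see in the side view.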

At least that's what I think is the cause of the problem. Is my thinking correct so far? And if so, are there ways to fix this issue within the GTAO/HBAO approach?

I have already tried a few ideas of my own, using a back-face Z-buffer and full depth peeling with multiple layers, but so far nothing has solved the problem without introducing new problems in the regular AO cases.

Any hints would be much appreciated. Thanks.

Schematic

Side-View RGB

Side-View AO (the thin pole casts way too much occlusion onto the ground plane)

Top-View RGB

Top-View AO (from the top perspective the problem is much less noticeable)