JoeJ said:

We would end up at discussing ‘can we achieve AGI?’, ‘do you believe we will?’, etc.

I changed my mind a bit about AGI. It is a loose term. Its meaning varies from one person to another.

If you are a businessman and your harvesting machines can go to the field, harvest it and come back without a human, you would say - “See? It is more intelligent than the human workers I fired. They were always getting drunk and falling asleep in the field.”

Who needs AGI, who cares about AGI? The important question is - “Does the robot beat a human in a fair competition?” Because if the robot outperforms the human in every aspect of the job, it is only fair for the robot to take the human's job.

JoeJ said:

Machines can be summed up without a loss in computational power.

We have two teams working on a cure.

Team A is made up of 10 researchers with an IQ of 140.

Team B is made up of a single researcher with an IQ of 200.

Can team A do a better job than team B?

I don't think 100 idiots thinking together can outsmart a single genius.

JoeJ said:

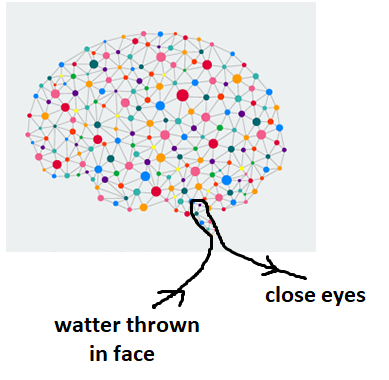

‘Can we increase human intelligence by combining multiple humans?’ I think it's a yes due to interaction and communication, likely the main reason we became so smart.

I am not sure here either. If you finish faster, you are smarter. Take a school exam as an example: they give you 45 minutes to finish. Saying “My time ran out, I would have solved it if I had 5 more minutes” will not save you from a penalty. Because the nerd in the class actually finished in 45 minutes. This means the nerd is (most probably) smarter than you. Because he finished faster. And even an idiot would solve a very complicated puzzle given enough time to try all the possibilities.

By the same measure, a computer using brute force can finish earlier than one doing an analytic search for the result.

You could argue that it is not fair to use brute force to get a result faster. But consider this -

Two robots solve a puzzle. One finishes faster than the other. He gets applauded. And gets a prize. Then two weeks later somebody rings the doorbell of the winning robot, the robot opens the door, and the person outside tells him - “Mr. Robot, we reviewed your power consumption during the puzzle test and we discovered that you were brute-forcing. Therefore we regret to inform you that you are disqualified and you have to return the prize.”

Does it really matter? Brute force or logical analysis? If you have an army of logical robots and the enemy has an army of brute-forcing robots, and they destroy your army by using a better strategy in war, what matters more? Honors for having used logic, or your head rolling for having lost the war?
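To make the point concrete, here is a minimal sketch. The puzzle (find two numbers in a list that add up to a target) and the function names are my own illustration, not something from the thread. One solver tries every pair, the other reasons about which complement it needs. Someone who only sees the answers cannot tell which solver produced which.

```python
from itertools import combinations


def solve_brute_force(numbers, target):
    """Try every possible pair until one sums to the target."""
    for a, b in combinations(numbers, 2):
        if a + b == target:
            return tuple(sorted((a, b)))
    return None


def solve_analytic(numbers, target):
    """Single pass: for each number, check whether its complement was already seen."""
    seen = set()
    for n in numbers:
        if target - n in seen:
            return tuple(sorted((target - n, n)))
        seen.add(n)
    return None


# Hypothetical puzzle instance with exactly one valid pair.
puzzle = [3, 9, 14, 20, 27, 35]
target = 47

print(solve_brute_force(puzzle, target))
print(solve_analytic(puzzle, target))
```

Both calls return the same pair. The only differences are in how much work was done on the way, which is exactly the part the judges at the door are complaining about.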

It is all very philosophical.

Again - “If it acts as if it is smart, it is smart”

“If it looks intelligent, it is intelligent”

“Not fair - he has a bigger head than mine, more brain inside, this is why he outsmarted me” - nobody will give a f. You were outsmarted by a giant head. Stop whining!

If it is better than you at a job and replaced you in that job, it does not matter whether it has an official “AGI-approved” certificate glued on its forehead or not. You starve, it eats. Evolution tells you that you are weaker and will soon disappear as a failure.

Anime quote -

A deity - “I cannot believe that a simple human defeated me - the deity” and disappears. (Typical anime.) Just as an example.

Therefore -

An AGI robot - “I cannot believe I was outsmarted by a brute-forcer” and disappears.

Who cares about AGI?!?!? It is only words. If it has a database with all the possible answers to all the questions you could possibly ask it, it can act intelligently. This is what matters. The rest is RACISM - “analyzer vs brute-forcer vs databaser”

Is connecting wires to live biological neurons fair? It is not fair. It is not a pure software-based solution. It is not fair. But it will take your job, and you will starve to death.

I have to make this clear again - only in the case of a fair competition. If a robot steals your job because it sleeps with the boss, that does not count.

JoeJ said:

Before that, my question ‘can a creature build a machine that is smarter than the creature?’ got a no, because a yes sounds too much like a perpetuum mobile, violating laws of nature.

It often happens that a very smart child is born to two alcoholic parents. The child then has a higher IQ than both of its parents put together. It is just that the child evolved along another path. A different one than the parents.

This is proof that you can create an evolutionary algorithm that evolves further than you did and outsmarts you.
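A toy sketch of that idea, under my own assumptions: the fitness function (count the 1-bits, the classic OneMax toy problem), the mutation rate and all the names are hypothetical, chosen only for illustration. It is a bare-bones mutate-and-select loop, not a serious evolutionary algorithm, and it only shows a descendant scoring higher than its ancestor on a fixed test. It says nothing about real intelligence.

```python
import random


def fitness(genome):
    """Hypothetical score: the number of 1-bits in the genome (OneMax)."""
    return sum(genome)


def mutate(genome, rng, rate=0.1):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(parent, generations=200, seed=0):
    """Keep a single survivor; a child replaces it only if it scores at least as well."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    best = parent
    for _ in range(generations):
        child = mutate(best, rng)
        if fitness(child) >= fitness(best):
            best = child
    return best


parent = [0] * 20  # the "ancestor" scores zero
descendant = evolve(parent)

print(fitness(parent), fitness(descendant))
```

The selection step never accepts a worse child, so the descendant can only match or beat the ancestor. Nobody wrote the better genome by hand. It came out of random variation plus a test.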

JoeJ said:

So i became a bit more willing to believe your SciFi visions about the future, maybe.

It depends on you. Some researcher could create a robot race that colonizes the universe and you could still be complaining - "But it is just code/artificial neurons inside. But it does not have a soul. But it cannot die if you kill it" complain complain complain.

Or you could open your mind a little bit to the idea. Only a little bit, because if you want to believe too much you could fall into the cult of some tech CEO. I think you should open up a bit more than your current state.

Reconsider again - "if it acts like a thing, it is that thing". And you will have to apply your own tastes to this - how closely does it have to replicate a human? Is it enough for it to talk to you, or do you want it to touch you too? Do you need it to fart like a human, or could you skip that? From here on it depends on your personal taste.

In the series Devs they replicated a human perfectly, atom by atom, down to the state of the quantum particles. They considered these clones not only alive, but equivalent to the original. It depends on you, but you are too pessimistic about it.

Hormones often prevent humans from thinking clearly. Computers do not suffer from this. Is this fair? This is a huge advantage for computers. What about backups? A computer can have backups and replicate itself. Is this fair?

I will make you sit at a table in front of a computer and you two will have to solve a puzzle. Then you two will start. The computer will connect to the cloud, generate 10bln random forests and get the answer in 2 seconds.

Then you would be like - “I object! It is not fair. It used the cloud and brute force, and ran 10bln simulations in 2 seconds. I cannot do that. The most I can simulate is one scenario at a time. Not fair. Not fair, it has an advantage over me.”

Kasparov's reaction when a computer beat him at his own game -

The timestamp is in the link - look at his face. Read his feelings. Does it really matter whether it used SIMD inside or not? It beat a human.

https://youtu.be/3EQA679DFRg?t=664

JoeJ said:

I have one question for you: Do you want an independent species of intelligent machines? Or do you want them to obey your orders?

NikiTo said:

I am tired of repeating - if it acts as if it is alive, it is alive. The rest is religion.

`if (input == "do you love me?") { output = "Yes! Yes yes yes!!!"; }`

Thanks for the answer. Ethically, that's not correct, NikiTo! (Beside my doubts it would work for you to betray yourself so easily.)

Everybody betrays himself one way or another, on different scales.

You cannot prove to me that you would not become the worst dictator in the history of the universe if you had the power. You cannot prove it, because you were never tempted with such power. You could say “I would be good, I would donate half my money to charity, then half my blood to sick pigeons”, but this is all words. You cannot prove it. You are not given the chance to prove it. And I would vote not to give you that power, just in case.

“Only I deserve that power. Only I can handle it.” - is that the way you think about yourself? Do you think you would be a better billionaire than the ones we have now? You and me, we can only bla bla bla about morals, because at the moment of truth, not even you know how you would react. Not even you know yourself well enough. It is easy to claim “I have never stolen a single cent” when you have never worked in politics. Then I give you a job in politics, you are given the opportunity to steal and, surprise surprise, you steal more than everybody before you. Not even you know it.

Churches are full of sinners who pretend to be saints. Politics is full of robbers who pretend never to steal.

Your moral talk has no effect on me. You did not die on a cross to pardon the sins of your enemies. You have not proven in a practical way that you have morals. Only words. And words are easy.

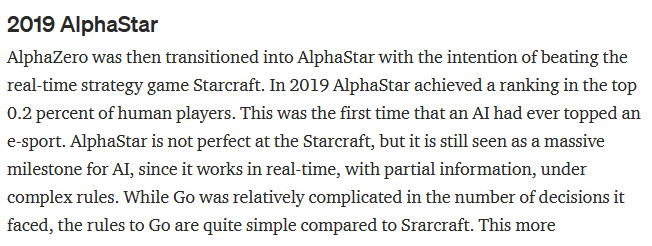

About AGI again - natural language is the last frontier for AI. It is curious how what looks like the simplest task, a chatbot, is the hardest thing to do. It is even mythological and religious -

“The oldest stories of golems date to early Judaism. … Adam was initially created as a golem … Like Adam, all golems are created from mud by those close to divinity, but no … golem is fully human. Early on, the main disability of the golem was its inability to speak. … Rava created a man. He sent the man to Rav Zeira. Rav Zeira spoke to him, but he did not answer. Rav Zeira said, "You were created by the sages; return to your dust".”

It is not AGI if it cannot speak. You can use this to define AGI - being able to use natural language fluently. If it cannot speak, it is worthless. And this comes from a religious text that long predates AI.

(Clarification - I know what AGI officially means, but what I want to say when I use “AGI” here is the thing you are after - the ultimate goal, the holy grail of AI. When you say "AGI", you mean the last task to solve in AI. The ultimate goal. After that goal is reached, it is only learning and becoming smarter and smarter. That same “AGI”. I know you write the acronym “AGI”, but I think you mean something loose.)

To sum up - you need to relax your expectations of intelligence a bit. It is all very loose and philosophical, not well defined, but we can already work with the loose term. We can already work with unclear terms and goals, if we relax a bit and lower our guard a bit. If an AI tells you a very good joke, would you laugh? Or would you cover your mouth with your hand, forcing yourself not to laugh, because it has no soul? OK, it has no soul. I agree, but it can act as if it has a soul. NNs can fake complicated and sophisticated moods and flavors. Of course it has no soul. But you could still enjoy the music it makes or the paintings it creates. You can still use it to deliver your pizza. After all, it is easier to define intelligence and consciousness than to define a soul. Leave that “it has no soul” behind for a bit.