Hi fellas,

Good day!

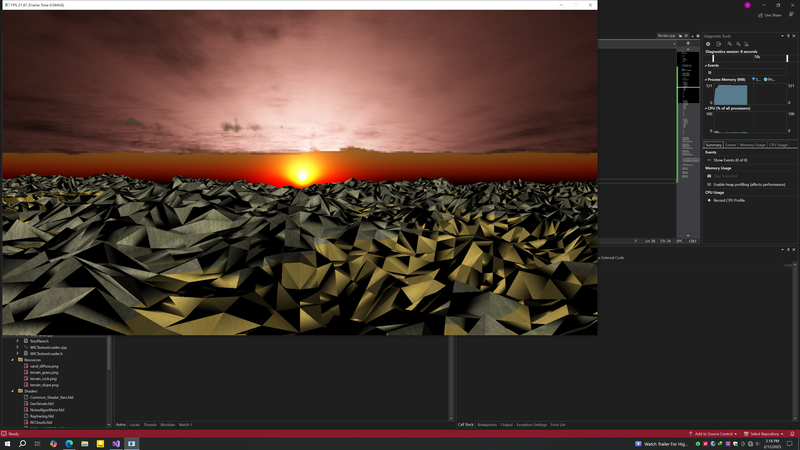

I'm trying to generate terrain dynamically using Perlin noise. To do so, I create a plane and feed it through the tessellation stages, capture the tessellated output via stream output, pass that to a compute shader that generates the height field, and then feed the result into DXR to be ray traced.

The problem is the shading; I'm using simple diffuse lighting for a start. This DXR setup worked before, when I fed raw geometry directly to DXR without tessellation. I only use the rasterization pipeline here to generate the terrain dynamically. After all these steps I have everything I need to shade in DXR, but the normals seem to be wrong. Any thoughts on how to resolve this?

Here is the compute shader:

```hlsl
#define HLSL

#include "NoiseAlgorithms.hlsl"

struct RE_VERTEX
{
    float3 Position;
    float2 TextureCoordinate;
    float3 Normal;
};

// Tessellated plane captured via stream output.
StructuredBuffer<RE_VERTEX> inVertices : register(t0);

// Displaced vertices with normals, consumed by DXR.
RWStructuredBuffer<RE_VERTEX> outVertices : register(u0);

[numthreads(1, 1, 1)]
void ComputeTerrain(uint3 DTID : SV_DispatchThreadID)
{
    // Each thread reads three consecutive vertices starting at DTID.x.
    RE_VERTEX inVertex = inVertices[DTID.x];
    RE_VERTEX inVertex2 = inVertices[DTID.x + 1];
    RE_VERTEX inVertex3 = inVertices[DTID.x + 2];

    uint seed = 100000;
    uint3 txDim = uint3(1024, 150, 1024);

    // Sample the noise once per vertex.
    float perlinY = Perlin3D(
        float(inVertex.Position.x), float(inVertex.Position.y), float(inVertex.Position.z),
        float(txDim.x), float(txDim.y), float(txDim.z),
        8.0f, 0.5f, 4.0f, true, seed + 50);

    float perlinY2 = Perlin3D(
        float(inVertex2.Position.x), float(inVertex2.Position.y), float(inVertex2.Position.z),
        float(txDim.x), float(txDim.y), float(txDim.z),
        8.0f, 0.5f, 4.0f, true, seed + 50);

    float perlinY3 = Perlin3D(
        float(inVertex3.Position.x), float(inVertex3.Position.y), float(inVertex3.Position.z),
        float(txDim.x), float(txDim.y), float(txDim.z),
        8.0f, 0.5f, 4.0f, true, seed + 50);

    // Displace each vertex along Y by the noise value.
    RE_VERTEX outVertex = (RE_VERTEX) 0;
    outVertex.Position = inVertex.Position;
    outVertex.Position.y = perlinY * 150.0f;
    outVertex.TextureCoordinate = inVertex.TextureCoordinate;

    RE_VERTEX outVertex2 = (RE_VERTEX) 0;
    outVertex2.Position = inVertex2.Position;
    outVertex2.Position.y = perlinY2 * 150.0f;
    outVertex2.TextureCoordinate = inVertex2.TextureCoordinate;

    RE_VERTEX outVertex3 = (RE_VERTEX) 0;
    outVertex3.Position = inVertex3.Position;
    outVertex3.Position.y = perlinY3 * 150.0f;
    outVertex3.TextureCoordinate = inVertex3.TextureCoordinate;

    // Face normal computed from the displaced positions, once per corner.
    float3 normal1 = normalize(cross(outVertex2.Position - outVertex.Position, outVertex3.Position - outVertex.Position));
    float3 normal2 = normalize(cross(outVertex3.Position - outVertex2.Position, outVertex2.Position - outVertex.Position));
    float3 normal3 = normalize(cross(outVertex.Position - outVertex3.Position, outVertex2.Position - outVertex3.Position));

    // Calculate avg normals
    float3 avgNormal = normalize((normal1 + normal2 + normal3) / 3);

    // Write the same averaged normal to all three vertices.
    outVertex.Normal = avgNormal;
    outVertices[DTID.x] = outVertex;

    outVertex2.Normal = avgNormal;
    outVertices[DTID.x + 1] = outVertex2;

    outVertex3.Normal = avgNormal;
    outVertices[DTID.x + 2] = outVertex3;
}
```
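
One variation that may be worth comparing against, as a sketch only: the shader above starts at `DTID.x` and touches `DTID.x + 1` and `DTID.x + 2`, so if it is dispatched with one thread per vertex, neighbouring threads read and write overlapping elements. The version below gives each thread one whole triangle instead. It assumes the stream-out buffer is a non-indexed triangle list (vertices `3*t`, `3*t+1`, `3*t+2` form triangle `t`), which the post does not confirm. `TerrainParams`, `triangleCount`, `SampleHeight` and `ComputeTerrainPerTriangle` are hypothetical names introduced for illustration, and `SampleHeight` is only a placeholder for the `Perlin3D` call.

```hlsl
struct RE_VERTEX
{
    float3 Position;
    float2 TextureCoordinate;
    float3 Normal;
};

StructuredBuffer<RE_VERTEX>   inVertices  : register(t0);
RWStructuredBuffer<RE_VERTEX> outVertices : register(u0);

// Hypothetical constant buffer; the application would have to fill it in.
cbuffer TerrainParams : register(b0)
{
    uint triangleCount; // number of triangles in the stream-out buffer
};

// Placeholder height function so the sketch is self-contained.
// In the real shader this would be the Perlin3D(...) * 150.0f expression.
float SampleHeight(float3 p)
{
    return sin(p.x * 0.05f) * cos(p.z * 0.05f) * 150.0f;
}

[numthreads(64, 1, 1)]
void ComputeTerrainPerTriangle(uint3 DTID : SV_DispatchThreadID)
{
    // One thread per triangle; skip the padding threads in the last group.
    if (DTID.x >= triangleCount)
        return;

    // First vertex of this thread's triangle (assumes a triangle list).
    uint first = DTID.x * 3;

    RE_VERTEX v0 = inVertices[first + 0];
    RE_VERTEX v1 = inVertices[first + 1];
    RE_VERTEX v2 = inVertices[first + 2];

    // Displace along Y.
    v0.Position.y = SampleHeight(v0.Position);
    v1.Position.y = SampleHeight(v1.Position);
    v2.Position.y = SampleHeight(v2.Position);

    // A single cross product of two edges gives the face normal.
    float3 n = cross(v1.Position - v0.Position, v2.Position - v0.Position);

    // Guard against degenerate (zero-area) triangles before normalizing.
    float len = length(n);
    n = (len > 1e-6f) ? (n / len) : float3(0.0f, 1.0f, 0.0f);

    v0.Normal = n;
    v1.Normal = n;
    v2.Normal = n;

    // Each thread writes only its own three elements, so there is no overlap.
    outVertices[first + 0] = v0;
    outVertices[first + 1] = v1;
    outVertices[first + 2] = v2;
}
```

With this layout the dispatch would be `ceil(triangleCount / 64)` groups in X. Whether the normal comes out pointing up or down depends on the winding order of the stream-out triangles, so the two `cross` operands may need swapping. It also produces flat per-face normals; smooth shading would need a different approach, such as deriving the normal from neighbouring height samples.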