Software renderers in the 90s didn't have or need SIMD. What they did have was:

- Very low resolutions.

- Very simple lighting model or no lighting model.

- 8-bit indexed color.

- In many cases, specialized rasterizers for floors and walls instead of or in addition to arbitrary polygon rasterizers.

- Very skilled programmers who specialized in optimizing software renderers.
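To make the indexed color point concrete, here is a minimal sketch in C. With one byte per pixel, the renderer writes a quarter of the data it would for 32-bit color, and the final color comes from a 256-entry palette. On period hardware that lookup was done by the video hardware; doing it in software, as below, is only for illustration. The function name `resolve_indexed`, the constants, and the packed `0x00RRGGBB` layout are assumptions made for this example, not taken from any particular renderer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical sizes for illustration; 320x200 is assumed here. */
enum { FB_WIDTH = 320, FB_HEIGHT = 200, PALETTE_SIZE = 256 };

/* Expand an 8-bit indexed framebuffer into packed 0x00RRGGBB pixels.
   Each input byte is an index into the 256-entry palette. */
void resolve_indexed(const uint8_t *indices, const uint32_t *palette,
                     uint32_t *out, size_t pixel_count)
{
    assert(indices && palette && out);
    for (size_t i = 0; i < pixel_count; ++i) {
        out[i] = palette[indices[i]];
    }
}
```

A full frame would be resolved with `resolve_indexed(fb, palette, screen, FB_WIDTH * FB_HEIGHT)`. Since the renderer only ever touches the one-byte indices, changing a palette entry recolors every pixel that uses it without redrawing anything.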

Very true. I'd like to add that things were also done differently in the 80s and 90s.

For example, the C64 had hardware sprites. There were also special hardware registers for smooth scrolling, and sometimes there were hardware layers, which allowed for more tricks.

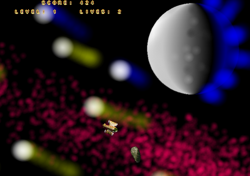

The Amiga had a hardware blitter, great for moving 2D blocks of pixels around without the CPU breaking a sweat.
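For anyone who hasn't seen one, the core job of a blit is just a rectangular copy between two pixel buffers. Below is a rough software sketch in C of what the CPU would otherwise have to do itself. It is a simplification: it assumes one byte per pixel in a plain row-major buffer and does no clipping or masking, so it does not model how the Amiga's blitter actually worked. The name `blit_rect` and its parameters are made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy a w x h block of 8-bit pixels from src to dst.
   Strides are the number of bytes per row in each buffer.
   No clipping: the caller must keep both rectangles in bounds,
   and the two buffers must not overlap. */
void blit_rect(uint8_t *dst, size_t dst_stride, size_t dst_x, size_t dst_y,
               const uint8_t *src, size_t src_stride, size_t src_x, size_t src_y,
               size_t w, size_t h)
{
    assert(dst && src);
    for (size_t row = 0; row < h; ++row) {
        memcpy(dst + (dst_y + row) * dst_stride + dst_x,
               src + (src_y + row) * src_stride + src_x,
               w);
    }
}
```

Even this simple loop costs the CPU one pass over every byte in the block, which is exactly the work that dedicated hardware took off its hands.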

But in those days, a lot of tricks were still needed to keep up with 50 FPS, since the CPU ran at only a few MHz (not GHz).

Nowadays the hardware is super capable, but it is not really targeted at making 2D games that run at 50 FPS. GPUs are mainly there to help with 3D rendering. (Of course you can “abuse” the GPU and let it do other things, like training neural networks or calculating 2D graphics.)