Hello, I'm developing a 3D engine on my own. I've studied and adopted techniques like shadow mapping and deferred shading. Although the results looked good, I wasn't satisfied at all because the approach felt non-universal (rendering the scene X times from different views, distance-dependent shadow-map dimensions, post-processing of shadow aliasing, …).

With the experience I gained, I started doing it "my way". I created a three-dimensional grid of small cells. The cell belonging to any position can be derived directly from that position. Each cell stores a list of vertex indices (the polygons related to that cell). The grid table, vertex indices and vertices are bound to the pixel shader as separate structured buffers. Until yesterday I was quite confident that shadowing could be done by checking whether the current pixel is hidden from the light source by any polygon in between. To do that, I wanted to follow the light ray from target to source cell by cell (ray-tracing-like), but I had to stop at the very first cell: the frame rate drop was disastrous.
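To make the grid lookup and the per-cell ray walk concrete, here is a minimal CPU-side sketch in Python rather than HLSL. It assumes a uniform grid with cubic cells and uses an Amanatides-Woo style traversal; the names `cell_of`, `flat_index` and `cells_along_ray`, as well as the layout of the flat grid table, are hypothetical and not taken from my engine.

```python
import math

def cell_of(pos, origin, cell_size):
    """Map a world-space position to integer cell coordinates."""
    return tuple(int(math.floor((p - o) / cell_size)) for p, o in zip(pos, origin))

def flat_index(cell, dims):
    """Index of a cell in a flat grid table (x fastest, then y, then z)."""
    x, y, z = cell
    return x + dims[0] * (y + dims[1] * z)

def cells_along_ray(start, direction, max_dist, origin, cell_size, dims):
    """Yield the cells a ray passes through, in order, up to max_dist.

    direction is assumed to be unit length, so t is a world-space distance.
    """
    cell = list(cell_of(start, origin, cell_size))
    step, t_max, t_delta = [], [], []
    for axis in range(3):
        d = direction[axis]
        if d > 0:
            boundary = origin[axis] + (cell[axis] + 1) * cell_size
            step.append(1)
            t_max.append((boundary - start[axis]) / d)
            t_delta.append(cell_size / d)
        elif d < 0:
            boundary = origin[axis] + cell[axis] * cell_size
            step.append(-1)
            t_max.append((boundary - start[axis]) / d)
            t_delta.append(-cell_size / d)
        else:
            # Ray is parallel to this axis: it never crosses a boundary on it.
            step.append(0)
            t_max.append(math.inf)
            t_delta.append(math.inf)

    t = 0.0  # distance at which the current cell was entered
    while t <= max_dist and all(0 <= cell[a] < dims[a] for a in range(3)):
        yield tuple(cell)
        axis = t_max.index(min(t_max))  # nearest boundary decides the next cell
        t = t_max[axis]
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
```

In a shadow test, each yielded cell would be the place to fetch that cell's polygon list and run the ray/triangle checks, stopping at the first hit. The sketch only shows the traversal order, not the intersection tests or any GPU memory behavior.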

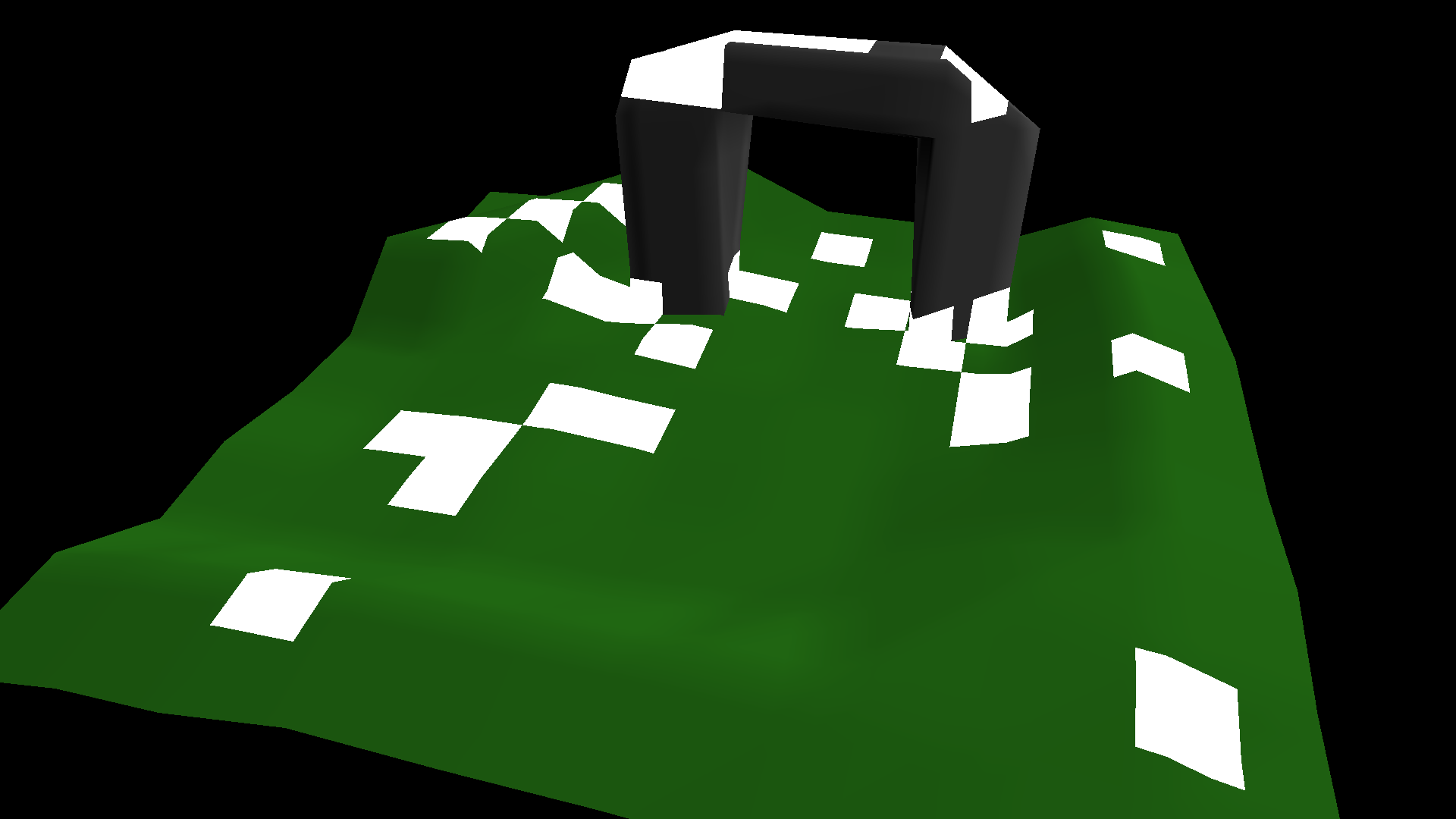

Before fetching all neighboring polygons (first the grid, then the indices and finally the vertices) I had frame rates around 1200/500 (extrapolated max/min within one second), and afterwards around 150/60. The whole scene takes only 21 draw calls; each draw unit covers a multiple of the cells mentioned above. Altogether there are only 1991 vertices, some of which are even duplicated to ensure locality in memory. Most cells contain around 10 polygons; all cells with more than 15 polygons are colored white (no cell has more than 25 polygons). Vertex size is 32 bytes. GPU output is 4K. DirectX 11 is used.

4 + 10 * 3 * 4 + 10 * 3 * 32 = 1084 (bytes read per pixel)
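The same estimate as a small Python sketch, so the terms are easier to vary. It assumes a 4-byte grid entry and 4-byte indices (which is how I read the terms above), and it assumes "4K" means 3840 × 2160; the function name `bytes_per_pixel` is just for illustration.

```python
def bytes_per_pixel(polys_per_cell=10, grid_entry_bytes=4, index_bytes=4, vertex_bytes=32):
    """Bytes requested per pixel for one cell: grid entry + indices + vertices."""
    indices = polys_per_cell * 3 * index_bytes    # 3 indices per polygon
    vertices = polys_per_cell * 3 * vertex_bytes  # 3 vertices per polygon
    return grid_entry_bytes + indices + vertices

typical = bytes_per_pixel()                      # typical cell, ~10 polygons
worst = bytes_per_pixel(polys_per_cell=25)       # fullest cell, 25 polygons

pixels = 3840 * 2160                             # assumption: 4K UHD output
per_frame = typical * pixels                     # if every pixel reads one cell

print(typical, worst, per_frame / 1e9)
```

That is roughly 9 GB of requested reads per frame for a single cell per pixel. It is an upper bound on requested bytes, not on actual DRAM traffic, because neighboring pixels read the same cell and should be served largely from cache.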

I knew the described approach would be challenging. I actually wanted to go several meters towards the sun (instead of only 0.5 m) and also towards all nearby lights. My GTX 650 Ti with 2 GB of RAM is quite old, but that outcome really surprised me. CPU collision detection, dynamic objects in the scene, more mesh detail and so on are still missing. I'm not sure whether even modern GPUs, or the ones 10-20 years from now, could handle as many dependent memory reads as I'm planning. According to the replies in https://computergraphics.stackexchange.com/questions/10685/fastest-way-to-read-only-from-buffer going that way is not a good idea.

I've already thought about smaller cells, but then the grid would have to get much larger (eventually 16 GB+ for 500 m terrains), while the polygons would have to get smaller to still fit into the cells -> more polygons and in the end the same number of checks. On the other hand, shadow mapping with volumetric lights and ray marching would also mean a horrible number of texture accesses per pixel (one for each marching step against every light source)…
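The grid-size side of that trade-off can be sketched as follows. The extent, cell sizes and the 4-byte entry per cell are hypothetical illustration values, not my actual configuration; the point is only that a dense grid grows with the cube of the resolution.

```python
import math

def grid_bytes(extent_m, cell_size_m, entry_bytes=4):
    """Memory of a dense grid table: one fixed-size entry per cell."""
    cells = 1
    for length in extent_m:
        cells *= math.ceil(length / cell_size_m)
    return cells * entry_bytes

# Hypothetical terrain: 500 m x 500 m footprint, 100 m vertical range.
extent = (500.0, 100.0, 500.0)
coarse = grid_bytes(extent, 0.5)    # 1000 * 200 * 1000 cells
fine = grid_bytes(extent, 0.25)     # 2000 * 400 * 2000 cells

print(coarse / 1e9, fine / 1e9, fine / coarse)
```

Halving the cell size multiplies the table size by 8, which is why a dense grid runs out of memory so quickly. This says nothing about sparse or hierarchical layouts, which I have not tried.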

I don't want to believe that my approach is complete garbage. Do you have any advice for me? I've read about similar ways to implement ray tracing, so I can't be totally wrong, can I?