JoeJ said:

pssst… keep silent about that. People are meant to work half a year so they can afford 600W GPUs to get the same thing, but with a shiny green badge on the lower right! :P

What green badge and RTX dependency? Laughs in custom real time path tracer!

Sorry, I had to!

Propagation Techniques

As good as they sound, their propagation speed is a major problem. They work reasonably well for light sources that are static or moving very slowly, and possibly for subtle lights, but you should not switch such lights on or off. That makes them the wrong choice for illuminating interiors, and for anywhere with dynamic lights in general. At the point where a propagation technique is applicable, progressive techniques (or even precomputation) might be the better choice. Why? They have the same problem with highly dynamic scenes, but in general they yield much better lighting quality, possibly even without voxelization or light bleeding problems. (Personal opinion: even dynamic light mapping beat my attempt at an LPV-like approach in both quality and performance.)
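To make the propagation speed problem concrete, here is a toy 1D sketch (not my actual implementation). It assumes light moves only to immediate neighbour cells in each iteration, which is how LPV-style propagation works in general. The `falloff` value and both function names are made up for illustration.

```python
def propagate_step(grid, falloff=0.5):
    """One toy propagation iteration: every cell gathers from its two
    immediate neighbours only, so light advances one cell per call."""
    n = len(grid)
    out = []
    for i in range(n):
        left = grid[i - 1] if i > 0 else 0.0
        right = grid[i + 1] if i < n - 1 else 0.0
        # Keep the cell's own value or take the attenuated neighbour light.
        out.append(max(grid[i], falloff * max(left, right)))
    return out


def iterations_until_lit(num_cells, target):
    """Count iterations until a light switched on in cell 0 reaches `target`."""
    grid = [0.0] * num_cells
    grid[0] = 1.0  # the light is switched on here
    steps = 0
    while grid[target] <= 0.0:
        grid = propagate_step(grid)
        steps += 1
    return steps


print(iterations_until_lit(32, 16))  # 16
```

If you run one iteration per frame (an assumption, budgets differ), a cell 16 voxels away reacts 16 frames after you flip the switch. That is roughly a quarter of a second at 60 fps, and very visible for a lamp in a room.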

Light Bleeding

This is the nightmare of voxel-based techniques. There are ways to reduce it, for example storing directional data in voxels, or yes - denser voxel data, which always works but at the cost of much higher memory usage.
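A quick back-of-the-envelope sketch of why "just make it denser" hurts. It assumes a plain dense 3D grid with 4 bytes per voxel (e.g. RGBA8) and, for the directional variant, 6 values per voxel (one per axis direction). These numbers are illustrative assumptions, not what any particular engine stores.

```python
def voxel_grid_bytes(resolution, bytes_per_voxel=4, directions=1):
    """Memory of a dense cubic voxel grid (no mips, no sparse storage)."""
    return resolution ** 3 * bytes_per_voxel * directions


MiB = 1024 * 1024
print(voxel_grid_bytes(128) / MiB)                # 8.0
print(voxel_grid_bytes(256) / MiB)                # 64.0
print(voxel_grid_bytes(128, directions=6) / MiB)  # 48.0
```

Doubling the resolution costs 8x the memory, while directional data at the same resolution costs 6x under these assumptions. Sparse structures change the constants, not the trend.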

Level-of-Detail differences

I hid them somewhat successfully with multiple cascades, or at least to a level I thought was visually acceptable. Compared to my real-time path tracer (which yields superior quality, but at the cost of noise), I'm still somewhat satisfied with vxgi - it offers SMOOTH global illumination. In my opinion the noise in path tracing tends to be way too distracting for the player.

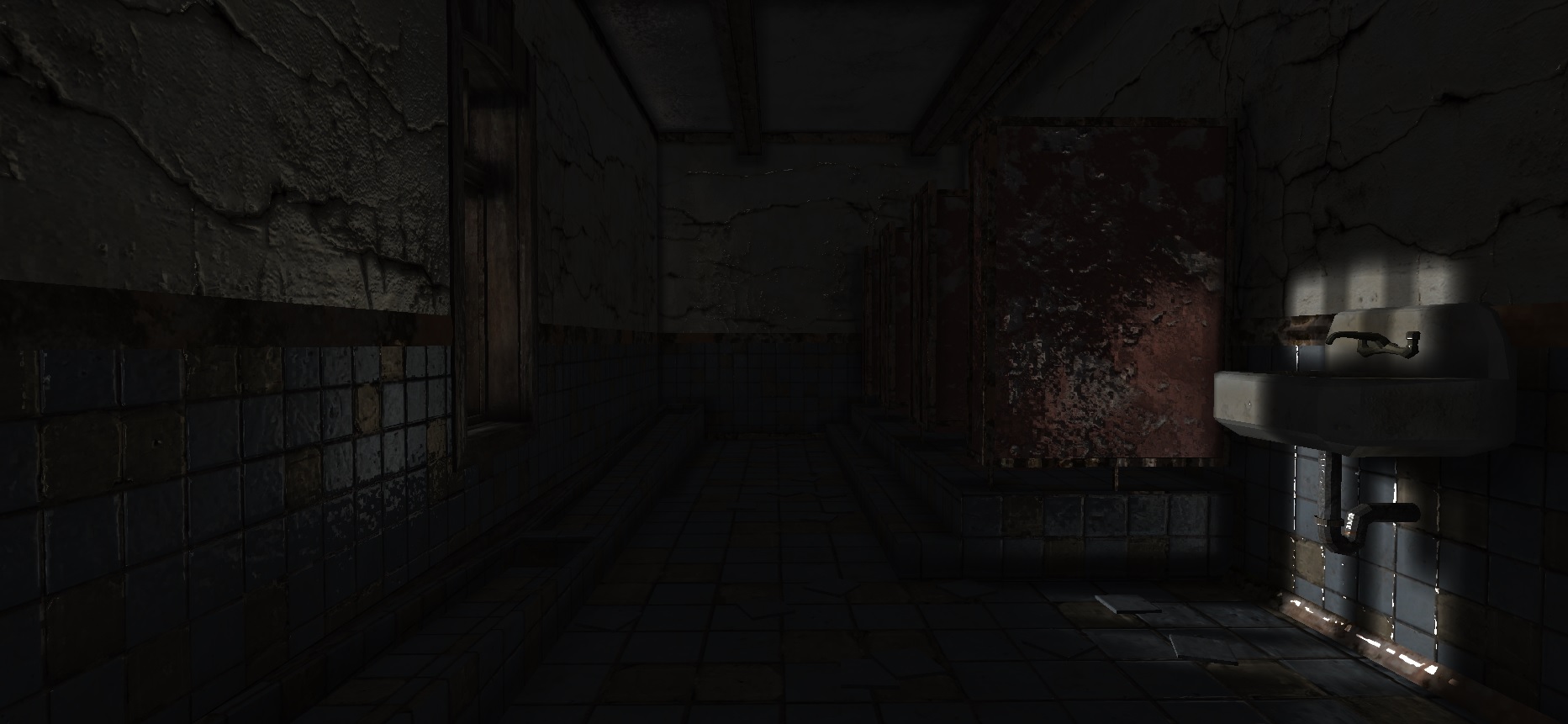

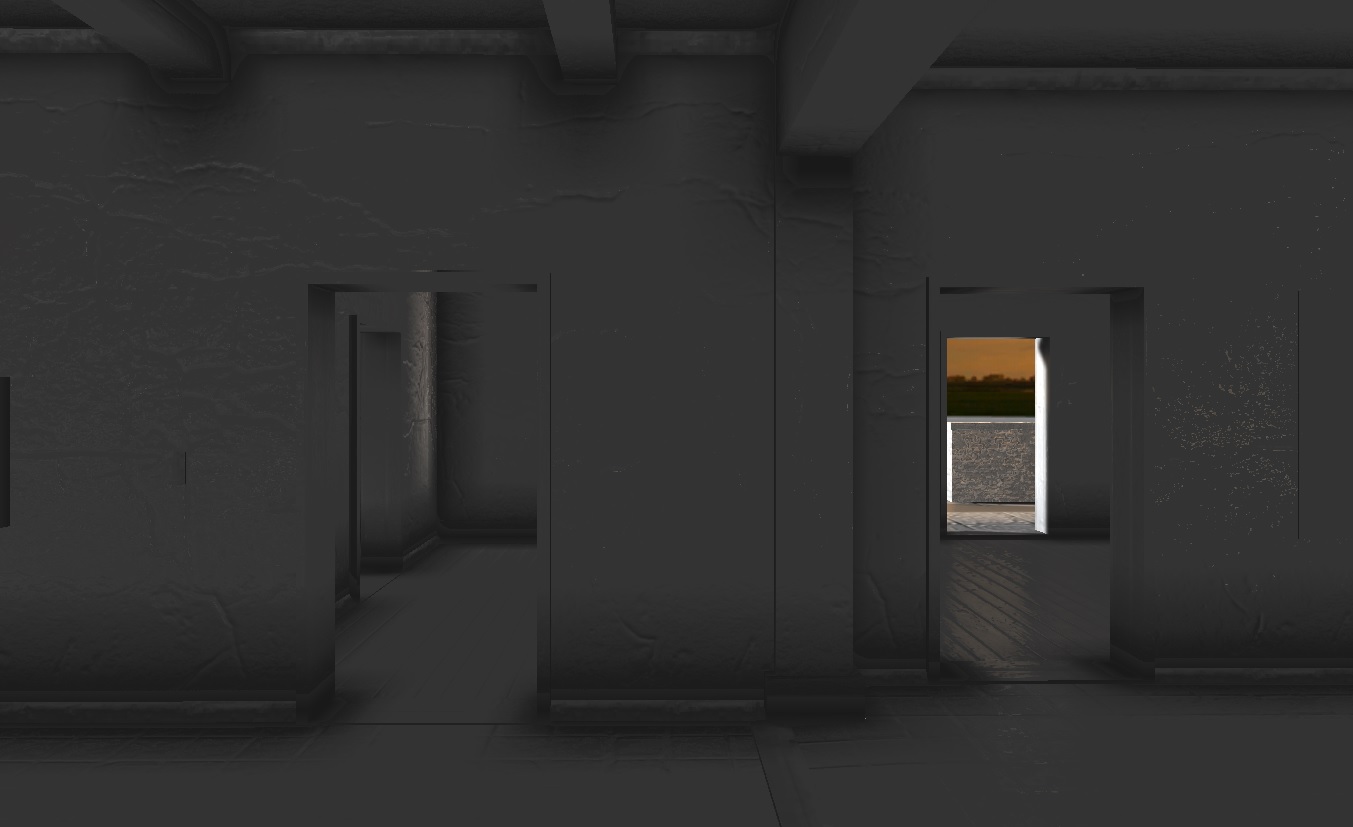

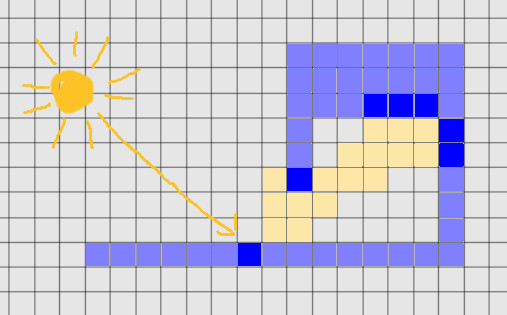

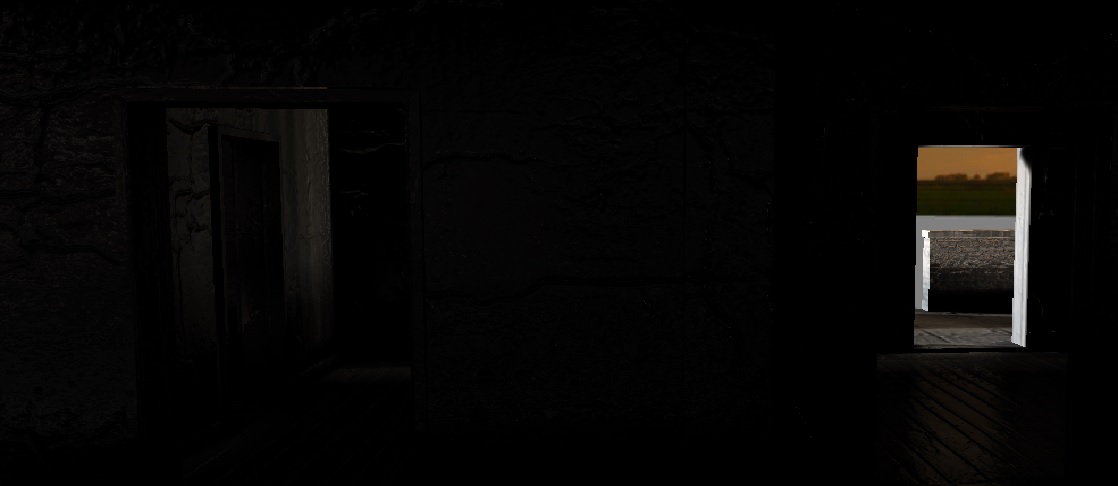
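For anyone wondering where the level-of-detail differences come from with cascades, here is a minimal sketch. It assumes nested axis-aligned cubes centred on the camera, each twice the size of the previous one at the same voxel resolution. That is a common setup but not necessarily exactly mine, and all names are hypothetical.

```python
def select_cascade(point, camera, base_half_extent, num_cascades):
    """Return the index of the finest cascade containing `point`, or None."""
    # Cascades are axis-aligned cubes, so use the largest per-axis distance.
    d = max(abs(p - c) for p, c in zip(point, camera))
    for level in range(num_cascades):
        if d <= base_half_extent * (2 ** level):
            return level
    return None  # outside the coarsest cascade


def voxel_size(level, base_half_extent, resolution):
    """World-space edge length of one voxel in the given cascade."""
    return 2.0 * base_half_extent * (2 ** level) / resolution


cam = (0.0, 0.0, 0.0)
print(select_cascade((3.0, 0.0, 0.0), cam, 8.0, 3))   # 0
print(select_cascade((10.0, 0.0, 0.0), cam, 8.0, 3))  # 1
print(voxel_size(0, 8.0, 64), voxel_size(1, 8.0, 64)) # 0.25 0.5
```

The voxel size doubles at every cascade border, and that jump is the LOD difference you have to hide, for example by blending between neighbouring cascades near the border.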

Side note: One of the proposals I made and tested in my current game project (which will probably never get finished, as it is my playground) was to completely separate interior cells from exterior cells, much like you can see in The Elder Scrolls series. Exterior cells would then use either cascaded vxgi or a completely different approach, while interiors would use grid(s) hand-placed by an artist (vxgi tends to beat other solutions for interiors in terms of quality and, mainly, smoothness). This has the huge advantage that you see the in-game result directly within the editor, so anyone creating or editing an area can specify whether the grid needs to be sparser or denser, etc. It also solves the major problem of light bleeding between exterior and interior!

There are a few major disadvantages though. First, the definition of exteriors and of light coming from the exterior into the interior (I would need a properly clever way to do this). Second, and this is much harder to cope with and unrelated to GI itself, it introduces a loading screen between interior and exterior (with all the impacts of that on gameplay, AI, time of day, etc.).
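A rough sketch of the interior/exterior split described above, just to show the data layout I have in mind. All class and function names are hypothetical, and the rule "the densest grid containing the point wins" is an assumption for illustration, not something I have settled on.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GIGrid:
    """Hypothetical artist-placed voxel grid: an axis-aligned box plus a resolution."""
    min_corner: Vec3
    max_corner: Vec3
    resolution: int  # voxels along the longest axis

    def contains(self, p: Vec3) -> bool:
        return all(lo <= x <= hi
                   for lo, x, hi in zip(self.min_corner, p, self.max_corner))

    def voxel_size(self) -> float:
        longest = max(hi - lo for lo, hi in zip(self.min_corner, self.max_corner))
        return longest / self.resolution


@dataclass
class Cell:
    name: str
    is_interior: bool
    grids: List[GIGrid] = field(default_factory=list)


def pick_grid(cell: Cell, position: Vec3) -> Optional[GIGrid]:
    """Return the densest grid of this cell containing `position`, else None.

    Only the current cell's grids are consulted, so lighting from another
    cell cannot bleed in through a shared wall."""
    if not cell.is_interior:
        return None  # exterior: the caller falls back to cascades or another technique
    candidates = [g for g in cell.grids if g.contains(position)]
    return min(candidates, key=GIGrid.voxel_size, default=None)


hall = GIGrid((0.0, 0.0, 0.0), (16.0, 4.0, 16.0), 64)
altar = GIGrid((6.0, 0.0, 6.0), (10.0, 4.0, 10.0), 64)  # denser grid where detail matters
temple = Cell("temple", True, [hall, altar])
print(pick_grid(temple, (8.0, 1.0, 8.0)) is altar)  # True
```

The artist controls density simply by placing a smaller grid with the same resolution over the area that needs it. How light from the exterior gets into the interior is not covered here, which is exactly the first disadvantage mentioned above.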