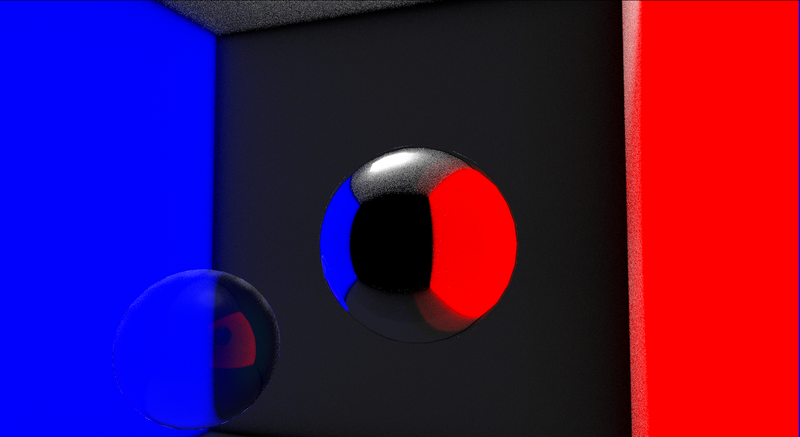

I've been playing around with Vulkan ray tracing. Here's an example render:

Now, I want better lighting and shadows, so I'm going to try path tracing.

Does anyone have any of their own path tracing code in GLSL?

I found this project that may be useful: https://github.com/knightcrawler25/GLSL-PathTracer

Admin for GameDev.net.

I can share a ShaderToy example, which is GLSL-specific: https://www.shadertoy.com/view/XdXyRS - it was used as the basis of a larger piece of software I contributed to, but I can't disclose that one.

Other than that - it's mostly OpenCL and CUDA in the world of ray tracing (unless you use the new DirectX Ray Tracing or Vulkan RT, where you can find some GLSL or HLSL examples).

I do have another small game GRDOOM, released here for one competition (another compo could bring a bit of fresh wind for GameDev.net - but I'm not sure how much @khawk and others are busy in the real life), which ran on a fairly full-featured OpenCL ray tracer with path tracing implemented in it. It is OpenCL rather than GLSL - https://www.gamedev.net/projects/835-doom/ - but I can provide some sources for it if you want.

Other than that - I do have multiple path tracers that were either used in the past or are in use now. Sadly I can't provide full source code for any of them without talking to other parties (I could release some fragments) … and those are almost exclusively in CUDA, HLSL or good old C++ (CPU only for that one).

Let me know if it helps a bit, also feel free to spam questions if you have any.

My current blog on programming, linux and stuff - http://gameprogrammerdiary.blogspot.com

Vilem Otte said:

I do have another small game GRDOOM

Curious, have you ever tried running that on a mobile platform? Not expecting anything groundbreaking, but I'm curious what the performance would be like (e.g. Adreno GPU).

Vilem Otte said:

another compo could bring a bit of fresh wind for GameDev.net - but I'm not sure how much @khawk and others are busy in the real life

I know. 🙂 I'll think about this.

khawk said:

Curious, have you ever tried running that on a mobile platform? Not expecting anything groundbreaking, but I'm curious what the performance would be like (e.g. Adreno GPU).

Sadly I haven't. I've used SFML for display, and I'm not sure how much of that logic is portable to mobile platforms … but the whole rendering library (including acceleration structure, ray generation, etc.) is in C/C++ and uses OpenCL. It might be possible to build and run it with a bit of effort. So, as of now, I have no idea how efficient it would be on an Adreno GPU.

Definitely easier than what I do in D3D12 now.

khawk said:

I know. 🙂 I'll think about this.

Glad to hear that. If there is any way I could assist (although I don't really have much experience running such events, only participating in them) - let me know - I'd gladly help.

taby said:

It’s coming along, sort of.

There is something wrong though - the left wall should be bright blue and the right one bright red, unless you're trying to ignore primary ray results and only gather secondary light results.

I've got something working. It's not a final implementation, and the result is a bit dark.

Any suggestions?

The relevant code in the raygen shader is:

    float trace_path(const int iterations, const vec3 origin, const vec3 direction, out vec3 hitPos, const int channel)
    {
        float ret_colour = 0;

        vec3 o = origin;
        vec3 d = direction;

        const int samples = 10;

        for(int s = 0; s < samples; s++)
        {
            float local_colour = 1;

            for(int i = 0; i < iterations; i++)
            {
                float tmin = 0.001;
                float tmax = 1000.0;

                traceRayEXT(topLevelAS, gl_RayFlagsOpaqueEXT, 0xff, 0, 0, 0, o.xyz, tmin, d.xyz, tmax, 0);

                if(channel == red_channel)
                    local_colour *= rayPayload.color.r;
                else if(channel == green_channel)
                    local_colour *= rayPayload.color.g;
                else
                    local_colour *= rayPayload.color.b;

                if(rayPayload.color.r == 1.0 && rayPayload.color.g == 1.0 && rayPayload.color.b == 1.0)
                {
                    ret_colour = 1;
                    return ret_colour;
                }

                if(rayPayload.distance == -1)
                {
                    ret_colour += local_colour;
                    return ret_colour;
                }

                hitPos = o + d * rayPayload.distance;

                o = hitPos + direction*0.01;

                vec3 rdir = RandomUnitVector(prng_state);

                // Stick to the correct hemisphere
                if(dot(rdir, normalize(rayPayload.normal)) < 0.0)
                    rdir = -rdir;

                d = rdir;
            }

            o = origin;
            d = direction;

            ret_colour += local_colour;
        }

        return sqrt(ret_colour);// samples;
    }

The full source is at:

https://github.com/sjhalayka/cornell_box_textured

From a brief reading of the shader:

`o = hitPos + direction*0.01;`? You are biasing the next ray's origin along the first ray's direction, which doesn't make sense. You can already avoid ray-surface self-intersections with the tmin value. If you must bias manually, offset along the normal vector instead.
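
A minimal sketch of the normal-based bias, in case it helps. The helper name `offset_along_normal` and the `bias` value are my own assumptions, not something from the posted shader. It also assumes the stored normal points to the side the bounce ray leaves from, which is the same assumption the hemisphere flip in the posted loop makes.

```glsl
// Hypothetical helper (not part of the posted shader): nudge the next ray's
// origin off the surface along the surface normal, rather than along the
// camera ray's direction.
vec3 offset_along_normal(const vec3 hitPos, const vec3 surfaceNormal)
{
    // Assumed bias; it should be small relative to the scene's scale.
    const float bias = 0.001;

    // Normalize in case the payload normal is not unit length.
    vec3 n = normalize(surfaceNormal);

    return hitPos + n * bias;
}
```

In the posted loop this would stand in for the `o = hitPos + direction*0.01;` line, for example `o = offset_along_normal(hitPos, rayPayload.normal);`. Since tmin is already 0.001 there, the manual bias may turn out to be unnecessary.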