I'm not part of the trend toward retro. The Super Bowl, for example, had a “Tron” theme and roller skates. It's like an appreciation for the proverbial “good taste” in those old things.

But in my case, Shinobi by Sega was around when I was 9. I had no idea what an arcade was. It seemed like I lived under a rock. I played Pac-Man at the convenience store back then, and sometimes Pole Position in a store where the clerk later got shot. When I ate out with family at ‘the loop’, I occasionally played The Legend of Kage.

I was always enamored of the palette and the painted quality of games like Shinobi and The Legend of Kage.

So these days one could be fascinated by the palette and painted qualities of those old games and render them much more easily with modern conveniences, or so you say, and I agree. I've long known that those images are easily made with a paint program like Krita.

But when we get to the hardware, yes, I am interested in the old ASICs, VLSI, and the idea of hardware acceleration. Those were the hallmarks of my time.

We still have ASICs now, really good ones, and I am wondering how the old ones worked. One way to find out is to get a white paper on some 1990s ASIC and look through books on hardware from that time.

Hardware accelerators still exist, and for GPUs with their special multi-core architecture, I hear CUDA is like assembly code, which I like and would prefer over using DirectX. But compared with an engine, I do like the idea of DirectX programming. I like how DirectX is just rendering and not all the other stuff in a game engine.

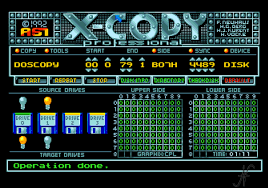

My first computer was an 80s machine, the C64, but 16-bit CPUs like the 68000 in the Amiga and the Intel 80286 in the PC were just going into obsolescence, and the 80386, being more than 5 years old, had become the bargain machine. My second computer was a 16 MHz 80386SX running DOS 5.0. I played Commander Keen and Hugo's House of Horrors. I always wondered how the games were made but didn't get an idea until about 8 years later, in my 20s.

But a lot happened between 20 and 40, and I didn't decide to try again until recently. I did go to school around age 30 for electrical construction. Some of the classes I took were in advanced math, programming, and electronics.