MagnusWootton said:

Yeh, about that… if I make the light too small none of the rays hit it!

Usually you handle this with a ‘shadow ray’ or ‘next event estimation’ in path tracing, iirc.

It can be as simple as this: pick one (or N) random lights (you only have one), trace a ray from the shading point to the light, and if the light is visible, calculate its reflection.

In path tracing you usually do this at every vertex of the path. Even though it doubles the ray count, it's much faster in the end, because we need fewer samples to get the same estimate.
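A minimal sketch of that shadow ray step, assuming a single point light, a Lambert surface, and spheres as the only occluders. Your sun light has a radius, so in practice you would pick a random point on its surface instead of a fixed position. `direct_light` and `segment_blocked` are hypothetical names for illustration.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def segment_blocked(origin, target, spheres, eps=1e-4):
    """Shadow ray: True if any sphere (center, radius) cuts the segment origin -> target."""
    d = sub(target, origin)
    dist = math.sqrt(dot(d, d))
    d = (d[0] / dist, d[1] / dist, d[2] / dist)
    for center, radius in spheres:
        oc = sub(origin, center)
        b = dot(oc, d)
        c = dot(oc, oc) - radius * radius
        disc = b * b - c
        if disc < 0.0:
            continue  # ray misses this sphere entirely
        sq = math.sqrt(disc)
        for t in (-b - sq, -b + sq):
            if eps < t < dist - eps:  # hit lies between the shading point and the light
                return True
    return False

def direct_light(p, n, albedo, light_pos, light_intensity, spheres):
    """Next event estimation for one point light and a Lambert surface at p with normal n."""
    to_light = sub(light_pos, p)
    dist2 = dot(to_light, to_light)
    dist = math.sqrt(dist2)
    wi = (to_light[0] / dist, to_light[1] / dist, to_light[2] / dist)
    cos_theta = dot(n, wi)
    if cos_theta <= 0.0:
        return 0.0  # light is behind the surface
    if segment_blocked(p, light_pos, spheres):
        return 0.0  # shadow ray hit an occluder
    # Lambert BRDF = albedo / pi; a point light falls off with 1 / distance^2.
    return (albedo / math.pi) * light_intensity * cos_theta / dist2
```

At each path vertex you add this direct term, then continue the path with a scattered ray as before.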

MagnusWootton said:

I could add a sky to it, but I'm digging the dead black at the moment, and its helping me debug the scattering only having a basic sun type light I can vary the radius of.

For debugging you can always turn the sky off. But right now you can't turn it on, so your impression of the materials' reflections is very limited.

MagnusWootton said:

I'm having trouble with the distribution of the scatter rays over the sphere, I'm trying different depths and how many rays at once, and If I change it, it changes the way it looks.

I wonder why you mention ray length and sample counts.

What actually matters for modeling a BRDF is the distribution of ray directions.

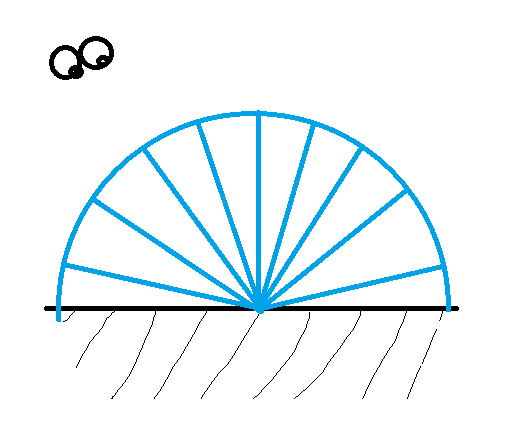

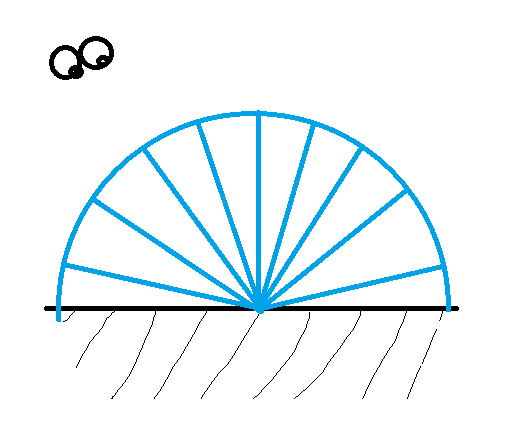

E.g., for a Lambert diffuse material, the distribution is spread over the whole hemisphere, with a higher density near the normal direction and a lower density toward the tangent plane, proportional to the cosine of the angle to the normal.

The blue rays would be a proper distribution of rays. So if we sum them all up, we get a good estimate.
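One common way to generate such a cosine-weighted distribution is to pick a uniform point on the unit disk and project it up onto the hemisphere (Malley's method). This is a sketch in a local frame where +z is the surface normal; rotating the result into the normal's world-space frame is left out.

```python
import math
import random

def sample_cosine_hemisphere(rng):
    """Return a unit direction (x, y, z) with +z as the normal.
    pdf(w) = cos(theta) / pi: dense near the normal, sparse near the tangent plane."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)            # sqrt gives a uniform point on the unit disk
    phi = 2.0 * math.pi * u2
    x, y = r * math.cos(phi), r * math.sin(phi)
    z = math.sqrt(max(0.0, 1.0 - u1))  # lift the disk point onto the hemisphere
    return (x, y, z)
```

A quick sanity check: for this distribution the average of `z` (the cosine to the normal) should approach 2/3.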

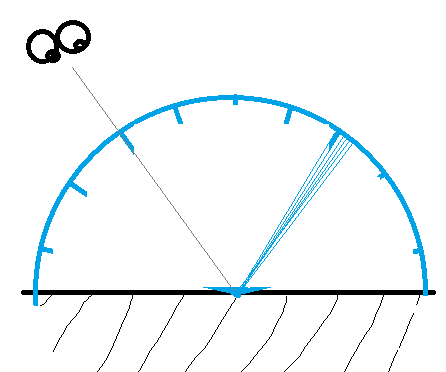

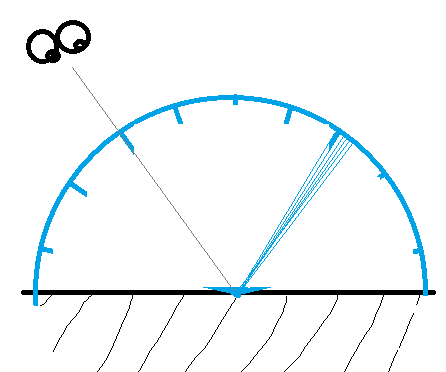

For the diffuse material it does not matter where the eye is. That changes for a mirror material; here I made it a little rough, so it shows blurry reflections like a cooking pot:

Now the rays are tightly focused, all close to the mirror reflection of the eye vector.

Again, summing those rays up will give us a good estimate.
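A sketch of such a focused distribution, using a Phong-style lobe around the reflection vector as a stand-in for a rough mirror (an assumption: this is one simple lobe, not necessarily the one in my drawings). The sampled pdf is (exponent + 1) / (2π) · cos^exponent of the angle to the lobe axis; a higher exponent means a sharper reflection. Samples that end up below the surface should be discarded or given zero weight.

```python
import math
import random

def normalize(v):
    l = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / l, v[1] / l, v[2] / l)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(d, n):
    """Mirror the incoming direction d (pointing toward the surface) about the normal n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

def sample_phong_lobe(axis, exponent, rng):
    """Sample a unit direction around `axis` with pdf proportional to cos(alpha)^exponent."""
    cos_a = rng.random() ** (1.0 / (exponent + 1.0))  # inverse CDF of the lobe
    sin_a = math.sqrt(max(0.0, 1.0 - cos_a * cos_a))
    phi = 2.0 * math.pi * rng.random()
    # Build an orthonormal basis (t, b, axis) around the lobe axis.
    helper = (1.0, 0.0, 0.0) if abs(axis[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, axis))
    b = cross(axis, t)
    x, y = sin_a * math.cos(phi), sin_a * math.sin(phi)
    return tuple(x * t[i] + y * b[i] + cos_a * axis[i] for i in range(3))
```

For example, with the view direction `normalize((1, 0, -1))` hitting a floor with normal `(0, 0, 1)`, `reflect` gives the axis `(1, 0, 1) / √2`, and an exponent around 1000 keeps practically every sample within a few degrees of it.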

So the hard part of modeling a BRDF is generating such a good distribution of rays.

Real-world materials are complicated, and you can imagine how the Fresnel effect moves a material from one of those two extremes toward the other.
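The Fresnel effect makes reflectance rise toward 1 at grazing angles, so even a dull-looking surface turns mirror-like when viewed edge-on. A common cheap model is Schlick's approximation, sketched below; `blend_weights` is a hypothetical helper showing how it could split energy between a mirror-like lobe and a diffuse lobe.

```python
def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation of Fresnel reflectance.
    cos_theta: cosine between view direction and normal (1 = head-on, 0 = grazing).
    f0: reflectance at normal incidence (about 0.04 for common dielectrics)."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

def blend_weights(cos_theta, f0=0.04):
    """Hypothetical helper: (specular share, diffuse share) of the reflected energy."""
    f = fresnel_schlick(cos_theta, f0)
    return f, 1.0 - f
```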

So maybe there is an easier way?

Yes. We can use a uniform distribution of rays and then calculate a weight depending on how well each ray aligns with the distribution defined by the BRDF. This is much easier.

But there is a problem. In my drawn examples, those ‘good’ rays all have a weight of one, so they are perfect samples. (This is called ‘importance sampling’.)

But if we use a uniform distribution of rays to model the mirror material, almost all our rays will have a weight of zero, so it was a waste to trace them.
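To make the weights concrete, here is a sketch for the Lambert case under a hypothetical constant white sky, where the exact answer is simply the albedo. Each ray gets the weight BRDF · cos / pdf. With uniform hemisphere directions that weight varies from ray to ray (noise); with cosine-weighted directions it is exactly one for every ray. For a mirror, the uniform weights would be near zero almost everywhere, which is the waste described above.

```python
import math
import random

ALBEDO = 1.0
SKY = 1.0  # hypothetical constant white environment; exact answer = ALBEDO * SKY

def estimate_uniform(n, rng):
    """Uniform hemisphere directions, each weighted by brdf * cos / pdf."""
    total = 0.0
    for _ in range(n):
        cos_t = rng.random()  # for uniform hemisphere directions, cos(theta) ~ U[0, 1)
        pdf = 1.0 / (2.0 * math.pi)
        weight = (ALBEDO / math.pi) * cos_t / pdf  # = 2 * cos_t, differs per ray
        total += weight * SKY
    return total / n

def estimate_cosine(n, rng):
    """Cosine-weighted directions: pdf = cos / pi cancels the Lambert term exactly."""
    total = 0.0
    for _ in range(n):
        cos_t = math.sqrt(1.0 - rng.random())  # cos(theta) of a cosine-weighted sample
        pdf = cos_t / math.pi
        weight = (ALBEDO / math.pi) * cos_t / pdf  # = ALBEDO for every ray
        total += weight * SKY
    return total / n
```

Both estimators converge to the same value, but only the uniform one is noisy: run each a few times with a small `n` and compare how much the results jump around.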

What people do in practice is usually a mix of the two: they choose a distribution that is easy to sample analytically and that closely resembles the BRDF, then weight each ray to correct for the remaining mismatch.
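A sketch of that compromise, assuming a hypothetical integrand cos²θ as a stand-in for "BRDF times cosine" (exact hemisphere integral 2π/3). Cosine-weighted sampling is not a perfect match for it, so the weights are not all one, but they vary far less than with uniform sampling: analytically the per-ray variance drops from 16π²/45 ≈ 3.5 to π²/18 ≈ 0.55.

```python
import math
import random

def lobe(cos_t):
    # Hypothetical stand-in for "BRDF * cosine"; its hemisphere integral is 2*pi/3.
    return cos_t * cos_t

def weights_uniform(n, rng):
    """Per-ray weights lobe / pdf for uniform hemisphere sampling (pdf = 1 / 2pi)."""
    out = []
    for _ in range(n):
        cos_t = rng.random()  # uniform hemisphere: cos(theta) ~ U[0, 1)
        out.append(lobe(cos_t) / (1.0 / (2.0 * math.pi)))
    return out

def weights_cosine(n, rng):
    """Per-ray weights for cosine-weighted sampling: easy to sample, similar shape."""
    out = []
    for _ in range(n):
        cos_t = math.sqrt(1.0 - rng.random())  # never exactly zero, so pdf > 0
        out.append(lobe(cos_t) / (cos_t / math.pi))  # = pi * cos_t
    return out

def mean_var(xs):
    """Sample mean (the estimate) and variance (how noisy a single ray is)."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / len(xs)
```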

Sorry if you knew all this already. If not: that's how you get the most out of a limited number of rays.

MagnusWootton said:

so Ive pretty much hit the performance barrier now

You do not care about performance while you learn how ray tracing works. Or at least you shouldn't.

The primary problem is uncertainty. You're never sure if your stuff is correct.

So it helps a lot to turn real-time off and instead accumulate a whopping 4000 samples per pixel. This gives you a noise-free image, good enough to draw conclusions from and to compare against reference images.
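A minimal sketch of that progressive accumulation, assuming one grayscale value per pixel and a hypothetical renderer that hands you one noisy frame (one sample per pixel) at a time. The displayed image is just the running sum divided by the frame count.

```python
class Accumulator:
    """Per-pixel running average for progressive, non-real-time rendering."""

    def __init__(self, width, height):
        self.count = 0
        self.sum = [[0.0] * width for _ in range(height)]

    def add_frame(self, frame):
        """Add one noisy frame (a list of rows of floats) to the running sum."""
        self.count += 1
        for y, row in enumerate(frame):
            acc = self.sum[y]
            for x, value in enumerate(row):
                acc[x] += value

    def image(self):
        """Current converged estimate; noise shrinks roughly with 1 / sqrt(count)."""
        n = max(self.count, 1)  # avoid dividing by zero before the first frame
        return [[v / n for v in row] for row in self.sum]
```

You would call `add_frame` 4000 times with fresh random seeds and only then look at `image()`.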

And also set up some environment so you can see any reflections at all. (You're literally fumbling in the dark.)

Then you can learn all this raytracing stuff. Personally, I used a Cornell Box scene to learn about path tracing. After that I tried the PBR standard material, which looks right, but I'm not sure it is. Still, it was fun and interesting, but not real-time. (I did it all on the CPU.)

Performance is the next step, including topics like:

Accepting samples from nearby pixels and past frames, for an orders-of-magnitude speedup. (Denoising)

Being smarter about which ‘random’ light to choose. (ReSTIR)

Integrate some upscaler. Quadruples your performance! \:O/ (But it also reduces your sample count by a factor of 4. Just don't tell anybody…)

Put some little crutches below your GPU to carry the heavy weight.

Jensen will love you for spreading the vision. But then he'll replace you with an AI bot.
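For the first item in that list, the simplest form of reusing samples from past frames is an exponential moving average per pixel, sketched below under the assumption of a static camera. Real denoisers also reproject the history with motion vectors, reject it on disocclusion, and filter spatially across nearby pixels; none of that is shown here.

```python
def temporal_accumulate(history, current, alpha=0.1):
    """Blend the new noisy frame into the history buffer (exponential moving average).
    alpha is the weight of the new frame: smaller alpha = smoother but laggier.
    Assumes a static camera; no reprojection or history rejection."""
    if history is None:
        return [row[:] for row in current]  # first frame: start from the raw samples
    return [[h + alpha * (c - h) for h, c in zip(hrow, crow)]
            for hrow, crow in zip(history, current)]
```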

That's really a lot of topics.

I'm almost happy that DXR is totally useless, since it can't handle LOD, so I don't have to learn all this. Sigh.

hehehe… well, you'll probably get used to my raytracing rants… ; )