First, a brief description of what we're trying to achieve. We're basically aiming for a single-shard MMO that will accommodate all players on a giant planet, say 2000 km in radius. In order to achieve this we will need .... well .... a giant planet. This presents a problem, since the classical way to store worlds is as triangles on disk, and a world this big simply wouldn't fit on a player's local computer. Therefore we won't store world geometry on disk; instead we will define the majority of the terrain with functions and realize them in a voxel engine to get our meshes, using a classic marching algorithm....... well, actually a not-so-classic marching algorithm, since it uses prisms instead of cubes. Our voxel engine will necessarily make use of octrees to allow for the many levels of LOD and the relatively low memory usage we need to achieve this.
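To make "defining terrain with functions" a bit more concrete, here is a minimal sketch of what a sphere function could look like. The names `Vec3`, `SphereDensity` and `CrossesSurface` are made up for illustration and are not the engine's `CDLValuatorSphere`; the sign convention (negative inside, positive outside) is also an assumption.

```cpp
#include <cmath>

// Hypothetical 3D point type, for illustration only.
struct Vec3 { double x, y, z; };

// Signed distance to a sphere centered at the origin.
// Assumed convention: negative inside, zero on the surface, positive outside.
double SphereDensity(const Vec3& p, double radius) {
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z) - radius;
}

// The surface passes somewhere between two sample points when the
// function has a different sign at each of them.
bool CrossesSurface(const Vec3& a, const Vec3& b, double radius) {
    return (SphereDensity(a, radius) < 0.0) != (SphereDensity(b, radius) < 0.0);
}
```

A marching-style mesher only needs to place geometry where such a sign change occurs, which is why nothing has to be stored on disk for this part of the world.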

The work so far......

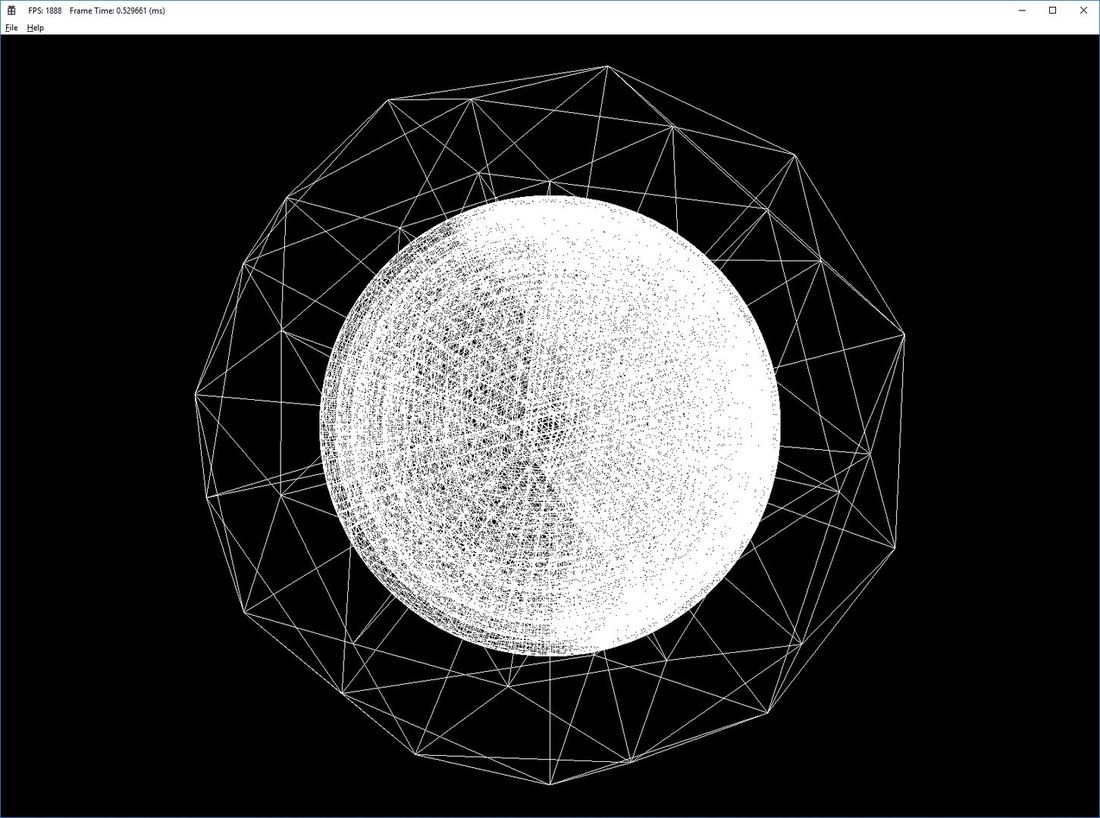

So below we have some test code that generates a sphere using the initial code of our Dragon Lands game engine.....

```cpp
void CDLClient::InitTest2()
{
    // Create virtual heap
    m_pHeap = new(MDL_VHEAP_MAX, MDL_VHEAP_INIT, MDL_VHEAP_HASH_MAX) CDLVHeap();
    CDLVHeap *pHeap = m_pHeap.Heap();

    // Create the universe
    m_pUniverse = new(pHeap) CDLUniverseObject(pHeap);

    // Create the graphics interface
    CDLDXWorldInterface *pInterface = new(pHeap) CDLDXWorldInterface(this);

    // Create spherical world
    double fRad = 4.0;
    CDLValuatorSphere *pNV = new(pHeap) CDLValuatorSphere(fRad);
    CDLSphereObjectView *pSO = new(pHeap) CDLSphereObjectView(pHeap, fRad, 1.1, 10, 7, pNV);
    //pSO->SetViewType(EDLViewType::Voxels);
    pSO->SetGraphicsInterface(pInterface);

    // Create an astral reference from the universe to the world and attach it to the universe
    CDLReferenceAstral *pRef = new(pHeap) CDLReferenceAstral(m_pUniverse(), pSO);
    m_pUniverse->PushReference(pRef);

    // Create the camera
    m_pCamera = new(pHeap) CDLCameraObject(pHeap, FDL_PI/4.0, this->GetWidth(), this->GetHeight());
    m_pCamera->SetGraphicsInterface(pInterface);

    // Create a world tracking reference from the universe to the camera
    double fMinDist = 0.0;
    double fMaxDist = 32.0;
    m_pBoom = new(pHeap) CDLReferenceFollow(m_pUniverse(), m_pCamera(), pSO, 16.0, fMinDist, fMaxDist);
    m_pUniverse->PushReference(m_pBoom());

    // Set zoom speed in the client
    this->SetZoom(fMinDist, fMaxDist, 3.0);

    // Create the god object (build point for LOD calculations)
    m_pGod = new(pHeap) CDLGodObject(pHeap);

    // Create a reference for the god object and attach it to the camera
    CDLReferenceDirected *pGodRef = new(pHeap) CDLReferenceDirected(m_pUniverse(), m_pGod());
    pGodRef->SetPosition(CDLDblPoint(0.0, 0.0, -6.0), CDLDblVector(0.0, 0.0, 1.0), CDLDblVector(0.0, 1.0, 0.0));
    m_pCamera->PushReference(pGodRef);

    // Set the main camera and god object for the universe
    m_pUniverse->SetMainCamera(m_pCamera());
    m_pUniverse->SetMainGod(m_pGod());

    // Load and compile the vertex shader
    CDLUString clVShaderName = L"VS_DLDX_Test.hlsl";
    m_pVertexShader = new(pHeap) CDLDXShaderVertexPC(this, clVShaderName, false, 0, 1);

    // Attach the camera to the vertex shader
    m_pVertexShader->UseConstantBuffer(0, static_cast<CDLDXConstantBuffer *>(m_pCamera->GetViewData()));

    // Create the pixel shader
    CDLUString clPShaderName = L"PS_DLDX_Test.hlsl";
    m_pPixelShader = new(pHeap) CDLDXShaderPixelGeneral(this, clPShaderName, false, 0, 0);

    // Create a rasterizer state and set to wireframe
    m_pRasterizeState = new(pHeap) CDLDXRasterizerState(this);
    m_pRasterizeState->ModifyState().FillMode = D3D11_FILL_WIREFRAME;

    // Initialize the universe from the main camera and build the world from the god object
    m_pUniverse()->InitFromMainCamera();
    m_pUniverse()->WorldUpdateFromMainGod();
}
```

And here's what it generates:

Ok, so it looks pretty basic, but it's actually a lot more complex than it looks.

What it looks like is a subdivided icosahedron. This is only partially true. The icosahedron is extruded to form 20 prisms. Then each of those is subdivided into 8 sub-prisms, its children are subdivided in turn, and so forth. Subdivision only takes place where there is function data (i.e. the function changes sign). This presents a big problem. How do we know where sign changes exist? Well, we don't, so we have to go down the tree and look for them at the resolution we are currently displaying. Normally that would require us to build the trees all the way down just to look for sign changes, but since this is expensive we don't want to do it, so instead we do a "ghost walk". We build a lightweight tree structure which we use to find where data exists. We then use that structure to build the actual tree where voxels are needed.

So I guess the obvious question is why this is any better than simply subdividing an icosahedron and perturbing the vertexes to get mountains, oceans and the like. The answer is that this system will support full-on 3D geometry. You can have caves, tunnels, overhangs, really whatever you can come up with. It's not limited to height maps.
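The ghost walk described above can be sketched roughly as follows. This is not our engine code: it uses plain cubes instead of prisms, the names `GhostCell`, `MayContainSurface` and `GhostWalk` are hypothetical, and it swaps in a conservative distance test that is only valid if the function is a true signed distance function (an assumption). The point is just to show the idea of walking down to the target depth while recording only lightweight cells.

```cpp
#include <cmath>
#include <functional>
#include <vector>

// Hypothetical 3D point type, for illustration only.
struct Vec3 { double x, y, z; };

// Lightweight record of a cell that may contain the surface.
struct GhostCell { Vec3 center; double halfSize; int depth; };

// Conservative test, valid only when f is a true signed distance function
// (assumption): the surface can reach into a cube only if |f(center)| is
// no larger than the distance from the center to a corner.
bool MayContainSurface(const std::function<double(const Vec3&)>& f,
                       const Vec3& center, double halfSize) {
    const double halfDiagonal = halfSize * std::sqrt(3.0);
    return std::fabs(f(center)) <= halfDiagonal;
}

// Walk down to maxDepth without building real tree nodes; only record the
// cells at maxDepth where voxels would be needed.
void GhostWalk(const std::function<double(const Vec3&)>& f,
               const Vec3& center, double halfSize, int depth, int maxDepth,
               std::vector<GhostCell>& out) {
    if (!MayContainSurface(f, center, halfSize)) return;  // prune empty space
    if (depth == maxDepth) {
        out.push_back({center, halfSize, depth});
        return;
    }
    const double h = halfSize * 0.5;
    for (int i = 0; i < 8; ++i) {  // visit the 8 children of this cell
        const Vec3 child{center.x + ((i & 1) ? h : -h),
                         center.y + ((i & 2) ? h : -h),
                         center.z + ((i & 4) ? h : -h)};
        GhostWalk(f, child, h, depth + 1, maxDepth, out);
    }
}
```

The recorded cells can then drive construction of the real tree, so the heavy nodes are only ever allocated where the surface might actually be.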

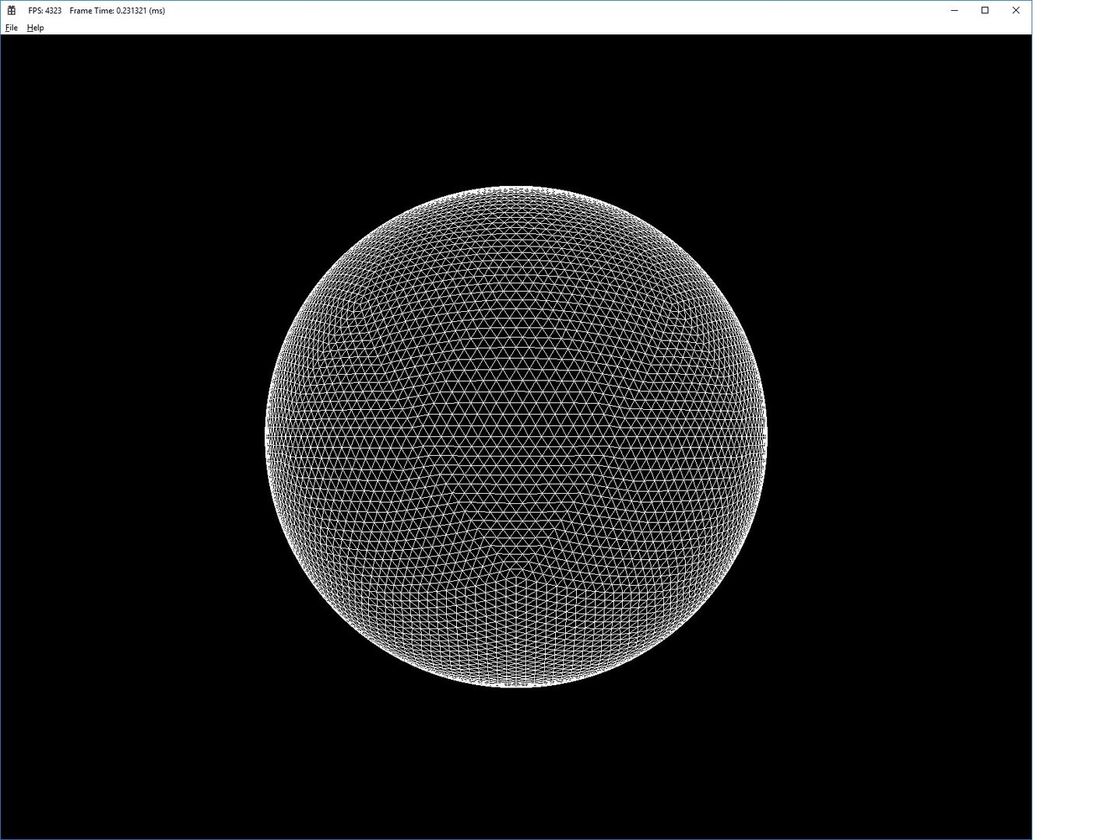

Here's what our octree (or rather 20 octrees) looks like:

Ok it's a bit odd looking but so far so good.

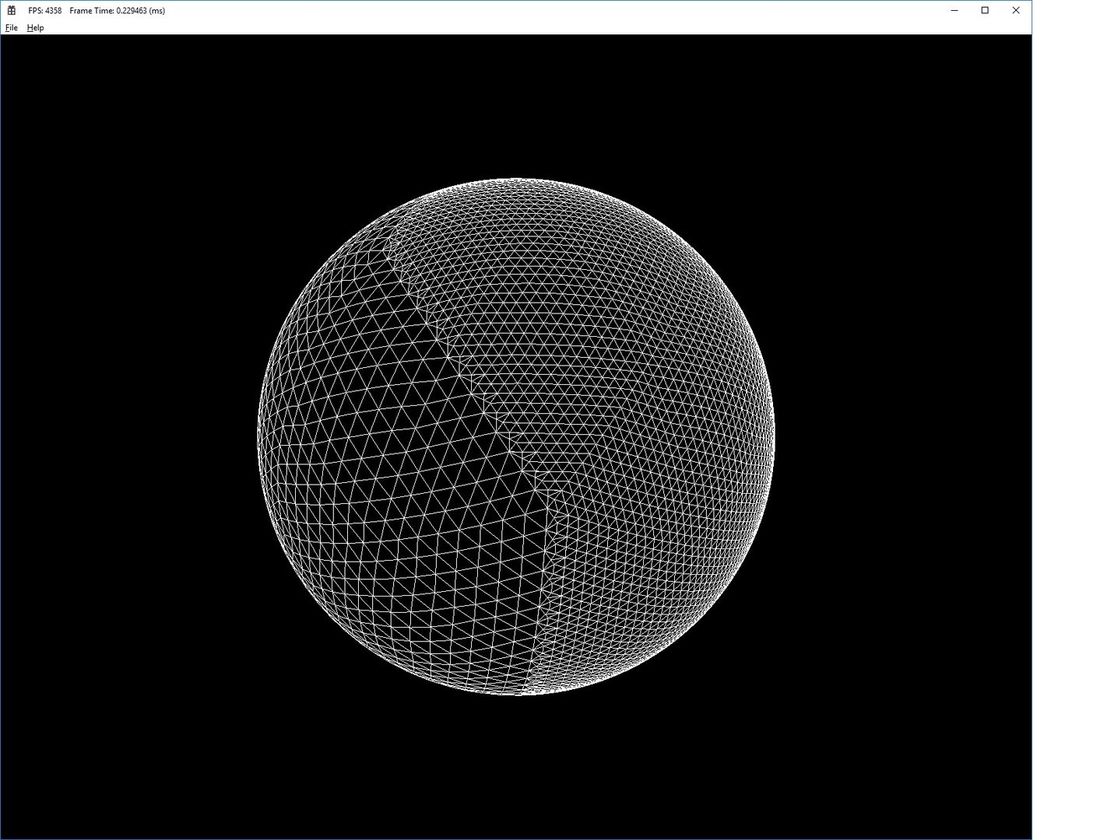

Now we come to the dreaded voxel LOD and .....gulp .... chunking. .... So, as would be expected, we need a higher density of voxels close to the camera and a lower density farther away. We implement this by defining chunks. Each chunk is a prism somewhere in the tree(s) and contains sub-prism voxels. Chunks farther away from the camera are larger and those closer to the camera are smaller, the same as with the voxels they contain. In the following picture we set the god object (the object that determines our point for LOD calculation) to a fixed point so we can turn the world around and look at the chunk transitions. Normally, however, we would attach it to the camera or the player's character so all chunking and LOD would be based on a player's perspective.
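One simple way to turn "closer means smaller chunks" into code is a distance rule like the sketch below. The function name, the `splitFactor` parameter and the rule itself (split while the god point is closer than `splitFactor` times the chunk's edge length) are assumptions for illustration, not necessarily what our engine does.

```cpp
// Hypothetical rule: starting from the root chunk, keep splitting while the
// god point is closer than splitFactor times the current chunk edge length.
// Returns the tree depth at which chunks at this distance end up.
int ChunkDepthForDistance(double distance, double rootSize,
                          double splitFactor, int maxDepth) {
    int depth = 0;
    double size = rootSize;
    while (depth < maxDepth && distance < splitFactor * size) {
        size *= 0.5;  // each split halves the chunk edge length
        ++depth;
    }
    return depth;
}
```

With a root size of 8, a split factor of 2 and a maximum depth of 5, a chunk 10 units from the god point ends up at depth 1, while one 3 units away ends up at depth 3.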

As you can see, there are transitions from higher-level chunks to lower-level chunks and their corresponding voxels. In order to do this efficiently we divide the voxels in each chunk into two groups, center voxels and border voxels, so each chunk can have two meshes that it builds. We do this so that if one chunk is subdivided into smaller chunks, we only need to update the border mesh of neighboring chunks, which means less work to be done and less data to send back down to the GPU. Actual voxel-level transitions are done by special algorithms that handle each possible case. The whole transition code was probably the hardest thing to implement, since there are a lot of possible cases.
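The center/border split can be illustrated with a small sketch. It assumes a cubic chunk with `n` voxels per edge rather than our prisms, and the names `VoxelGroup`, `ClassifyVoxel` and `CountBorderVoxels` are hypothetical.

```cpp
// Which of the chunk's two meshes a voxel contributes to.
enum class VoxelGroup { Center, Border };

// Assumes a cubic chunk with n voxels per edge, indexed 0..n-1 on each axis.
// A voxel is a border voxel when it touches any face of the chunk.
VoxelGroup ClassifyVoxel(int i, int j, int k, int n) {
    auto onFace = [n](int v) { return v == 0 || v == n - 1; };
    return (onFace(i) || onFace(j) || onFace(k)) ? VoxelGroup::Border
                                                 : VoxelGroup::Center;
}

// Count how many voxels of an n*n*n chunk land in the border group.
int CountBorderVoxels(int n) {
    int count = 0;
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < n; ++j)
            for (int k = 0; k < n; ++k)
                if (ClassifyVoxel(i, j, k, n) == VoxelGroup::Border) ++count;
    return count;
}
```

For an 8x8x8 chunk this puts 296 of the 512 voxels in the border group and 216 in the center group, so a neighbor changing its LOD leaves the center mesh untouched.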

What else.....

Oh yeah, normals....... You can't see it in wireframe, but we do generate normals. We basically walk around the mesh vertexes in the standard way and add up the normals of the faces. There are a couple of complexities, however. First, we have to account for vertexes along chunk boundaries, and second, we wanted some way to support ridge lines, like you might find in a mountain range. So each edge in a mesh can be set to sharp, which causes duplicate vertexes with different normals to be generated.
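For reference, the "standard way" of adding up face normals looks roughly like the sketch below. It covers only the smooth case on an indexed triangle mesh; chunk-boundary vertexes and sharp edges are left out, and `Vec3`, `Cross` and `ComputeVertexNormals` are hypothetical names, not our engine's.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 3D vector type, for illustration only.
struct Vec3 { double x, y, z; };

static Vec3 Sub(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

static Vec3 Cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Smooth per-vertex normals: sum the (area-weighted) face normal of every
// triangle touching a vertex, then normalize the sum.
std::vector<Vec3> ComputeVertexNormals(const std::vector<Vec3>& verts,
                                       const std::vector<std::array<int, 3>>& tris) {
    std::vector<Vec3> normals(verts.size(), Vec3{0.0, 0.0, 0.0});
    for (const auto& t : tris) {
        // The cross product's length is twice the triangle area, so larger
        // faces contribute more to the sum.
        const Vec3 n = Cross(Sub(verts[t[1]], verts[t[0]]),
                             Sub(verts[t[2]], verts[t[0]]));
        for (int idx : t) {
            normals[idx].x += n.x;
            normals[idx].y += n.y;
            normals[idx].z += n.z;
        }
    }
    for (std::size_t i = 0; i < normals.size(); ++i) {
        Vec3& n = normals[i];
        const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        if (len > 0.0) { n.x /= len; n.y /= len; n.z /= len; }  // skip unused vertexes
    }
    return normals;
}
```

A sharp edge would then be handled by giving the faces on either side of it their own copies of the shared vertexes, so their normals are accumulated separately.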

Guess that's it for now. We'll try to post more stuff when we have simplex noise in and can generate mountains and such. Also we have some ideas for trees and houses but we'll get to that later.

Sounds like an ambitious project!