I am using Sascha Willems' code ‘raytracingshadows’:

https://github.com/SaschaWillems/Vulkan/tree/master/examples/raytracingshadows

https://github.com/SaschaWillems/Vulkan/tree/master/data/shaders/glsl/raytracingshadows

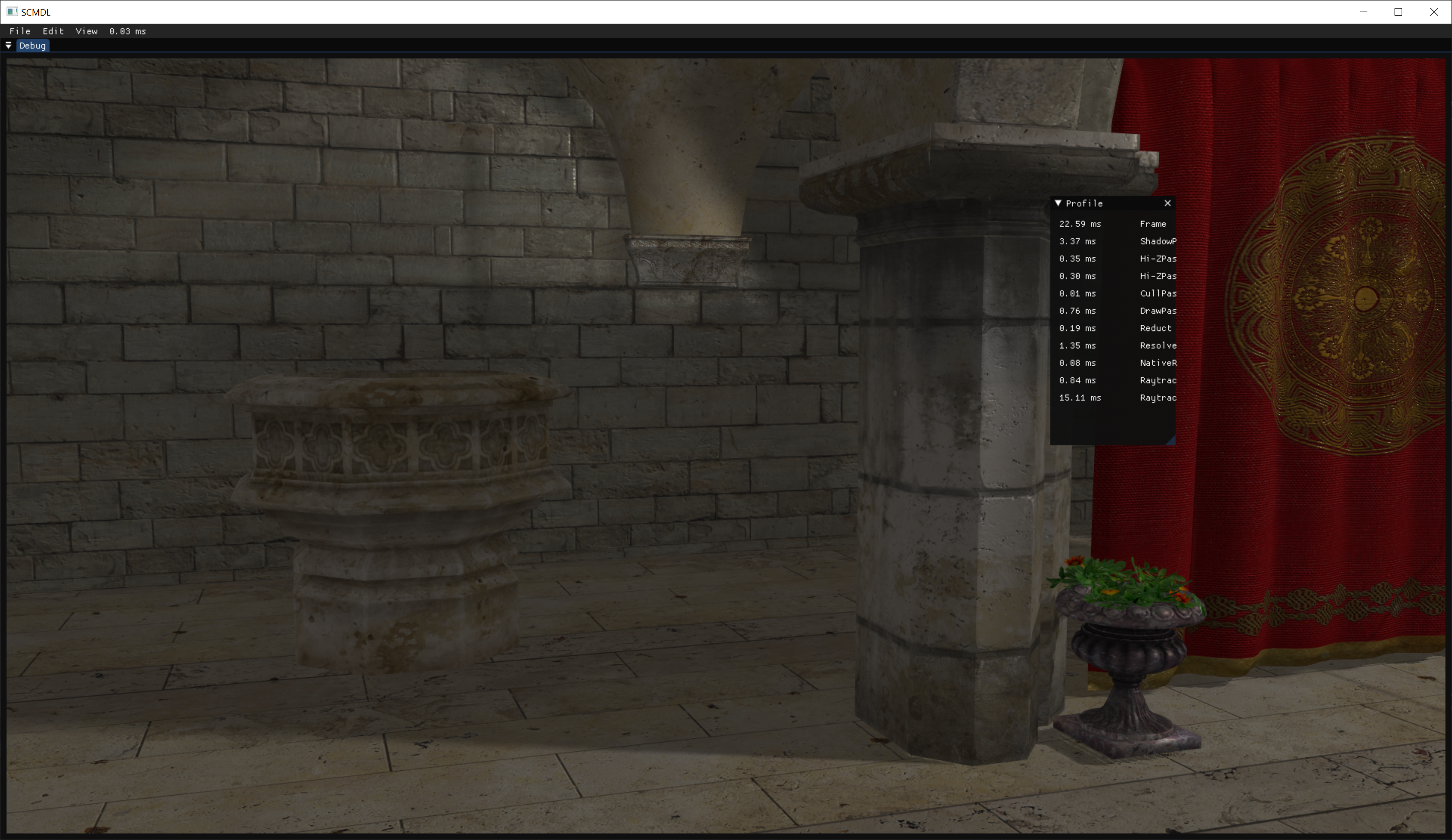

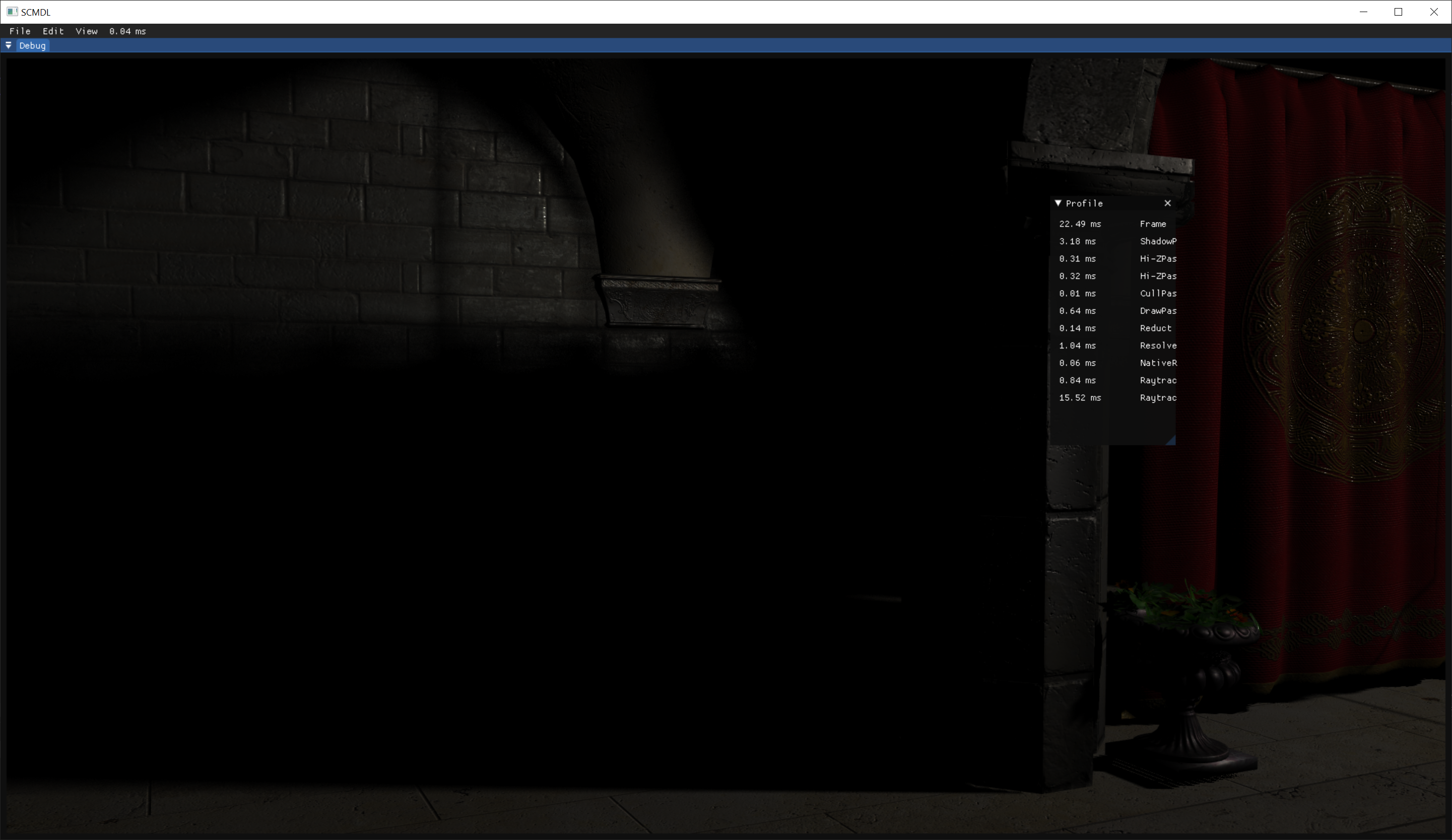

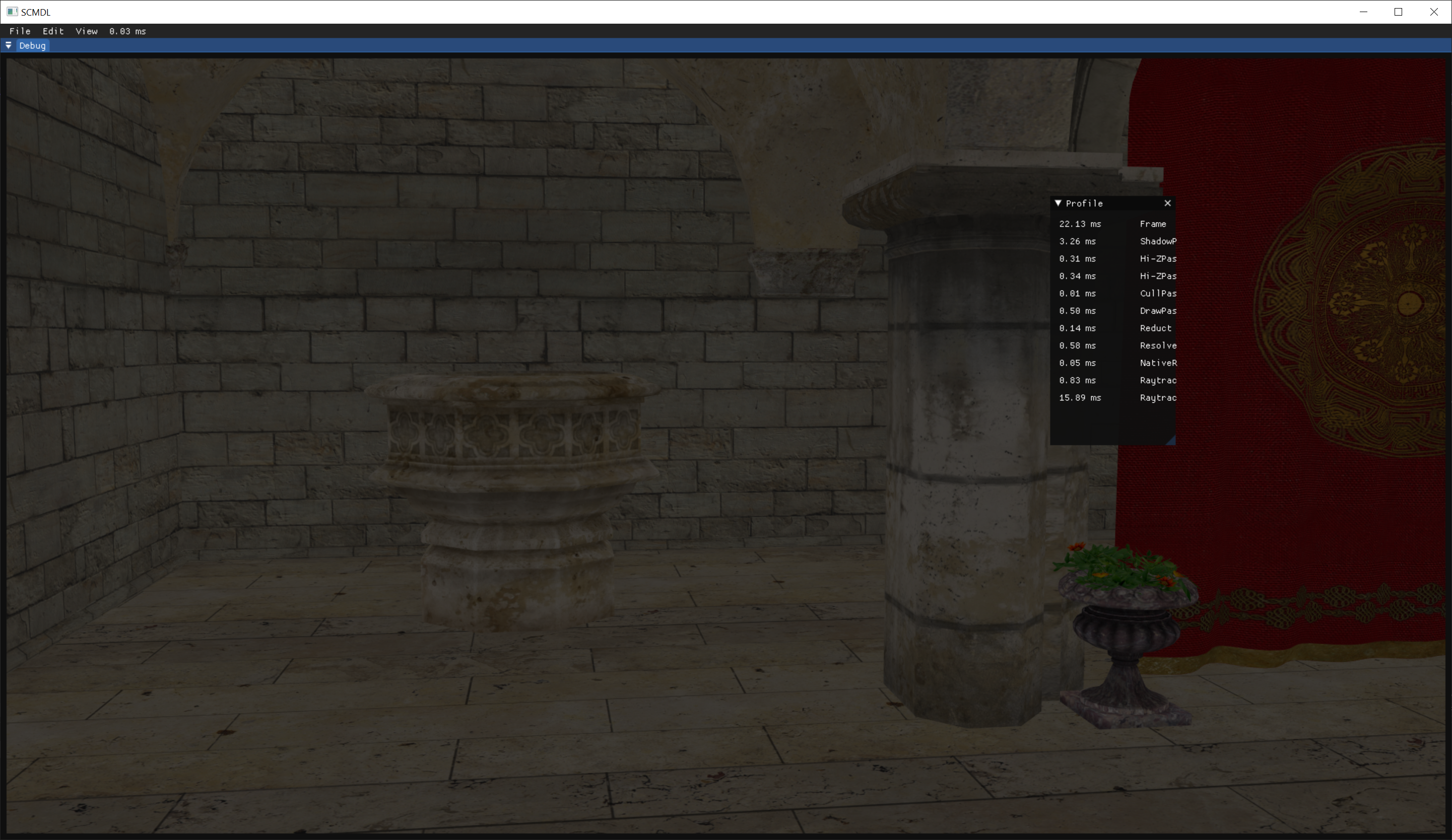

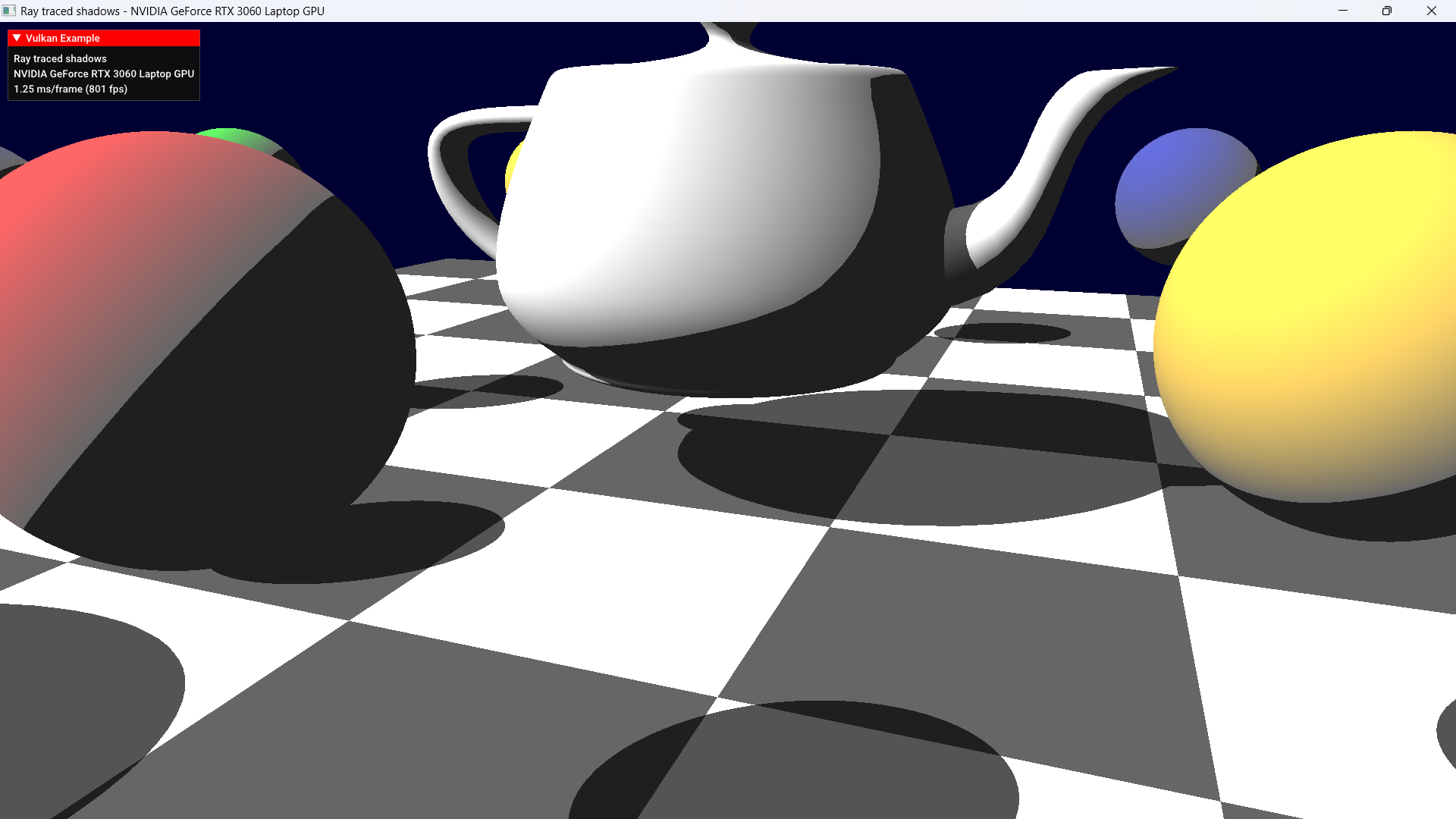

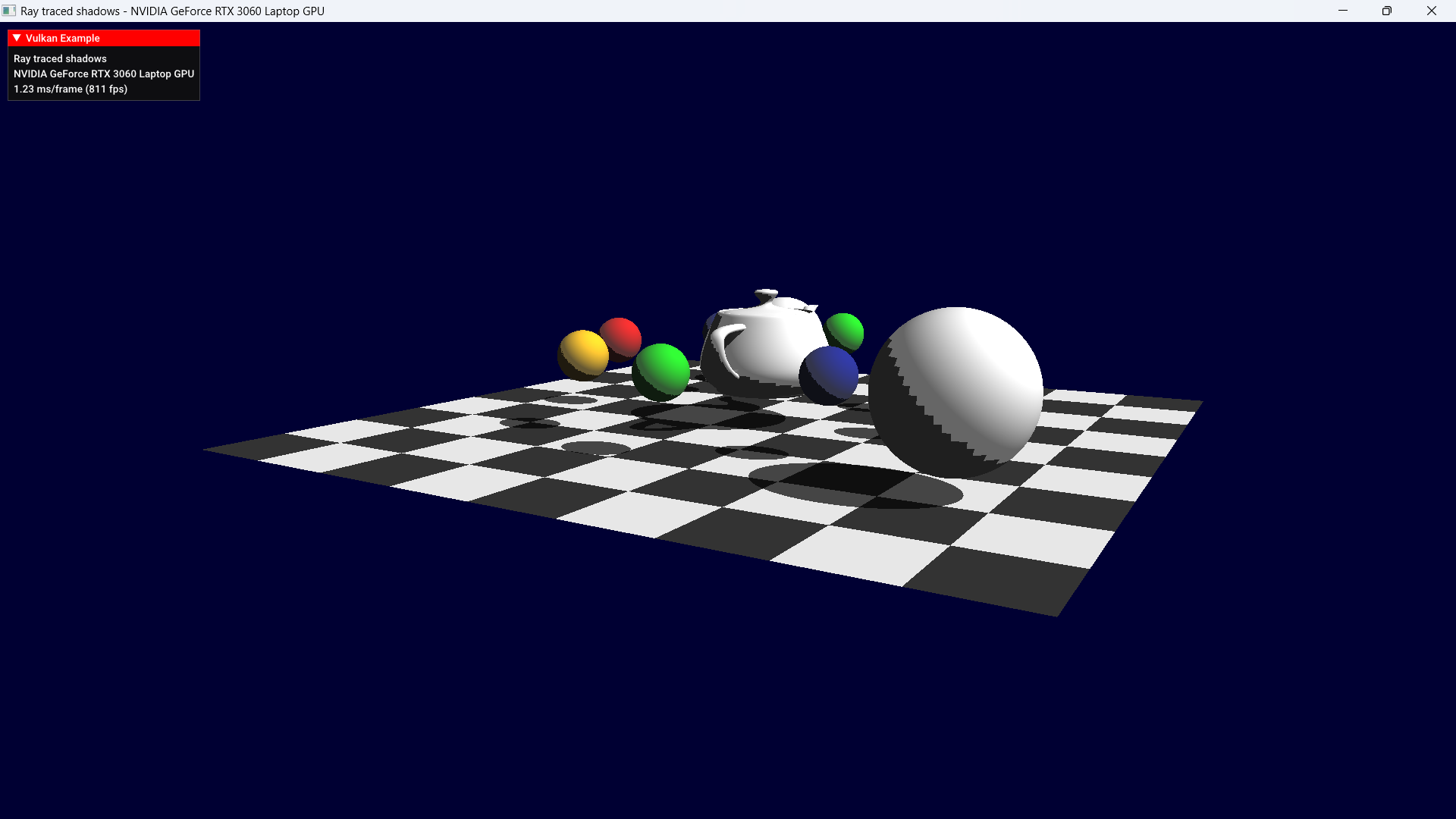

With the reflectionscene.gltf file that I downloaded using download_assets.py, it becomes immediately obvious that, for self-shadowed triangles, the shadow detail follows the geometric detail of the mesh. This is why you get big square patches of shadow in this scene (the spheres and teapot are basically quad-based).

I found some answers on Reddit, so I changed the closest-hit shader to the following:

...

    vec3 lightVector = normalize(ubo.lightPos.xyz);
    float dot_product = max(dot(lightVector, normal), 0.0);
    hitValue = v0.color.rgb * dot_product + vec3(0.2, 0.2, 0.2);

    // Shadow casting
    float tmin = 0.001;
    float tmax = 10000.0;
    vec3 origin = gl_WorldRayOriginEXT + gl_WorldRayDirectionEXT * gl_HitTEXT;
    vec3 biased_origin = origin + normal * 0.01;
    shadowed = true;

    // Trace shadow ray and offset indices to match shadow hit/miss shader group indices
    traceRayEXT(topLevelAS, gl_RayFlagsTerminateOnFirstHitEXT | gl_RayFlagsOpaqueEXT | gl_RayFlagsSkipClosestHitShaderEXT, 0xFF, 0, 0, 1, biased_origin, tmin, lightVector, tmax, 2);

    if (shadowed)
    {
        hitValue *= 0.3;
    }

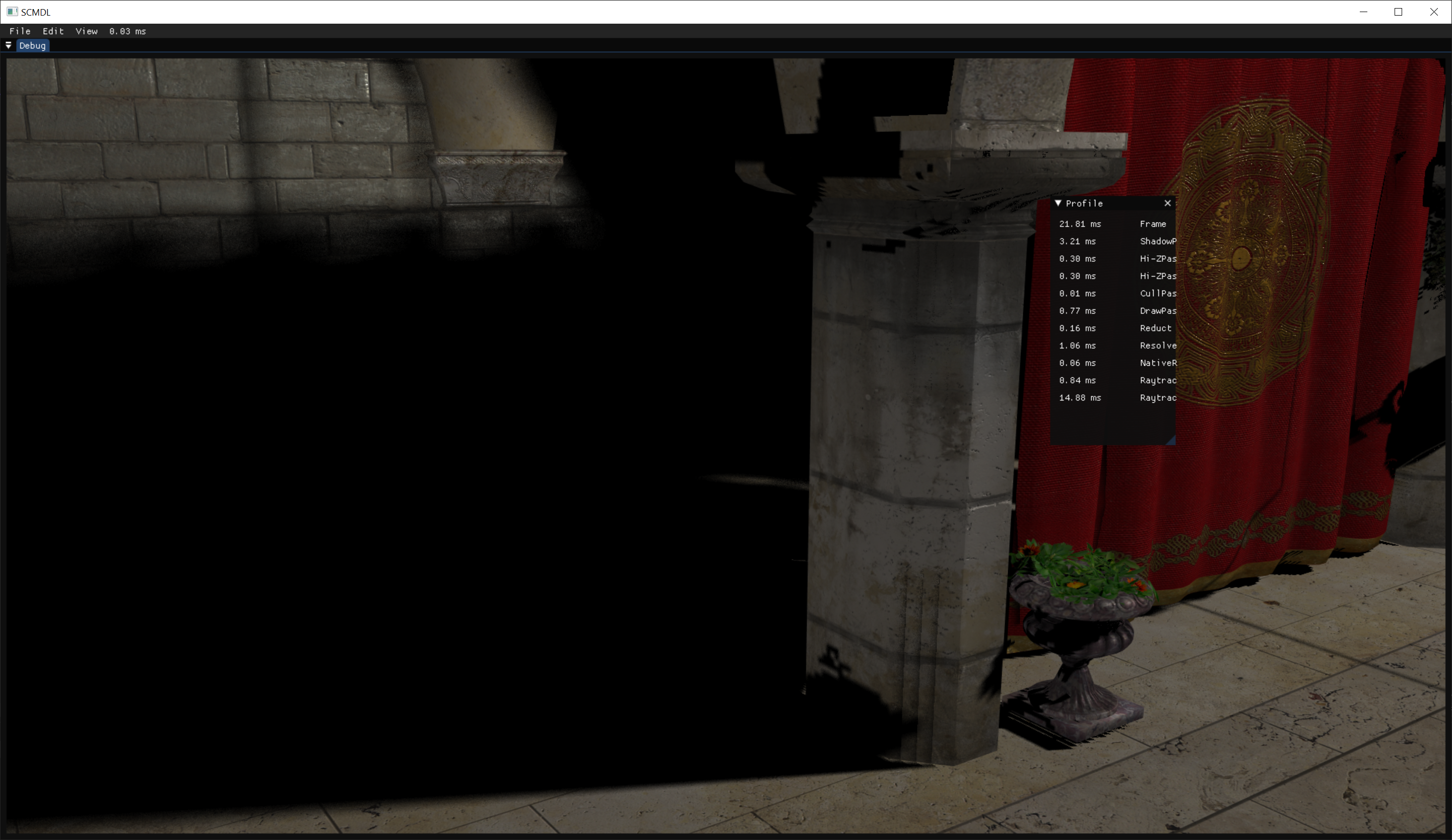

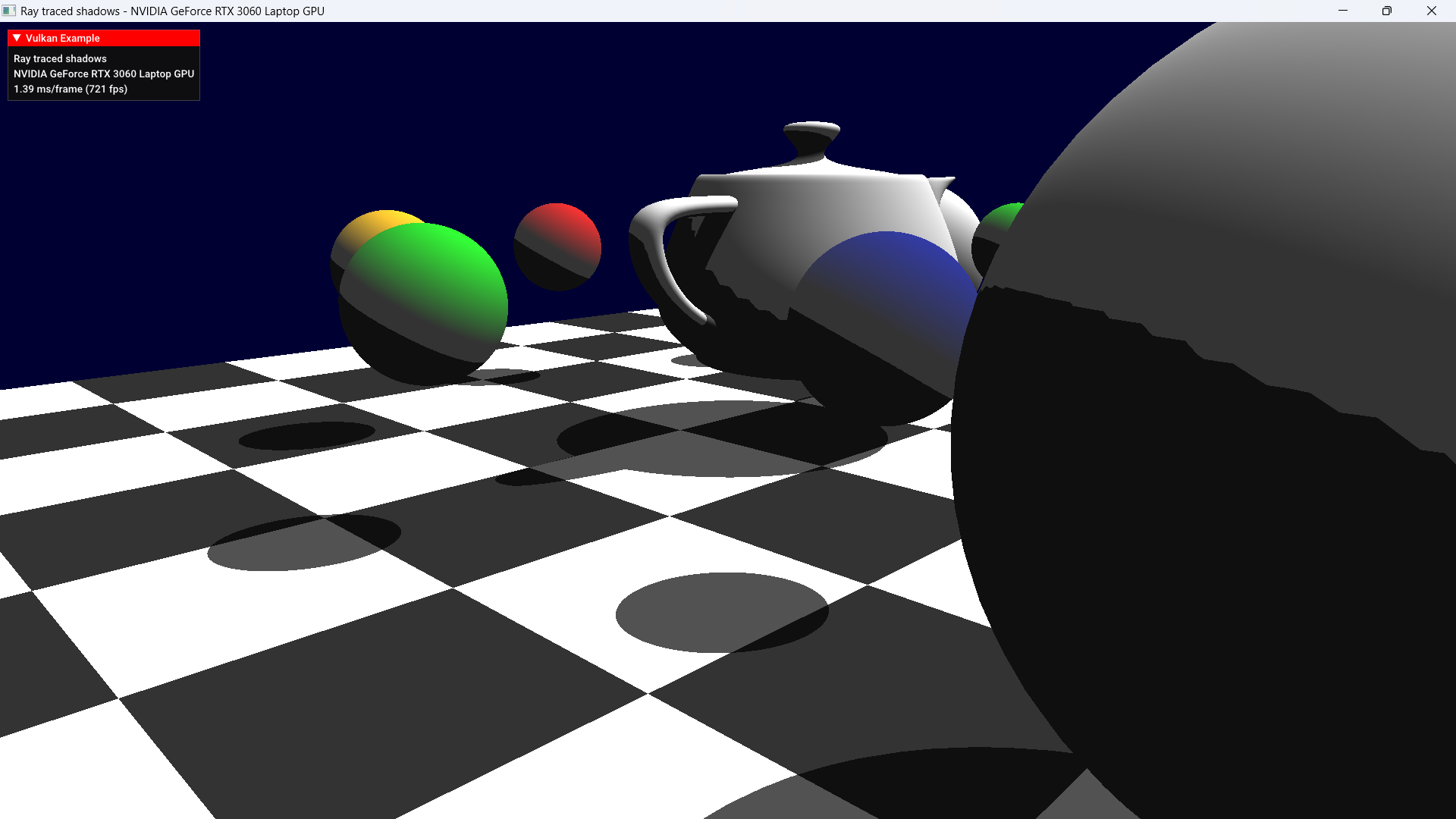

It looks moderately better, but not perfect.

Any ideas on how to really fix the problem? I was expecting pixel-perfect shadows like the ones on the checkerboard, but that is not what I get.