Hehe, 2010: ‘Turn banding into noise!’ 2020: ‘Turn noise into blotches!’ :D

But looks great. Can you coarsely tell which tasks take what time?

Using timestamp queries to profile the GPU works pretty well, btw.

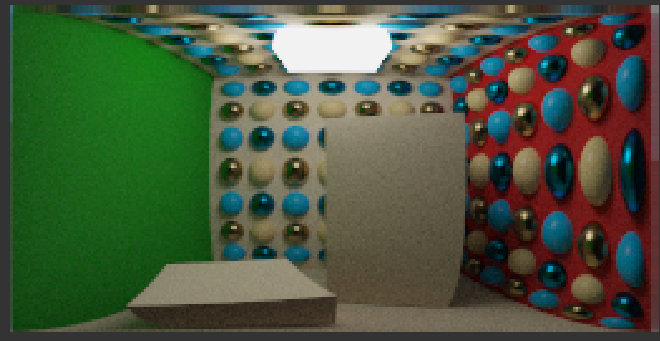

Yeah, that's at 25 samples. If I do 1000 samples, it looks wayyyyyy nicer, especially after the noise reduction.

Thanks for your help again.

Fake DOF using blur?

Not doing it properly using RT?

At least for a reference image?

Thinking of it, I guess RT DOF and MB might break any denoiser out there… : )

Btw, one fun thing I did with PT was to play with non-planar projections:

In this image you have an FOV of 180°, so you can see enemies beside you, and you can see your own feet for better jumping.

I tried to concentrate the distortion at the edges, so looking straight ahead still feels pretty normal.

But at one image per minute, I couldn't really tell whether it would be fun to play. : )
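For anyone wanting to try something similar, here is a minimal sketch of one standard wide-angle mapping, the stereographic projection, which can cover a 180° horizontal field of view. It is not necessarily the mapping used for the image above; it is swapped in because it keeps shapes locally undistorted and pushes most of the stretching toward the edges. Assumptions: camera space with +z forward, +x right, +y up, and the function names `stereographic_ray` and `pixel_to_ndc` are hypothetical.

```python
import math


def pixel_to_ndc(px, py, width, height):
    """Pixel center -> normalized coords: nx = +-1 at the left/right edges, y points up.

    ny uses the same scale as nx, so pixels stay square.
    """
    half_w = width / 2.0
    nx = (px + 0.5 - half_w) / half_w
    ny = (height / 2.0 - (py + 0.5)) / half_w
    return nx, ny


def stereographic_ray(nx, ny, half_fov=math.pi / 2):
    """Map normalized coords to a unit ray direction (camera space, +z forward).

    half_fov is the angle from the view axis at nx = +-1;
    pi/2 gives a 180 degree horizontal field of view.
    """
    r = math.hypot(nx, ny)
    if r == 0.0:
        return (0.0, 0.0, 1.0)  # center pixel looks straight ahead
    # Stereographic mapping: r = k * tan(theta / 2), with k chosen so r = 1 maps to half_fov.
    k = 1.0 / math.tan(half_fov / 2.0)
    theta = 2.0 * math.atan(r / k)  # angle between this ray and the view axis
    s = math.sin(theta) / r
    return (nx * s, ny * s, math.cos(theta))
```

Near the center the mapping is almost linear, so straight-ahead views look close to a normal planar camera. Note that the image corners have r > 1, so with a 180° horizontal FOV their rays point slightly behind the camera.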

JoeJ said:

Fake DOF using blur?

Not doing it proper using RT?

At least for a reference image?

Thinking of it, I guess RT DOF and MB might break any denoiser out there… : )

How do I do it in the ray tracer? I was going to render to an image, then blur that, then apply it, using compute shaders. MB? I'm still a newb, don't forget. :) Thanks again for your insight, man.

JoeJ said:

Btw, one fun thing I did with PT was to play with non-planar projections:

…

Awesome stuff, man. Mine runs at one frame per second. LOL

Oh yes, it also appears that the Intel Open Image Denoise library antialiases the output image. That's pretty cool!

taby said:

How do I do it in the ray tracer?

The same way you do AA.

For AA, you generate a random primary ray through a point within the pixel's square, then you accumulate (average) many such samples.

The goal is to integrate over the whole area of the pixel, not just take a point sample at its center, which would give the same aliasing as rasterization.

For DOF you technically do the same, but you spread the ray origins over a larger area than a single pixel (the lens aperture) to get the blur.
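A minimal sketch of that random supersampling, assuming a simple planar (pinhole) camera looking down +z and a hypothetical `trace(direction)` callback that returns a radiance value (a plain float here, for brevity). Each sample picks a random point inside the pixel square, and the average approximates the integral over the pixel area.

```python
import math
import random


def pinhole_dir(sx, sy, width, height, fov_y=math.radians(60)):
    """Planar camera: continuous pixel coords -> unit ray direction (+z forward, y up)."""
    tan_half = math.tan(fov_y / 2.0)
    aspect = width / height
    x = (2.0 * sx / width - 1.0) * tan_half * aspect
    y = (1.0 - 2.0 * sy / height) * tan_half
    n = math.sqrt(x * x + y * y + 1.0)
    return (x / n, y / n, 1.0 / n)


def render_pixel(px, py, width, height, trace, spp=16, rng=random):
    """Average `spp` random primary rays through the square [px, px+1) x [py, py+1)."""
    total = 0.0
    for _ in range(spp):
        sx = px + rng.random()  # random point inside the pixel, not its center
        sy = py + rng.random()
        total += trace(pinhole_dir(sx, sy, width, height))
    return total / spp
```

With a single sample at the pixel center you get the jagged edges of rasterization; with many random samples, an edge crossing the pixel turns into a smooth fractional coverage value.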
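A common way to do this is the thin-lens camera model: jitter the ray origin across a disk-shaped lens aperture and aim every ray at the point where the ordinary pinhole ray crosses the focal plane. Points on that plane stay sharp, and everything nearer or farther gets blurred. A minimal sketch, assuming camera space with +z forward, a lens centered at the origin, and hypothetical parameter names `aperture_radius` and `focus_dist`:

```python
import math
import random


def sample_disk(radius, rng=random):
    """Uniform random point on a disk (sqrt keeps the density uniform over the area)."""
    r = radius * math.sqrt(rng.random())
    phi = 2.0 * math.pi * rng.random()
    return r * math.cos(phi), r * math.sin(phi)


def thin_lens_ray(pinhole_dir, aperture_radius, focus_dist, rng=random):
    """Return (origin, unit direction) for one depth-of-field sample.

    pinhole_dir: direction of the ordinary pinhole ray for this pixel sample (+z forward, z > 0).
    """
    dx, dy, dz = pinhole_dir
    t = focus_dist / dz  # where the pinhole ray crosses the plane z = focus_dist
    focus_point = (dx * t, dy * t, focus_dist)
    lx, ly = sample_disk(aperture_radius, rng)  # random point on the lens
    origin = (lx, ly, 0.0)
    v = tuple(f - o for f, o in zip(focus_point, origin))
    n = math.sqrt(sum(c * c for c in v))
    return origin, tuple(c / n for c in v)
```

In practice you combine this with the random pixel jitter used for AA, so each sample integrates over both the pixel area and the lens area at once; an aperture radius of 0 reduces it to the plain pinhole camera.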

To figure out the math, you can study how camera lenses work. This tells you how to set up the projection (it could be planar like in games, or a spherical fisheye like a GoPro camera, but usually something in between…), and also how the focus setting determines the range of distances at which the image is sharp (all rays traced from a sensor pixel meet at the same point at the focus distance) or blurry (away from that distance the rays spread out into a cone, so the pixel sees an area instead of a point).

Surely RT guys have some standard formulas for this that are easy to find, I guess.

I would research this, because if you get it wrong, a large outdoor scene can look like a small diorama model on a table, which is an unexpected problem for us, but also an interesting one.
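For reference, these are the two standard thin-lens relations usually used here: the lens equation (focal length $f$, object distance $S$, image distance $v$ behind the lens), and the diameter $c$ of the circle of confusion on the sensor for a point at distance $S_2$ when a lens with aperture diameter $A$ is focused at distance $S_1$:

$$
\frac{1}{f} = \frac{1}{S} + \frac{1}{v},
\qquad
c = A \,\frac{\lvert S_2 - S_1 \rvert}{S_2}\,\frac{f}{S_1 - f}
$$

So $c = 0$ exactly at the focus distance, it grows as $S_2$ moves away from $S_1$, and it scales linearly with the aperture $A$, which is why a wide aperture gives a shallow depth of field.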

Hmm. Thank you. I googled it and found some promising tutorials and papers. I owned GPU Gems 1 and 2 at one point. :)

I'm getting about 8 frames per second (without fog) on my 3060. If I start shooting out random rays, that rate will drop considerably. I'm desperate to get compute shaders working, so I think I'll try the old distance-based blur method.
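A minimal CPU sketch of that distance-based blur, handy for prototyping before porting it to a compute shader (one thread per output pixel). It computes a per-pixel blur radius from depth using the thin-lens circle-of-confusion formula, then averages a disk of neighbors with that radius. Assumptions: a grayscale image and a depth buffer as 2D Python lists, all lengths in meters, depth > 0 and focus_dist > focal_len; the names `coc_radius_px`, `depth_blur` and `max_r` are hypothetical, and the radius is capped to keep the loop cheap.

```python
import math


def coc_radius_px(depth, focus_dist, focal_len, aperture, sensor_width, image_width, max_r=8):
    """Blur radius in pixels from the thin-lens circle of confusion (lengths in meters)."""
    c = aperture * abs(depth - focus_dist) / depth * focal_len / (focus_dist - focal_len)
    r = 0.5 * c / sensor_width * image_width  # CoC diameter on the sensor -> radius in pixels
    return min(r, max_r)


def depth_blur(image, depth, **lens):
    """Naive gather blur: each output pixel averages a disk whose radius is its own CoC.

    image, depth: 2D lists (rows of floats) of the same size.
    lens: focus_dist, focal_len, aperture, sensor_width (and optionally max_r).
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            r = coc_radius_px(depth[y][x], image_width=w, **lens)
            ri = int(math.ceil(r))
            total, count = 0.0, 0
            for yy in range(max(0, y - ri), min(h, y + ri + 1)):
                for xx in range(max(0, x - ri), min(w, x + ri + 1)):
                    if (xx - x) ** 2 + (yy - y) ** 2 <= r * r:  # inside the blur disk
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count  # count >= 1: the center pixel is always inside
    return out
```

Gathering with each pixel's own radius is the simplest variant; it is known to produce halos and hard edges where in-focus and out-of-focus objects overlap, which more elaborate post-process DOF methods (e.g. separate near and far layers) try to reduce.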